- We've seen examples of “good” algorithms to solve a linear system ($Ax = b$, $A \in \mathbb{R}^{n \times n}$)

- The above find a solution using $\mathcal{O}(n^3)$ flops, where $n$ is the number of unknowns

- Consider now an example of a “bad” algorithm, known as Cramer's rule

-

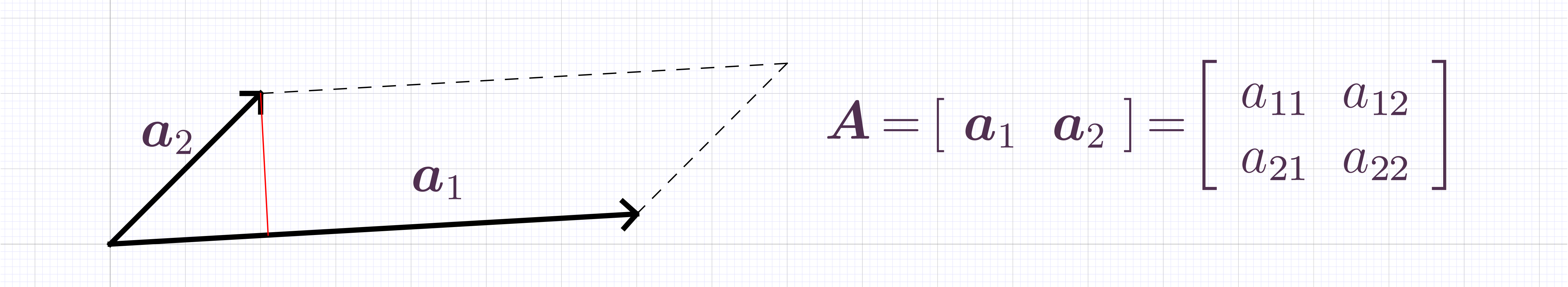

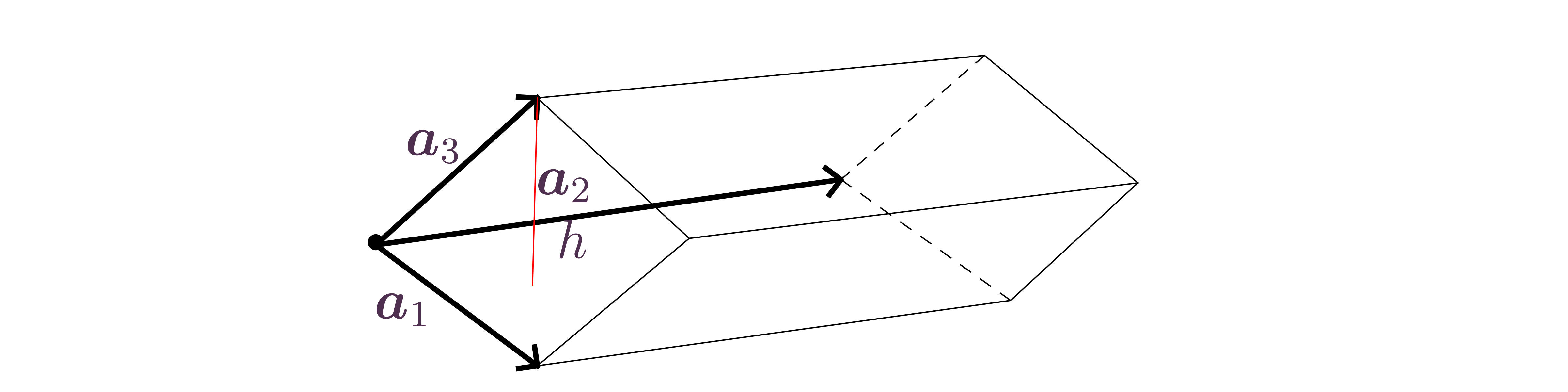

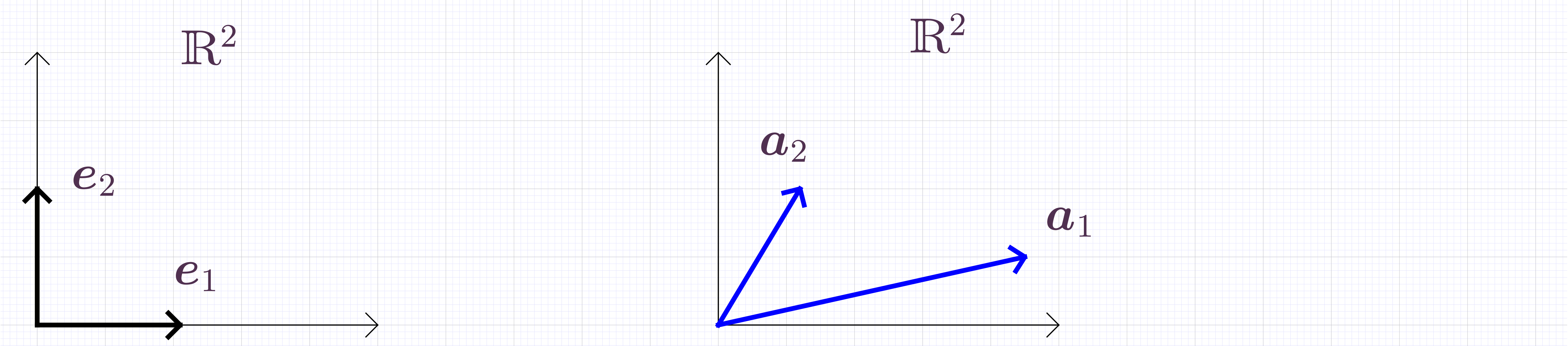

In Cramer's rule, $x_i = \Delta_i / \Delta$, the denominator $\Delta = \det(A)$ is the principal determinant of the linear system

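- The numerator $\Delta_i$ arises from minors of $A$: it is the determinant of $A$ with column $i$ replaced by $b$, and expanding along that column gives a sum of signed minors of $A$ weighted by the entries of $b$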

- Why bad? For $n$ unknowns it requires computation of $n + 1$ determinants, each of which costs $\mathcal{O}(n^3)$ flops, leading to overall $\mathcal{O}(n^4)$
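To make the determinant count concrete, here is a minimal sketch of Cramer's rule in plain Python. The function names `det_by_elimination` and `cramer_solve` are illustrative choices, not taken from the slides, and the sketch assumes each determinant is computed by Gaussian elimination with partial pivoting, which is what gives the cubic cost per determinant.

```python
def det_by_elimination(a):
    """Determinant via Gaussian elimination with partial pivoting (cubic cost)."""
    a = [row[:] for row in a]  # work on a copy so the caller's matrix is untouched
    n = len(a)
    det = 1.0
    for k in range(n):
        # Choose the largest pivot in column k to limit round-off.
        p = max(range(k, n), key=lambda r: abs(a[r][k]))
        if a[p][k] == 0.0:
            return 0.0
        if p != k:
            a[k], a[p] = a[p], a[k]
            det = -det  # a row swap flips the sign of the determinant
        det *= a[k][k]
        for r in range(k + 1, n):
            factor = a[r][k] / a[k][k]
            for c in range(k, n):
                a[r][c] -= factor * a[k][c]
    return det


def cramer_solve(a, b):
    """Solve a x = b by Cramer's rule: one determinant for Delta, n for the Delta_i."""
    n = len(a)
    delta = det_by_elimination(a)
    if delta == 0.0:
        raise ValueError("singular matrix: Cramer's rule does not apply")
    x = []
    for i in range(n):
        # Delta_i: determinant of a with column i replaced by b.
        a_i = [row[:i] + [b[r]] + row[i + 1:] for r, row in enumerate(a)]
        x.append(det_by_elimination(a_i) / delta)
    return x


a = [[2.0, 1.0, -1.0], [-3.0, -1.0, 2.0], [-2.0, 1.0, 2.0]]
b = [8.0, -11.0, -3.0]
x = cramer_solve(a, b)
print(x)
```

The loop in `cramer_solve` runs a full elimination once per unknown, whereas a single elimination on the augmented system already yields the whole solution. That repetition is where the extra factor of $n$ comes from.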

-

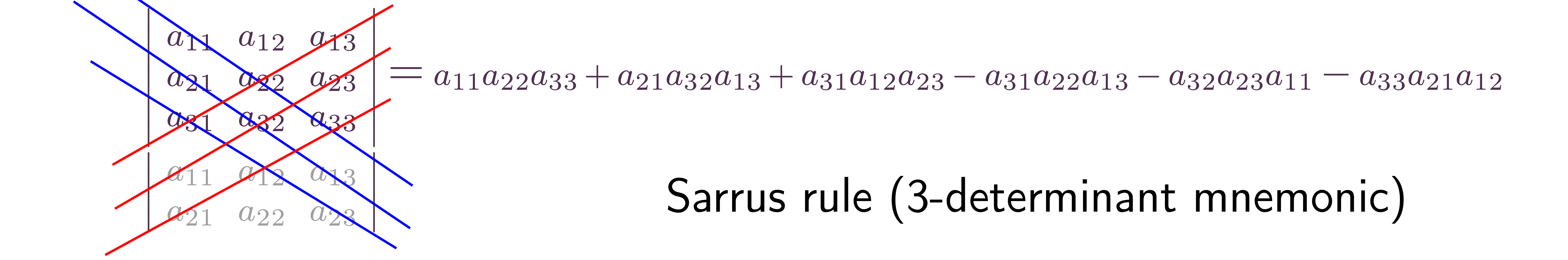

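Could be even worse! Before the ubiquity of computers, determinants were often computed by the algebraic definition, $\det(A) = \sum_{\sigma \in S_n} \operatorname{sgn}(\sigma) \prod_{i=1}^{n} a_{i,\sigma(i)}$, a sum over all permutations $\sigma$ of $\{1, \dots, n\}$.

There are $n!$ terms in the sum. Each term costs $n - 1$ multiplications, i.e. $\mathcal{O}(n)$ flops. Using this in Cramer's rule gives about $(n + 1) \cdot n! \cdot n$ flops, an incredibly large number for even small $n$.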
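As a rough illustration of how fast this grows, the sketch below evaluates a determinant directly from the permutation sum and tabulates the multiplication count for Cramer's rule built on it. The names `det_leibniz` and `cramer_leibniz_mults` are hypothetical, and the count assumes $n - 1$ multiplications per term and ignores additions and sign evaluation.

```python
from itertools import permutations
from math import factorial


def det_leibniz(a):
    """Determinant from the algebraic definition: a sum over all n! permutations."""
    n = len(a)
    total = 0
    for sigma in permutations(range(n)):
        # Sign of the permutation is (-1) raised to the number of inversions.
        inversions = sum(
            1 for i in range(n) for j in range(i + 1, n) if sigma[i] > sigma[j]
        )
        term = -1 if inversions % 2 else 1
        for i in range(n):
            term *= a[i][sigma[i]]
        total += term
    return total


def cramer_leibniz_mults(n):
    """Multiplications for n + 1 determinants, each with n! terms of n - 1 products."""
    return (n + 1) * factorial(n) * (n - 1)


# Compare against n**4, the order of growth when each determinant uses elimination.
for n in (5, 10, 20):
    print(n, cramer_leibniz_mults(n), n**4)
```

The permutation-based count grows factorially, so it overtakes any polynomial bound such as $n^4$ almost immediately.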