MATH347 L20: Least squares applications

New concepts:

- Polynomial interpolation
- LSQ polynomial approximant

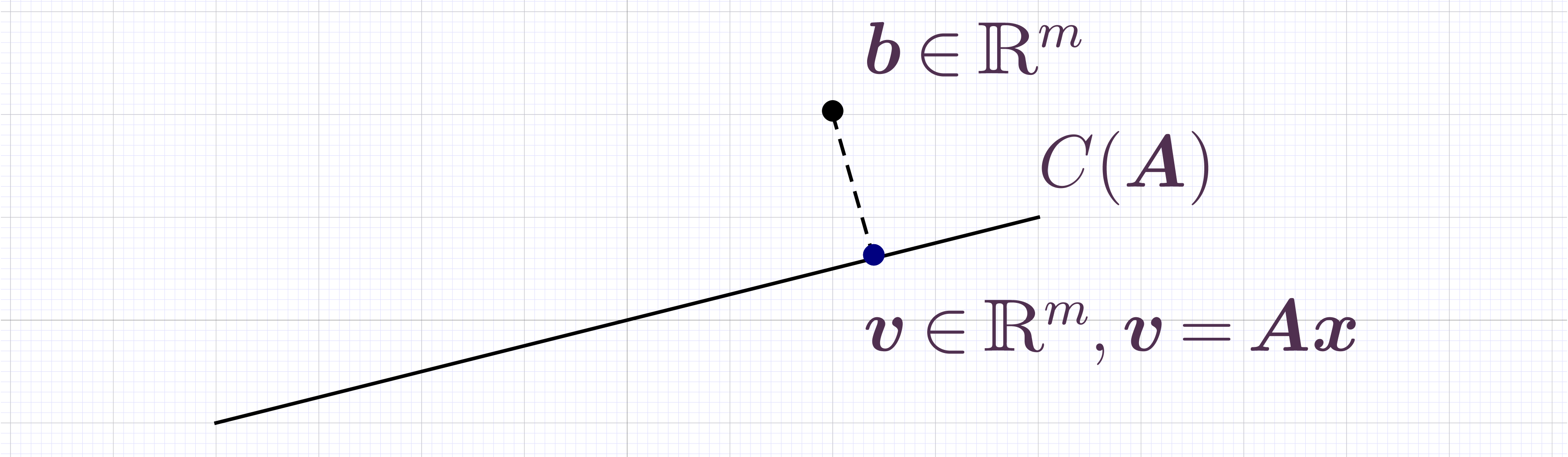

Recall: least squares problem formulation/solution

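Consider approximating $\mathbf{b} \in \mathbb{R}^m$ by a linear combination of the $n$ column vectors of $A \in \mathbb{R}^{m\times n}$

Make the approximation error $\mathbf{e} = \mathbf{b} - A\mathbf{x}$ as small as possible

Error is measured in the 2-norm $\Rightarrow$ the least squares problem (LSQ)

$$\min_{\mathbf{x}\in\mathbb{R}^n} \lVert \mathbf{b} - A\mathbf{x} \rVert_2$$

Solution is the projection of $\mathbf{b}$ onto the column space $C(A)$: $A\mathbf{x} = QQ^T\mathbf{b}$

The vector $\mathbf{x}$ is found by back-substitution from $R\mathbf{x} = Q^T\mathbf{b}$, where $A = QR$ is the (economy-size) QR factorization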
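A minimal MATLAB/Octave sketch of this recipe on a small made-up problem (the matrix `A` and vector `b` are arbitrary illustrative values, not taken from the lecture): the economy-size factorization `qr(A,0)` gives $A = QR$ with $Q \in \mathbb{R}^{m\times n}$, back-substitution solves $R\mathbf{x} = Q^T\mathbf{b}$, and the residual $\mathbf{e} = \mathbf{b} - A\mathbf{x}$ is then checked to be orthogonal to $C(A)$, as the projection interpretation requires.

```matlab
% Hypothetical small LSQ problem: m = 5 equations, n = 2 unknowns
A = [1 0; 1 1; 1 2; 1 3; 1 4];
b = [1; 2; 2; 4; 5];

% Economy-size QR: Q is 5x2 with orthonormal columns, R is 2x2 upper triangular
[Q, R] = qr(A, 0);

% Back-substitution on R x = Q' b
x = R \ (Q' * b);

% Projection of b onto C(A) and the corresponding residual
p = A * x;
e = b - p;

% The residual is orthogonal to every column of A (normal equations A'e = 0)
disp(norm(A' * e))        % ~ 0 up to round-off
% Same answer as the built-in least squares solve via backslash
disp(norm(x - A \ b))     % ~ 0 up to round-off
```

For these five points the fit is the line with intercept 0.8 and slope 1. Backslash applied to a rectangular full-rank `A` also returns the least squares solution, which is why the two results agree.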

Applications: polynomial approximations

LSQ has myriad applications throughout science

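Consider data $\mathcal{D} = \{(x_i, y_i),\ i = 1, \dots, m\}$

The polynomial interpolant of degree $m-1$, $p(t) = c_0 + c_1 t + \dots + c_{m-1} t^{m-1}$, passes through the data points: $p(x_i) = y_i$ for $i = 1, \dots, m$

Impose these $m$ conditions to obtain the linear system $A\mathbf{c} = \mathbf{y}$, with $A = [\,\mathbf{1}\ \ \mathbf{x}\ \ \mathbf{x}^2\ \cdots\ \mathbf{x}^{m-1}\,] \in \mathbb{R}^{m\times m}$ (powers taken componentwise)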
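For instance, with the $m = 4$ nodes $x_i = i$ and data from the cubic $y = 1 - 2x + 3x^2 - 4x^3$ used in the session that follows, the conditions $p(x_i) = y_i$ written out give the $4\times 4$ Vandermonde system below (a worked illustration; the matrix is nonsingular because the nodes are distinct, so the interpolant is unique):

$$
\begin{bmatrix}
1 & 1 & 1 & 1\\
1 & 2 & 4 & 8\\
1 & 3 & 9 & 27\\
1 & 4 & 16 & 64
\end{bmatrix}
\begin{bmatrix} c_0\\ c_1\\ c_2\\ c_3 \end{bmatrix}
=
\begin{bmatrix} -2\\ -23\\ -86\\ -215 \end{bmatrix},
\qquad
\mathbf{c} = \begin{bmatrix} 1\\ -2\\ 3\\ -4 \end{bmatrix}.
$$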

```matlab
m=4; x=transpose(1:m); y=1 - 2*x + 3*x.^2 - 4*x.^3;       % data sampled from a known cubic
A=[x.^0 x.^1 x.^2 x.^3]; [Q,R]=qr(A); c = R \ (Q'*y); c'   % expect approximately 1 -2 3 -4
```

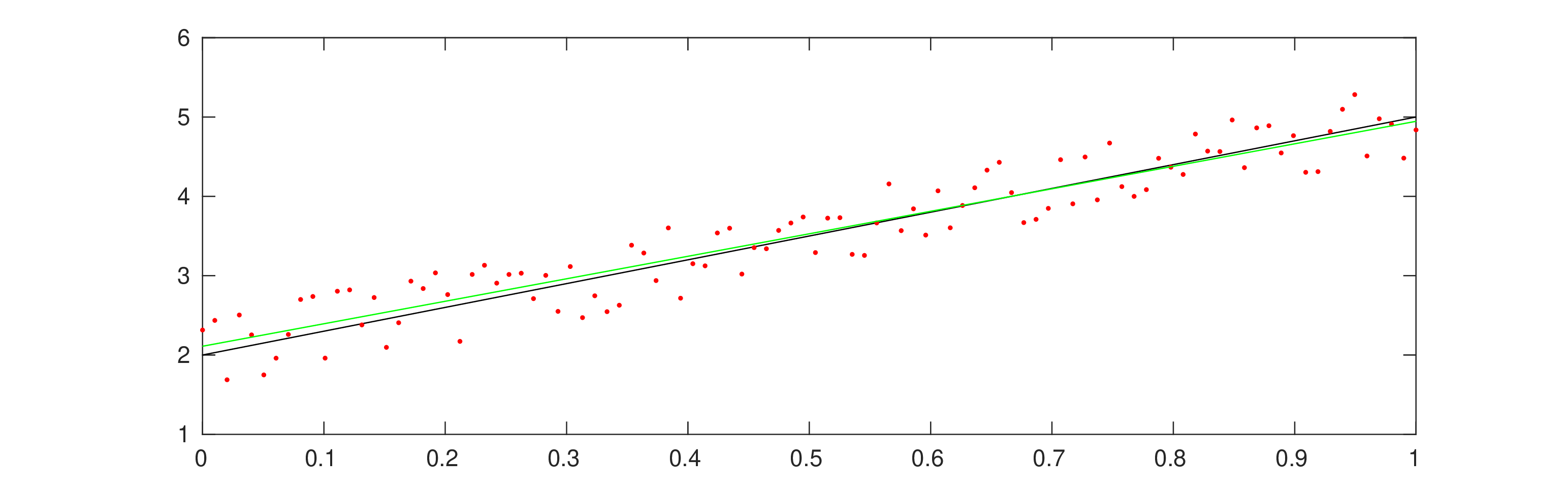
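The session above hard-codes four columns. A minimal sketch of the same construction for any number of points, assuming an arbitrary illustrative data function `f` and node count `m = 6` (neither is from the lecture), builds the Vandermonde matrix column by column and uses the built-in `polyfit` only as a cross-check (it returns coefficients highest power first, hence the `fliplr`):

```matlab
% Hypothetical data: m points sampled from an arbitrary smooth function
m = 6;
x = linspace(0, 1, m)';
f = @(t) exp(t) .* cos(3*t);
y = f(x);

% Vandermonde matrix A = [x.^0 x.^1 ... x.^(m-1)], one column per power
A = zeros(m, m);
for j = 1:m
  A(:, j) = x.^(j-1);
end

% Square system: QR followed by back-substitution gives the interpolant coefficients
[Q, R] = qr(A);
c = R \ (Q' * y);

% Interpolation conditions p(x_i) = y_i hold up to round-off
disp(norm(A*c - y))

% Cross-check against polyfit of degree m-1 (coefficients ordered from highest power)
cp = fliplr(polyfit(x, y, m-1))';
disp(norm(c - cp))
```

The loop avoids relying on implicit expansion (`x.^(0:m-1)`), which older MATLAB releases do not support.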

LSQ polynomial approximant - first degree (linear regression)

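Consider noisy data containing many measurements ($m \gg 1$)

Assume data without noise would conform to some polynomial law, here the line $z = c_0 + c_1 x$, so that the measurements are $y_i = c_0 + c_1 x_i + \epsilon_i$,

with $\epsilon_i$ a random number uniformly distributed within $[-1/2, 1/2]$.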
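For the straight-line law, the normal equations $A^TA\,\mathbf{c} = A^T\mathbf{y}$ with $A = [\,\mathbf{1}\ \ \mathbf{x}\,]$ reduce to a $2\times 2$ system whose solution is the familiar regression formula (a standard worked derivation added for reference; $\bar{x}$, $\bar{y}$ denote sample means):

$$
\begin{bmatrix} m & \sum_i x_i \\ \sum_i x_i & \sum_i x_i^2 \end{bmatrix}
\begin{bmatrix} c_0 \\ c_1 \end{bmatrix}
=
\begin{bmatrix} \sum_i y_i \\ \sum_i x_i y_i \end{bmatrix}
\quad\Longrightarrow\quad
c_1 = \frac{\sum_i (x_i - \bar{x})(y_i - \bar{y})}{\sum_i (x_i - \bar{x})^2},
\qquad
c_0 = \bar{y} - c_1 \bar{x}.
$$

The MATLAB session for this example solves the same problem via QR instead, which avoids forming $A^TA$, whose condition number is the square of that of $A$.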

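```matlab
m=100; x=linspace(0,1,m)';                                    % m sample points in [0,1]
c0=2; c1=3; z=c0+c1*x; y=(z+rand(m,1)-0.5);                   % exact line z, noisy data y
A=ones(m,2); A(:,2)=x(:); [Q,R]=qr(A,0); c = R \ (Q'*y); c'   % economy QR + back-substitution
w=A*c; plot(x,z,'k',x,y,'.r',x,w,'g');                        % exact law (black), data (red dots), fit (green)
```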

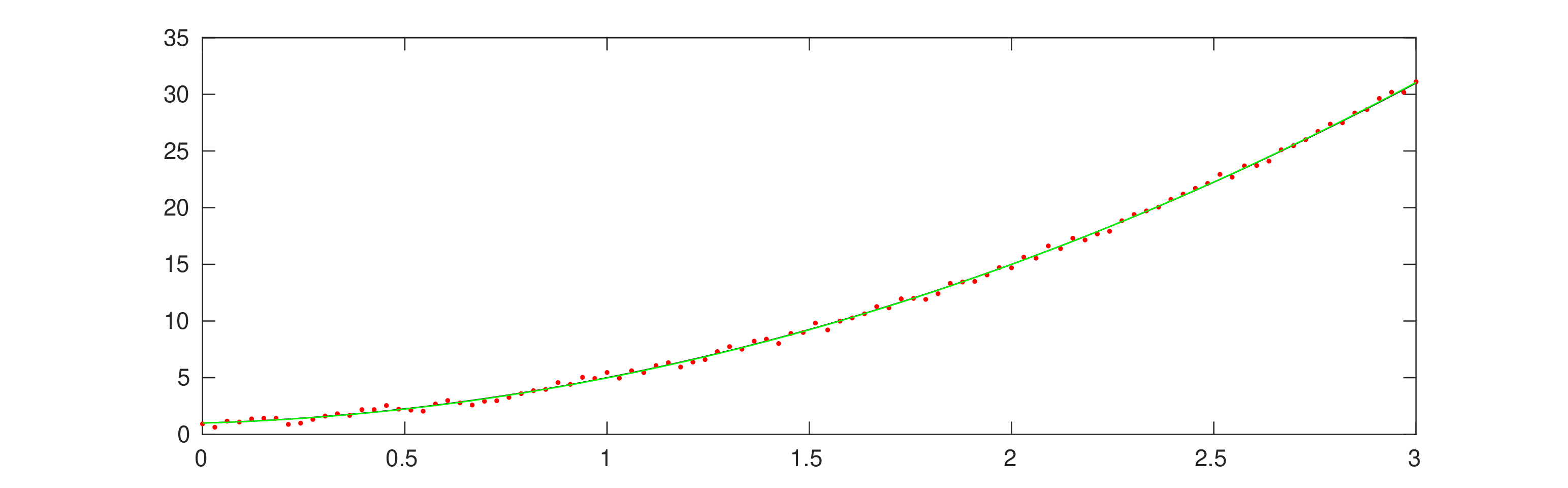
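Because the noise is random, each run of the session above gives slightly different coefficients. A minimal sketch that regenerates data the same way and compares three routes to the same least squares line: economy-size QR with back-substitution, the normal equations `(A'*A)\(A'*y)`, and the built-in `polyfit` (the variable names `cQR`, `cNE`, `cPF` are illustrative):

```matlab
% Noisy linear data as in the session above (noise differs on each run)
m = 100; x = linspace(0,1,m)';
c0 = 2; c1 = 3; z = c0 + c1*x; y = z + rand(m,1) - 0.5;
A = [ones(m,1) x];

% Route 1: economy-size QR, then back-substitution
[Q, R] = qr(A, 0);
cQR = R \ (Q'*y);

% Route 2: normal equations A'A c = A'y (forms A'A, squaring the condition number)
cNE = (A'*A) \ (A'*y);

% Route 3: built-in polyfit, which returns [slope intercept] (highest power first)
cPF = fliplr(polyfit(x, y, 1))';

disp([cQR cNE cPF])   % three columns that agree up to round-off
disp([c0; c1])        % exact coefficients, for comparison
```

For a well-conditioned two-column problem like this all three agree; the QR route is the one that stays reliable as the basis grows or becomes ill-conditioned.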

LSQ polynomial approximant - second degree

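Consider noisy data containing many measurements ($m \gg 1$)

Assume data without noise would conform to some polynomial law, here the parabola $z = c_0 + c_1 x + c_2 x^2$, so that the measurements are $y_i = c_0 + c_1 x_i + c_2 x_i^2 + \epsilon_i$,

with $\epsilon_i$ a random number uniformly distributed within $[-1/2, 1/2]$.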
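Written out, the quadratic law gives an overdetermined $m\times 3$ system; it is the same LSQ problem as before, now with the three basis vectors $\mathbf{1}, \mathbf{x}, \mathbf{x}^2$ (powers componentwise), solved by back-substitution after an economy-size QR factorization $A = QR$:

$$
A = \begin{bmatrix} 1 & x_1 & x_1^2 \\ 1 & x_2 & x_2^2 \\ \vdots & \vdots & \vdots \\ 1 & x_m & x_m^2 \end{bmatrix},
\qquad
\min_{\mathbf{c}\in\mathbb{R}^3} \lVert \mathbf{y} - A\mathbf{c} \rVert_2,
\qquad
R\,\mathbf{c} = Q^T\mathbf{y}.
$$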

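```matlab
m=100; x=linspace(0,3,m)';                                                % m sample points in [0,3]
c0=1; c1=1; c2=3; z=c0+c1*x+c2*x.^2; y=(z+rand(m,1)-0.5);                 % exact parabola z, noisy data y
A=ones(m,3); A(:,2)=x(:); A(:,3)=x(:).^2; [Q,R]=qr(A,0); c=R\(Q'*y); c'   % economy QR + back-substitution
w=A*c; plot(x,z,'k',x,y,'.r',x,w,'g');                                    % exact law (black), data (red dots), fit (green)
```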
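When the underlying law is not known in advance, one way to see which degree the data support is to refit with increasing degree and watch the residual. A minimal sketch (the degree range 0 to 5 is an arbitrary illustrative choice) regenerates the quadratic data and records $\lVert\mathbf{y} - A\mathbf{c}\rVert_2$ for each degree:

```matlab
% Noisy quadratic data as in the session above (noise differs on each run)
m = 100; x = linspace(0,3,m)';
c0 = 1; c1 = 1; c2 = 3; z = c0 + c1*x + c2*x.^2; y = z + rand(m,1) - 0.5;

% Fit LSQ polynomials of degree n = 0,...,nmax and record the residual 2-norm
nmax = 5;
r = zeros(nmax+1, 1);
for n = 0:nmax
  A = zeros(m, n+1);
  for j = 1:n+1
    A(:, j) = x.^(j-1);      % columns 1, x, ..., x^n
  end
  [Q, R] = qr(A, 0);          % economy-size QR
  c = R \ (Q'*y);             % back-substitution
  r(n+1) = norm(y - A*c);
end

% Residuals never increase with n (each basis contains the previous one);
% here they typically drop sharply up to n = 2 and then level off near the noise level
disp([(0:nmax)' r])
```

The monotone decrease holds for any data; the level at which the residual flattens out depends on the random noise, roughly $\sqrt{m/12}$ for uniform noise of width 1.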