MATH347 L23: Schur, singular value decompositions

-

New concepts:

-

An orthogonal eigenvalue-revealing decomposition: Schur

-

Computability

-

Non-computability of polynomial roots

-

The need for an additional orthogonal decomposition

-

Interpreting the eigenvalue decomposition

-

Motivating the singular value decomposition

-

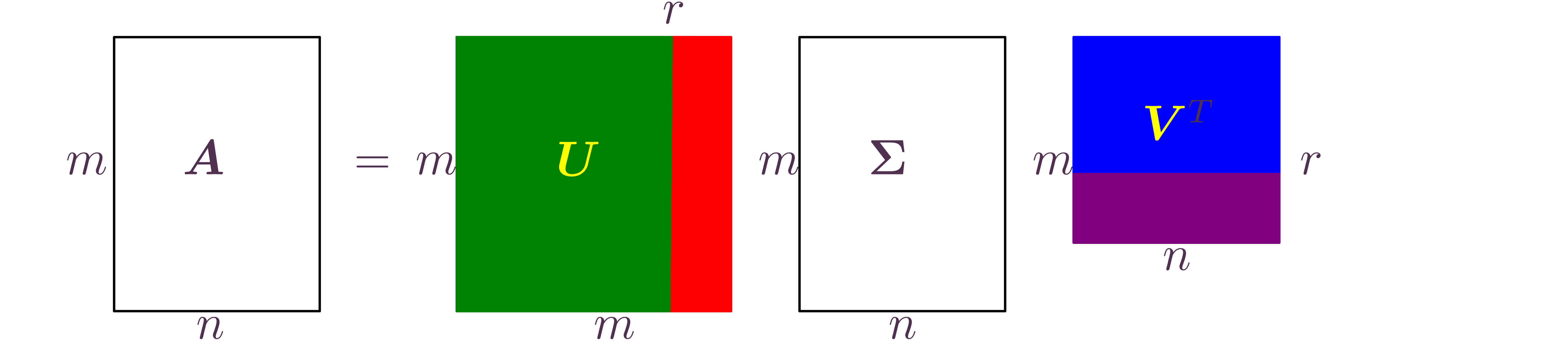

The singular value decomposition (SVD)

-
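This is an illustration added here, not the lecture's own method. It is a minimal pure-Python sketch and assumes a small real matrix whose eigenvalues are real with distinct magnitudes. Eigenvalues are roots of the characteristic polynomial, so for n ≥ 5 they cannot in general be computed in finitely many steps, and practical algorithms are iterative. The sketch uses unshifted QR iteration (a swapped-in standard technique) to drive the matrix toward an upper triangular Schur form A = Q T Q^T, where the diagonal of T reveals the eigenvalues. It then obtains singular values as square roots of the eigenvalues of A^T A. The helper names `qr_gram_schmidt` and `qr_iteration` are hypothetical.

```python
import math


def transpose(A):
    return [list(row) for row in zip(*A)]


def matmul(A, B):
    Bt = transpose(B)  # columns of B as rows
    return [[sum(a * b for a, b in zip(row, col)) for col in Bt] for row in A]


def qr_gram_schmidt(A):
    """QR factorization of a square, full-rank matrix by classical Gram-Schmidt."""
    n = len(A)
    cols = transpose(A)
    q_cols = []
    R = [[0.0] * n for _ in range(n)]
    for j in range(n):
        v = list(cols[j])
        for i in range(j):
            # Component of column j along the i-th orthonormal vector
            R[i][j] = sum(q * c for q, c in zip(q_cols[i], cols[j]))
            v = [vk - R[i][j] * qk for vk, qk in zip(v, q_cols[i])]
        R[j][j] = math.sqrt(sum(x * x for x in v))
        q_cols.append([x / R[j][j] for x in v])
    return transpose(q_cols), R


def qr_iteration(A, steps=200):
    """Unshifted QR iteration: A_{k+1} = R_k Q_k = Q_k^T A_k Q_k.

    Returns (Q, T) with A = Q T Q^T. T approaches upper triangular form
    (a Schur form) under the stated assumption on the eigenvalues.
    """
    n = len(A)
    T = [list(row) for row in A]
    Q = [[float(i == j) for j in range(n)] for i in range(n)]
    for _ in range(steps):
        Qk, Rk = qr_gram_schmidt(T)
        T = matmul(Rk, Qk)   # orthogonal similarity transform of T
        Q = matmul(Q, Qk)    # accumulate the orthogonal factor
    return Q, T


A = [[4.0, 1.0], [2.0, 3.0]]

# Schur-like form: eigenvalues appear on the diagonal of T
Q, T = qr_iteration(A)
eigenvalues = [T[i][i] for i in range(len(T))]

# Singular values: square roots of the eigenvalues of the symmetric A^T A
_, S = qr_iteration(matmul(transpose(A), A))
singular_values = [math.sqrt(S[i][i]) for i in range(len(S))]

print("eigenvalues:", eigenvalues)
print("singular values:", singular_values)
```

For this matrix the eigenvalues are 5 and 2, while the singular values are roughly 5.12 and 1.95. The eigenvalue decomposition and the SVD carry different information. Production routines (for example in LAPACK) add shifts, Hessenberg reduction, and deflation, all of which this sketch omits.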