MATH347 Course review

What is linear algebra and why is it so important to so many applications?

Basic operations: defined to express linear combinations
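
As a minimal sketch (using NumPy, which the course materials here do not prescribe, and a hypothetical 3×2 matrix), the product \(\boldsymbol{A}\boldsymbol{x}\) is the linear combination of the columns of \(\boldsymbol{A}\) with the entries of \(\boldsymbol{x}\) as coefficients:

```python
import numpy as np

# Hypothetical 3x2 matrix: its columns a_1, a_2 are the vectors being combined.
A = np.array([[1.0, 2.0],
              [0.0, 1.0],
              [3.0, -1.0]])
x = np.array([2.0, -1.0])  # coefficients of the linear combination

# Matrix-vector product b = A x
b = A @ x

# The same vector built explicitly as x_1 a_1 + x_2 a_2
b_combo = x[0] * A[:, 0] + x[1] * A[:, 1]

# b = (0, -1, 7) either way
print(b, b_combo)
```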

Linear operators, Fundamental Theorem of Linear Algebra (FTLA)
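
One way to see the FTLA numerically is sketched below, assuming NumPy and a hypothetical 3×4 matrix whose third row is the sum of the first two (so its rank is 2). The full SVD supplies orthonormal bases for all four fundamental subspaces, with dimensions \(r\), \(n-r\), \(r\), \(m-r\):

```python
import numpy as np

# Hypothetical 3x4 matrix of rank 2 (row 3 = row 1 + row 2).
A = np.array([[1.0, 2.0, 0.0, 1.0],
              [0.0, 1.0, 1.0, 1.0],
              [1.0, 3.0, 1.0, 2.0]])
m, n = A.shape

U, s, Vt = np.linalg.svd(A)      # full SVD: U is m x m, Vt is n x n
r = np.linalg.matrix_rank(A)     # rank r

col_space  = U[:, :r]      # C(A)   in R^m, dimension r
left_null  = U[:, r:]      # N(A^T) in R^m, dimension m - r
row_space  = Vt[:r, :].T   # C(A^T) in R^n, dimension r
null_space = Vt[r:, :].T   # N(A)   in R^n, dimension n - r

print(r, null_space.shape[1], left_null.shape[1])  # 2 2 1
```

The null space is orthogonal to the row space and the left null space is orthogonal to the column space, which can be checked with `A @ null_space` and `left_null.T @ A` (both numerically zero).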

Factorizations: more convenient expressions of linear combinations

\(\displaystyle \boldsymbol{L}\boldsymbol{U}=\boldsymbol{A}, \quad \boldsymbol{Q}\boldsymbol{R}=\boldsymbol{A}, \quad \boldsymbol{X}\boldsymbol{\Lambda}\boldsymbol{X}^{-1}=\boldsymbol{A}, \quad \boldsymbol{U}\boldsymbol{\Sigma}\boldsymbol{V}^T=\boldsymbol{A}\)
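
The four factorizations can be checked on a small example. This is a sketch assuming NumPy: `np.linalg.qr`, `np.linalg.eig` and `np.linalg.svd` are library routines, while `lu_no_pivot` is a hypothetical helper written here because NumPy has no LU routine; it performs Gaussian elimination without row exchanges and so assumes every pivot is nonzero (the hypothetical matrix below satisfies this and has three distinct real eigenvalues).

```python
import numpy as np

def lu_no_pivot(A):
    """Hypothetical helper: Doolittle LU without row exchanges (assumes nonzero pivots)."""
    n = A.shape[0]
    L = np.eye(n)
    U = A.astype(float).copy()
    for k in range(n - 1):
        for i in range(k + 1, n):
            L[i, k] = U[i, k] / U[k, k]      # elimination multiplier
            U[i, k:] -= L[i, k] * U[k, k:]   # zero out entry (i, k)
    return L, U

A = np.array([[4.0, 3.0, 0.0],
              [6.0, 3.0, 1.0],
              [0.0, 2.0, 5.0]])

L, U = lu_no_pivot(A)
Q, R = np.linalg.qr(A)
lam, X = np.linalg.eig(A)          # columns of X are eigenvectors
Us, sigma, Vt = np.linalg.svd(A)

# Each product should reproduce A up to rounding
for name, F in [("LU", L @ U),
                ("QR", Q @ R),
                ("X Lambda X^-1", X @ np.diag(lam) @ np.linalg.inv(X)),
                ("U Sigma V^T", Us @ np.diag(sigma) @ Vt)]:
    print(name, np.allclose(F, A))
```

In practice LU is computed with partial pivoting (for example SciPy's `scipy.linalg.lu`, which returns a permutation factor as well); the no-pivot version is shown only because it mirrors the elimination steps directly.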

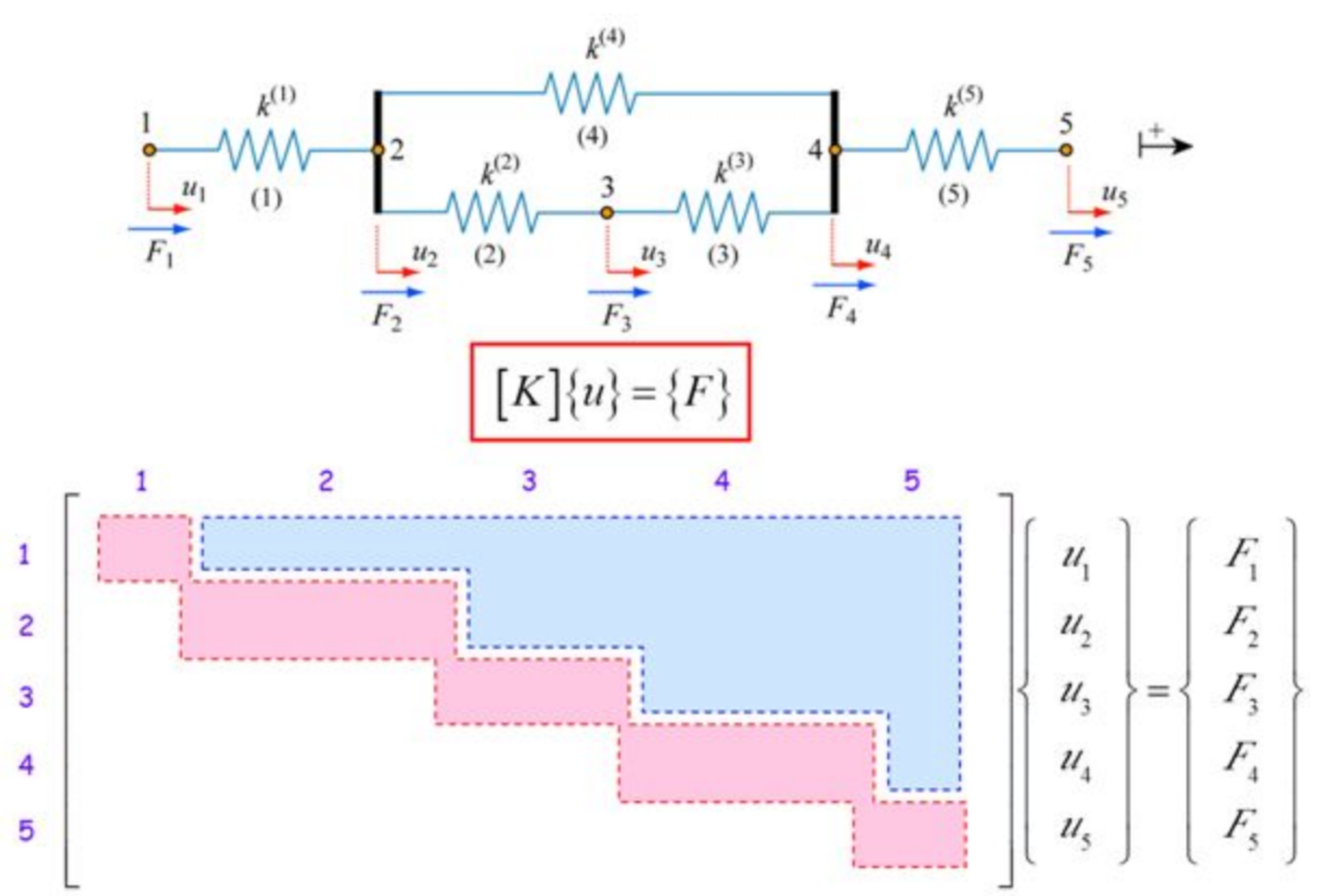

Solving linear systems (change of basis) \(\boldsymbol{I}\boldsymbol{b}=\boldsymbol{A}\boldsymbol{x}\)
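
A minimal sketch of the change-of-basis reading, assuming NumPy and a hypothetical 2×2 matrix whose columns form a basis: \(\boldsymbol{b}\) holds coordinates in the standard basis \(\boldsymbol{I}\), and the solution \(\boldsymbol{x}\) holds the coordinates of the same vector in the basis given by the columns of \(\boldsymbol{A}\).

```python
import numpy as np

# Hypothetical basis of R^2: the columns of A.
A = np.array([[2.0, 1.0],
              [1.0, 3.0]])
b = np.array([3.0, 5.0])   # coordinates in the standard basis I

# Solve A x = b (NumPy calls LAPACK's LU-based gesv routine)
x = np.linalg.solve(A, b)  # x = (0.8, 1.4)

# Same vector rebuilt from the new coordinates: x_1 a_1 + x_2 a_2 = b
rebuilt = x[0] * A[:, 0] + x[1] * A[:, 1]
```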

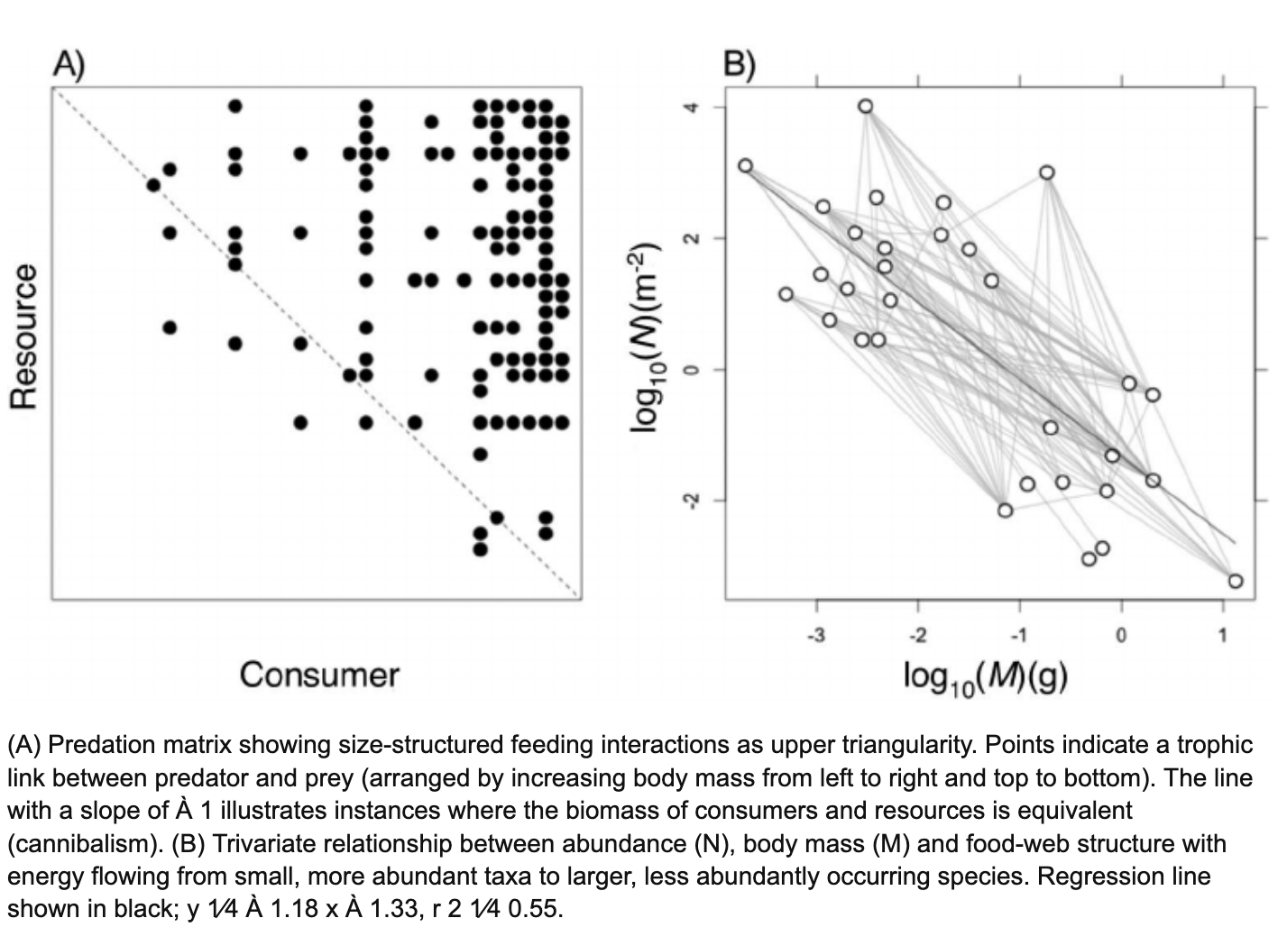

Best 2-norm approximation: least squares \(\min_{\boldsymbol{x}} \| \boldsymbol{b}-\boldsymbol{A}\boldsymbol{x} \|_2\)
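
As an illustration, assuming NumPy and hypothetical data (fitting a line \(c_0 + c_1 t\) to four points), the sketch below solves the least squares problem two ways: with the library solver and through the reduced QR factorization, \(\boldsymbol{R}\boldsymbol{x}=\boldsymbol{Q}^T\boldsymbol{b}\). At the minimizer the residual \(\boldsymbol{b}-\boldsymbol{A}\boldsymbol{x}\) is orthogonal to the column space of \(\boldsymbol{A}\).

```python
import numpy as np

# Hypothetical data: fit y = c0 + c1 t to four points.
t = np.array([0.0, 1.0, 2.0, 3.0])
b = np.array([1.0, 2.0, 2.0, 4.0])
A = np.column_stack([np.ones_like(t), t])   # 4x2: more equations than unknowns

# Route 1: library least-squares solver
x_lstsq, *_ = np.linalg.lstsq(A, b, rcond=None)

# Route 2: reduced QR (Q is 4x2, R is 2x2), then solve R x = Q^T b
Q, R = np.linalg.qr(A)
x_qr = np.linalg.solve(R, Q.T @ b)

# Residual is orthogonal to C(A): A^T (b - A x) = 0
residual = b - A @ x_lstsq
print(x_lstsq, x_qr, A.T @ residual)
```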

Exposing the structure of a linear operator from a set to itself through the eigendecomposition
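
A short sketch, assuming NumPy and a hypothetical symmetric 2×2 operator (so `np.linalg.eigh` applies and \(\boldsymbol{X}^{-1}=\boldsymbol{X}^T\)): in the eigenvector basis the operator only scales, \(\boldsymbol{A}\boldsymbol{X}=\boldsymbol{X}\boldsymbol{\Lambda}\), which makes powers such as \(\boldsymbol{A}^k=\boldsymbol{X}\boldsymbol{\Lambda}^k\boldsymbol{X}^{-1}\) easy to form.

```python
import numpy as np

# Hypothetical operator from R^2 to R^2 (symmetric).
A = np.array([[2.0, 1.0],
              [1.0, 2.0]])

lam, X = np.linalg.eigh(A)   # eigenvalues in ascending order, orthonormal eigenvectors

# In the eigenvector basis the operator acts by scaling: A X = X Lambda
print(np.allclose(A @ X, X @ np.diag(lam)))

# A^k = X Lambda^k X^{-1}, with X^{-1} = X^T for this symmetric A
k = 5
A_k = X @ np.diag(lam**k) @ X.T
```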

Exposing the structure of a linear operator between different sets through the SVD
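
For an operator between different sets, here a hypothetical map from \(\mathbb{R}^3\) to \(\mathbb{R}^2\), the SVD pairs each right singular vector \(\boldsymbol{v}_i\) with a left singular vector \(\boldsymbol{u}_i\) through \(\boldsymbol{A}\boldsymbol{v}_i=\sigma_i\boldsymbol{u}_i\). The NumPy sketch below also keeps only the largest singular triple; by the Eckart–Young theorem this is the best rank-1 approximation, with 2-norm error \(\sigma_2\).

```python
import numpy as np

# Hypothetical operator from R^3 to R^2.
A = np.array([[3.0, 1.0, 1.0],
              [-1.0, 3.0, 1.0]])

U, s, Vt = np.linalg.svd(A)   # U: 2x2, s: two singular values, Vt: 3x3

# Each v_i (row i of Vt) is mapped to sigma_i u_i (column i of U)
for i in range(len(s)):
    print(np.allclose(A @ Vt[i], s[i] * U[:, i]))

# Best rank-1 approximation and its 2-norm error
A1 = s[0] * np.outer(U[:, 0], Vt[0])
err = np.linalg.norm(A - A1, 2)   # equals s[1]
```

Here \(\boldsymbol{A}\boldsymbol{A}^T\) has eigenvalues 12 and 10, so the singular values are \(\sqrt{12}\) and \(\sqrt{10}\).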

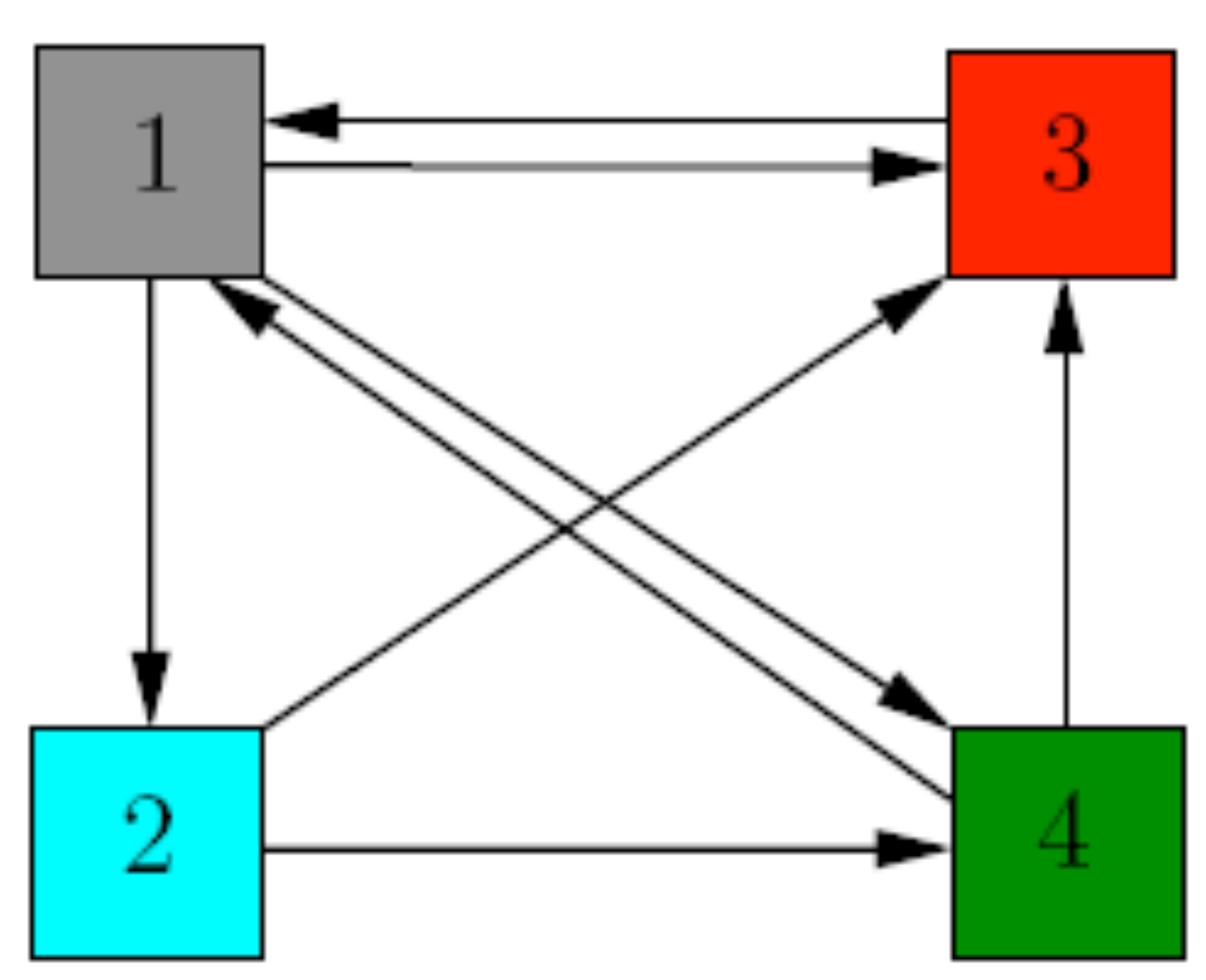

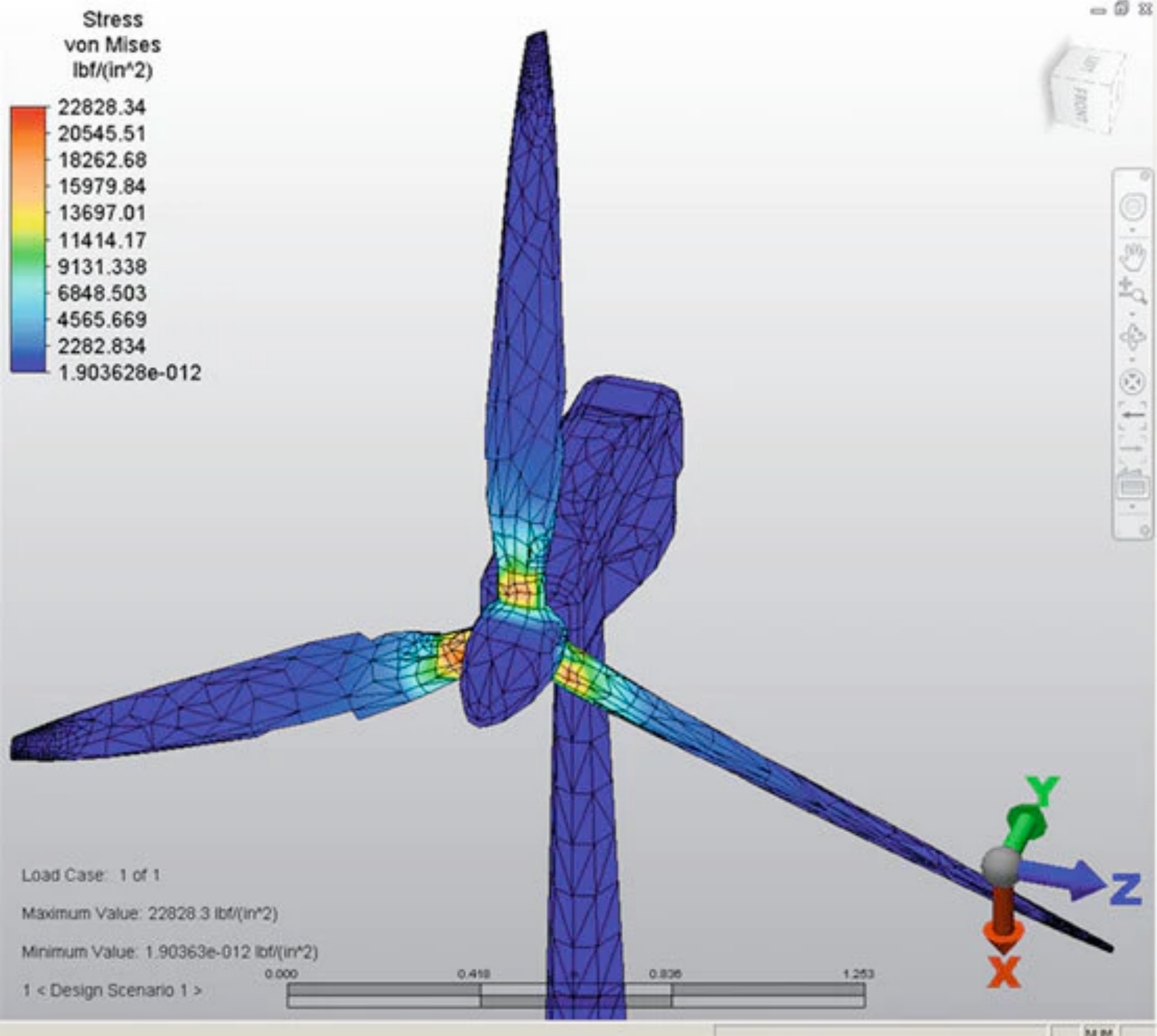

Applications: any type of correlation
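
One way correlation reduces to linear algebra is sketched below, assuming NumPy and a hypothetical data table (rows are observations, columns are variables). After centering each column and scaling it to unit 2-norm, the correlation matrix is simply the matrix of inner products \(\boldsymbol{Z}^T\boldsymbol{Z}\), and its eigenvalues are the squared singular values of \(\boldsymbol{Z}\).

```python
import numpy as np

# Hypothetical data: each row is an observation, each column a variable.
data = np.array([[1.0, 2.0, 0.5],
                 [2.0, 4.1, 0.3],
                 [3.0, 6.2, 0.9],
                 [4.0, 7.9, 0.2]])

# Center each column, then scale it to unit 2-norm
Z = data - data.mean(axis=0)
Z = Z / np.linalg.norm(Z, axis=0)

# Correlation matrix: inner products of the normalized columns
C = Z.T @ Z

# Eigenvalues of C (ascending) equal the squared singular values of Z
w, V = np.linalg.eigh(C)
print(np.allclose(C, np.corrcoef(data, rowvar=False)))
```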