|

The examples below are of complexity similar to the problems in Homework 7. Please note that even in these small dimensional cases the arithmetic gets tiresome, hiding the simplicity of the solution by projection:

The intent of Homework 7 is to:

Gain familiarity with the Gram-Schmidt algorithm;

Understand the steps in solving the least squares problem by projection;

Understand the necessity of working with symbolic representations of vectors and matrices, leaving the arithmetic to software systems (Matlab/Octave).

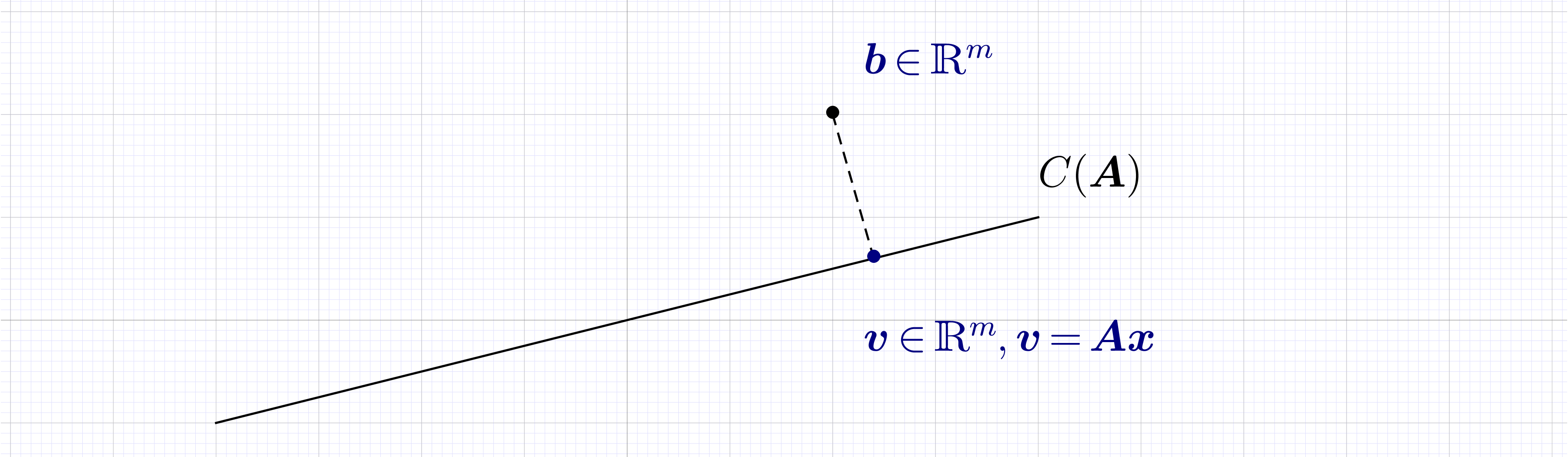

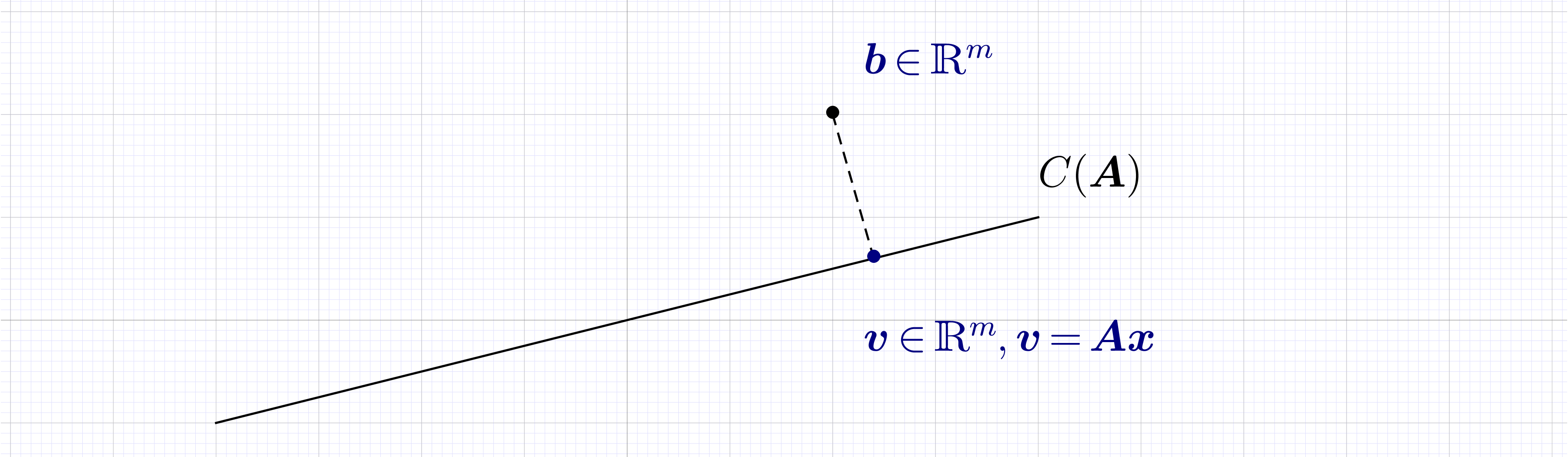

and seeks to find the scaling coefficients required to most closely approximate by linear combination of the columns of .

The solution is found by orthogonal projection of onto the column space of . It is efficient to define an orthonormal basis for the column space of through the column vectors of

computed using the Gram-Schmidt algorithm (see below). Read to state: “linear combinations of the columns of can be formed to obtain the column vectors of ”. Therefore projectiing onto the column space of is the same as projecting onto the column space of and the projection matrix is

where is a matrix with mutually orthogonal columns of unit norm, and . The matrix is (see below).

The projection of onto is a vector that (since it's in the column space of ) can also be expressed as

This leads to

Algorithm

Given vectors

Initialize ,..,,

for to

; if skip to next

for +1 to

;

end

end

return

Note that if ( is in the column space of ) then and

stating that the projection of is itself. In this case the least squares problem has a solution such that exactly.

Matrix with linearly independent columns, not in the column space

Solution. Note that the column vectors are orthogonal

Remember to first look at a problem before blindly carrying out calculations. In this case is found by scaling each column vector by its norm

and the matrix is a diagonal matrix containing the norms

Verify:

Now that the factorization has been found, here are the steps to solve the least squares problem.

Find the vector

Solve the system

Find

Computer solution (useful as verification). The above calculations can also be carried out in Matlab/Octave

>> |

A=[1 -1; 0 0; 1 1]; [Q,R]=qr(A,0) |

>> |

Q |

>> |

R |

>> |

Note that Matlab/Octave returned orthogonal vectors in the opposite orientation, highlighting that there are multiple ways to orthonormalize the columns of .

In Matlab/Octave the least squares solution is returned by the backslash operator

>> |

b=[1; 1; 1]; x=A\b; disp(x) |

>> |

Matrix with linearly independent columns, in the column space

Solution. Note that is a simple scaling of the first column of

This example again highlights the need to first look at a problem before blindly carrying out calculations.

Matrix with linearly independent columns, not in the column space

Solution. In this case the Gram-Schmidt algorithm is applied to find the factorization of . Here are the GS steps:

GS1. Find

GS2. Find . This is carried out in two sub-steps:

Subtract from its component along the previously determined direction

This vector is orthogonal to . Verify

Divide the resulting vector by its norm

Verify:

Here are the steps to solve the least squares problem.

Find the vector

Solve the system

Find

Computer solution (useful as verification). The above calculations can also be carried out in Matlab/Octave

>> |

A=[1 -1; 1 2; 1 1]; [Q,R]=qr(A,0) |

>> |

Q |

>> |

R |

In Matlab/Octave the least squares solution is returned by the backslash operator

>> |

b=[1; 2; 1]; x=A\b; disp(x) |

>> |

[24/21; 6/21] |

>> |