Overview

- FTLA-associated definitions and results

- Main problems of linear algebra

- Homework review

- $QR$ factorization (Gram-Schmidt algorithm)

- Projection

- Least-squares approach to polynomial approximation

- Set partition = collection of disjoint subsets covering the entire set

- Partitioning of vector spaces: similar, but allow $\boldsymbol{0}$ as a common element

-

,

,

- Relationships of subspaces associated with a matrix $\boldsymbol{A} \in \mathbb{R}^{m \times n}$

When is a vector space sum a direct sum?


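Lemma 1. Let $\mathcal{U}$, $\mathcal{V}$ be subspaces of vector space $\mathcal{W}$. Then $\mathcal{W} = \mathcal{U} \oplus \mathcal{V}$ if and only if

- (i) $\mathcal{W} = \mathcal{U} + \mathcal{V}$, and

- (ii) $\mathcal{U} \cap \mathcal{V} = \{\boldsymbol{0}\}$.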

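Proof. $\mathcal{W} = \mathcal{U} \oplus \mathcal{V} \Rightarrow \mathcal{W} = \mathcal{U} + \mathcal{V}$ by definition of direct sum, sum of vector subspaces. To prove that $\mathcal{U} \cap \mathcal{V} = \{\boldsymbol{0}\}$, consider $\boldsymbol{w} \in \mathcal{U} \cap \mathcal{V}$. Since $\boldsymbol{w} \in \mathcal{U}$ and $\boldsymbol{w} \in \mathcal{V}$ write $\boldsymbol{w} = \boldsymbol{w} + \boldsymbol{0}$ (with $\boldsymbol{w} \in \mathcal{U}$, $\boldsymbol{0} \in \mathcal{V}$) and $\boldsymbol{w} = \boldsymbol{0} + \boldsymbol{w}$ (with $\boldsymbol{0} \in \mathcal{U}$, $\boldsymbol{w} \in \mathcal{V}$), and since the expression $\boldsymbol{w} = \boldsymbol{u} + \boldsymbol{v}$ is unique, it results that $\boldsymbol{w} = \boldsymbol{0}$.

Now assume (i), (ii) and establish a unique decomposition. Assume there might be two decompositions of $\boldsymbol{w} \in \mathcal{W}$, $\boldsymbol{w} = \boldsymbol{u}_1 + \boldsymbol{v}_1$, $\boldsymbol{w} = \boldsymbol{u}_2 + \boldsymbol{v}_2$, with $\boldsymbol{u}_1, \boldsymbol{u}_2 \in \mathcal{U}$, $\boldsymbol{v}_1, \boldsymbol{v}_2 \in \mathcal{V}$. Obtain $\boldsymbol{u}_1 + \boldsymbol{v}_1 = \boldsymbol{u}_2 + \boldsymbol{v}_2$, or $\boldsymbol{x} = \boldsymbol{u}_1 - \boldsymbol{u}_2 = \boldsymbol{v}_2 - \boldsymbol{v}_1$. Since $\boldsymbol{x} \in \mathcal{U}$ and $\boldsymbol{x} \in \mathcal{V}$ it results that $\boldsymbol{x} = \boldsymbol{0}$, and $\boldsymbol{u}_1 = \boldsymbol{u}_2$, $\boldsymbol{v}_1 = \boldsymbol{v}_2$, i.e., the decomposition is unique.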

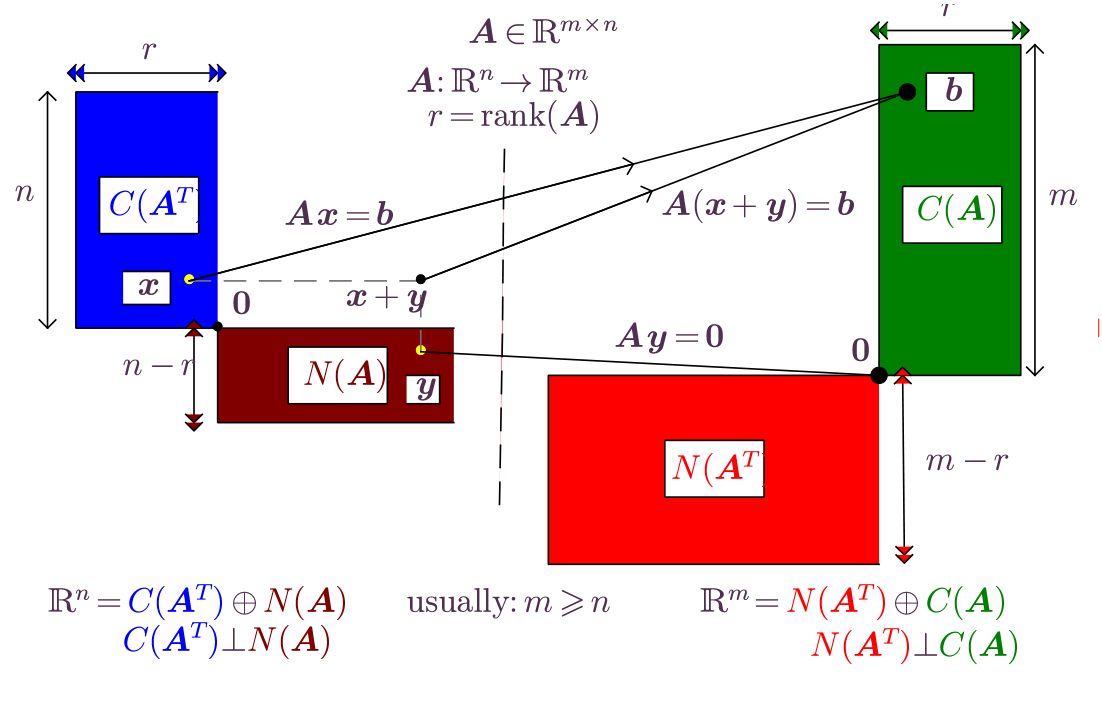

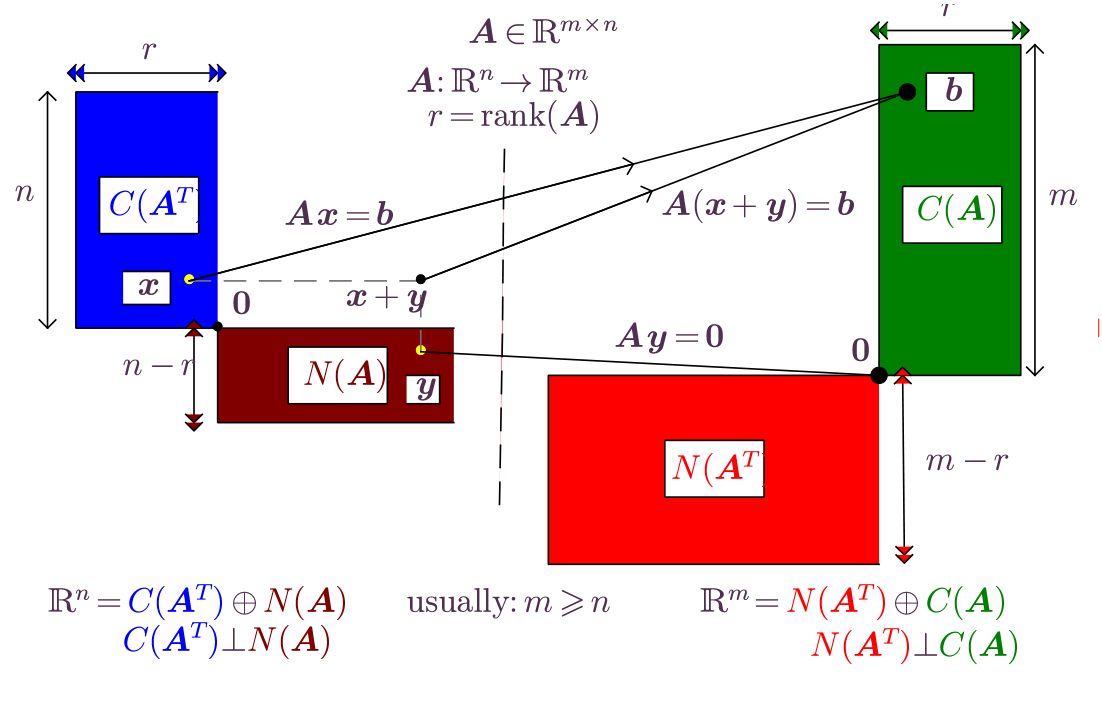

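Theorem. Given the linear mapping associated with matrix $\boldsymbol{A} \in \mathbb{R}^{m \times n}$ we have:

- $C(\boldsymbol{A}) \oplus N(\boldsymbol{A}^T) = \mathbb{R}^m$, the direct sum of the column space and left null space is the codomain of the mapping;

- $C(\boldsymbol{A}^T) \oplus N(\boldsymbol{A}) = \mathbb{R}^n$, the direct sum of the row space and null space is the domain of the mapping;

- $C(\boldsymbol{A}) \perp N(\boldsymbol{A}^T)$ and $C(\boldsymbol{A}) \cap N(\boldsymbol{A}^T) = \{\boldsymbol{0}\}$, the column space is orthogonal to the left null space, and they are orthogonal complements of one another;

- $C(\boldsymbol{A}^T) \perp N(\boldsymbol{A})$ and $C(\boldsymbol{A}^T) \cap N(\boldsymbol{A}) = \{\boldsymbol{0}\}$, the row space is orthogonal to the null space, and they are orthogonal complements of one another.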
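The orthogonality statements of the theorem can be checked on a small example. The sketch below uses a hand-picked rank-one matrix `A` and two left null space vectors found by hand; the matrix, the sample vectors, and the helper names `dot`, `matvec`, `transpose` are all illustrative assumptions, not part of the lecture.

```python
def dot(u, v):
    """Scalar product u^T v."""
    return sum(ui * vi for ui, vi in zip(u, v))

def matvec(M, x):
    """Matrix-vector product M x, with M stored as a list of rows."""
    return [dot(row, x) for row in M]

def transpose(M):
    return [list(col) for col in zip(*M)]

# Hypothetical example: A has m = 3 rows, n = 2 columns, and rank 1.
A = [[1, 2], [2, 4], [3, 6]]
At = transpose(A)

# Vectors in C(A) are images A x of arbitrary x.
column_space_samples = [matvec(A, x) for x in ([1, 0], [0, 1], [2, -3])]

# Two independent vectors of N(A^T), found by hand from v . (1, 2, 3) = 0.
left_null_vectors = [[2, -1, 0], [3, 0, -1]]

# Check that A^T v = 0, and that every sampled u in C(A) is orthogonal to every v.
left_null_ok = all(matvec(At, v) == [0, 0] for v in left_null_vectors)
orthogonal = all(dot(u, v) == 0
                 for u in column_space_samples
                 for v in left_null_vectors)
print(left_null_ok, orthogonal)
```

In this example the dimensions add up as the direct sum requires: one dimension for the column space plus two for the left null space gives $m = 3$.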

FTLA: a graphical representation

Figure 1. Graphical representation of the Fundamental Theorem of Linear Algebra, Gil Strang, Amer. Math. Monthly 100, 848-855, 1993.

FTLA proof highlights the properties of a norm, especially $\|\boldsymbol{x}\| = 0 \Rightarrow \boldsymbol{x} = \boldsymbol{0}$.

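- (a) $C(\boldsymbol{A}) \perp N(\boldsymbol{A}^T)$ (column space is orthogonal to left null space).

Proof. Consider arbitrary $\boldsymbol{u} \in C(\boldsymbol{A})$, $\boldsymbol{v} \in N(\boldsymbol{A}^T)$. By definition of $C(\boldsymbol{A})$, $\exists \boldsymbol{x} \in \mathbb{R}^n$ such that $\boldsymbol{u} = \boldsymbol{A}\boldsymbol{x}$, and by definition of $N(\boldsymbol{A}^T)$, $\boldsymbol{A}^T \boldsymbol{v} = \boldsymbol{0}$. Compute $\boldsymbol{u}^T \boldsymbol{v} = (\boldsymbol{A}\boldsymbol{x})^T \boldsymbol{v} = \boldsymbol{x}^T (\boldsymbol{A}^T \boldsymbol{v}) = \boldsymbol{x}^T \boldsymbol{0} = 0$, hence $\boldsymbol{u} \perp \boldsymbol{v}$ for arbitrary $\boldsymbol{u}$, $\boldsymbol{v}$, and $C(\boldsymbol{A}) \perp N(\boldsymbol{A}^T)$.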

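- (b) $C(\boldsymbol{A}) \cap N(\boldsymbol{A}^T) = \{\boldsymbol{0}\}$ ($\boldsymbol{0}$ is the only vector both in $C(\boldsymbol{A})$ and $N(\boldsymbol{A}^T)$).

Proof. (By contradiction, reductio ad absurdum). Assume there might be $\boldsymbol{b} \in C(\boldsymbol{A})$ and $\boldsymbol{b} \in N(\boldsymbol{A}^T)$ and $\boldsymbol{b} \neq \boldsymbol{0}$. Since $\boldsymbol{b} \in C(\boldsymbol{A})$, $\exists \boldsymbol{x} \in \mathbb{R}^n$ such that $\boldsymbol{b} = \boldsymbol{A}\boldsymbol{x}$. Since $\boldsymbol{b} \in N(\boldsymbol{A}^T)$, $\boldsymbol{A}^T \boldsymbol{b} = \boldsymbol{A}^T \boldsymbol{A} \boldsymbol{x} = \boldsymbol{0}$. Note that $\boldsymbol{x} \neq \boldsymbol{0}$ since $\boldsymbol{x} = \boldsymbol{0} \Rightarrow \boldsymbol{b} = \boldsymbol{0}$, contradicting assumptions. Multiply equality $\boldsymbol{A}^T \boldsymbol{A} \boldsymbol{x} = \boldsymbol{0}$ on left by $\boldsymbol{x}^T$ to find $\boldsymbol{x}^T \boldsymbol{A}^T \boldsymbol{A} \boldsymbol{x} = (\boldsymbol{A}\boldsymbol{x})^T (\boldsymbol{A}\boldsymbol{x}) = \boldsymbol{b}^T \boldsymbol{b} = \|\boldsymbol{b}\|^2 = 0$, thereby obtaining $\boldsymbol{b} = \boldsymbol{0}$, using norm property 3. Contradiction.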

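- (c) $C(\boldsymbol{A}) \oplus N(\boldsymbol{A}^T) = \mathbb{R}^m$. Parts (a), (b) established that $C(\boldsymbol{A})$, $N(\boldsymbol{A}^T)$ are orthogonal complements. By Lemma 2 it results that $C(\boldsymbol{A}) \oplus N(\boldsymbol{A}^T) = \mathbb{R}^m$.

- Second part of FTLA: as above for $\boldsymbol{A}^T$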

FTLA: useful concepts and results


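Definition. The rank of matrix $\boldsymbol{A} \in \mathbb{R}^{m \times n}$ is the dimension of the column space, $r = \operatorname{rank}(\boldsymbol{A}) = \dim C(\boldsymbol{A})$.

Definition. The nullity of $\boldsymbol{A} \in \mathbb{R}^{m \times n}$ is the dimension of the null space, $\operatorname{nullity}(\boldsymbol{A}) = \dim N(\boldsymbol{A})$.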

Proposition. The dimension of the column space equals the dimension of the row space.
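The proposition can be illustrated numerically by computing the rank of a matrix and of its transpose. The sketch below is one possible approach, assuming exact rational Gaussian elimination; the function name `rank` and the example matrix are illustrative. The nullity is obtained from the rank-nullity relation $\dim N(\boldsymbol{A}) = n - \operatorname{rank}(\boldsymbol{A})$, a standard result that is not stated above.

```python
from fractions import Fraction

def rank(M):
    """Rank of M (list of rows) by Gaussian elimination in exact arithmetic."""
    rows = [[Fraction(x) for x in row] for row in M]
    r = 0  # number of pivots found so far
    for c in range(len(rows[0])):
        # Look for a row at or below position r with a nonzero entry in column c.
        pivot = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if pivot is None:
            continue  # no pivot in this column
        rows[r], rows[pivot] = rows[pivot], rows[r]
        # Eliminate the entries below the pivot.
        for i in range(r + 1, len(rows)):
            factor = rows[i][c] / rows[r][c]
            rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
        if r == len(rows):
            break
    return r

# Hypothetical example: the second row is twice the first.
A = [[1, 2, 3], [2, 4, 6], [1, 0, 1]]
At = [list(col) for col in zip(*A)]

r = rank(A)                 # dim C(A)
r_transpose = rank(At)      # dim C(A^T), the row space dimension
nullity = len(A[0]) - r     # dim N(A), assuming the rank-nullity relation
print(r, r_transpose, nullity)
```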

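Corollary. The system $\boldsymbol{A}\boldsymbol{x} = \boldsymbol{b}$, $\boldsymbol{A} \in \mathbb{R}^{m \times n}$, $\boldsymbol{b} \in \mathbb{R}^m$, has a solution if $\boldsymbol{b} \in C(\boldsymbol{A})$. The solution is unique if $N(\boldsymbol{A}) = \{\boldsymbol{0}\}$.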

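Definition. The Kronecker delta symbol $\delta_{ij}$ is defined as

$$\delta_{ij} = \begin{cases} 1 & \text{if } i = j \\ 0 & \text{if } i \neq j \end{cases}$$

Definition. A set of vectors $\{\boldsymbol{u}_1, \ldots, \boldsymbol{u}_n\}$ is said to be orthonormal if $\boldsymbol{u}_i^T \boldsymbol{u}_j = \delta_{ij}$.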

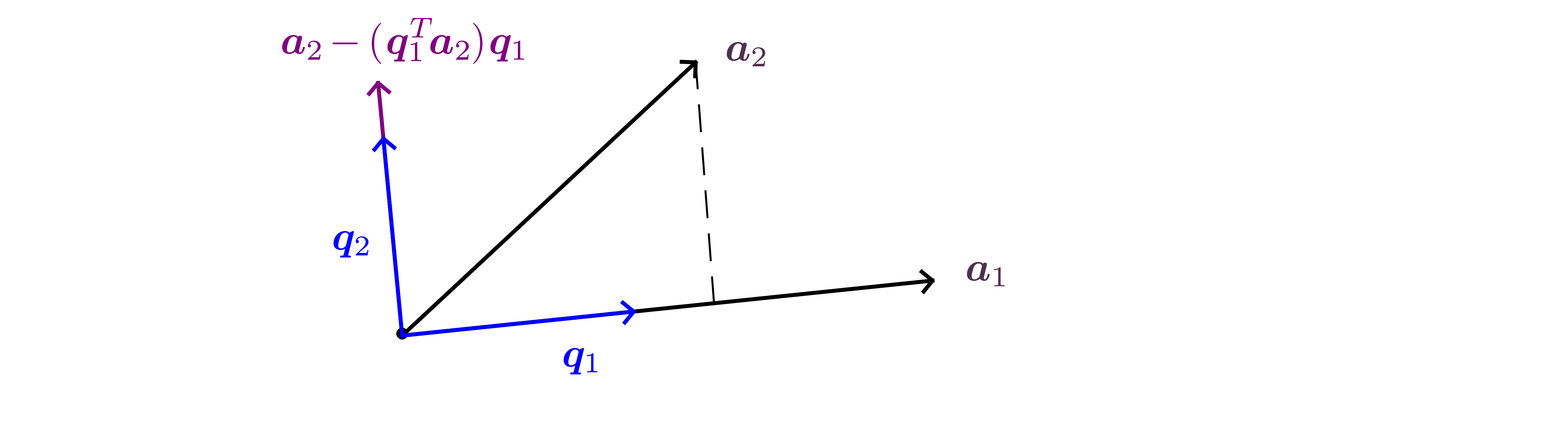

Matrix formulation of Gram-Schmidt ($QR$ factorization)

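- Consider $\boldsymbol{A} = [\boldsymbol{a}_1 \; \ldots \; \boldsymbol{a}_n] \in \mathbb{R}^{m \times n}$ with linearly independent columns. By linear combinations of the columns of $\boldsymbol{A}$ a set of orthonormal vectors $\boldsymbol{q}_1, \ldots, \boldsymbol{q}_n$ will be obtained. This can be expressed as a matrix product $\boldsymbol{A} = \boldsymbol{Q}\boldsymbol{R}$ with $\boldsymbol{Q} = [\boldsymbol{q}_1 \; \ldots \; \boldsymbol{q}_n] \in \mathbb{R}^{m \times n}$, $\boldsymbol{R} \in \mathbb{R}^{n \times n}$. The matrix $\boldsymbol{R}$ is upper-triangular (also referred to as right-triangular) since to find vector $\boldsymbol{q}_1$ only vector $\boldsymbol{a}_1$ is used, to find vector $\boldsymbol{q}_2$ only vectors $\boldsymbol{a}_1, \boldsymbol{a}_2$ are used, and so on.

- The above is equivalent to the system

$$\begin{aligned} \boldsymbol{a}_1 &= r_{11} \boldsymbol{q}_1 \\ \boldsymbol{a}_2 &= r_{12} \boldsymbol{q}_1 + r_{22} \boldsymbol{q}_2 \\ &\;\;\vdots \\ \boldsymbol{a}_n &= r_{1n} \boldsymbol{q}_1 + r_{2n} \boldsymbol{q}_2 + \cdots + r_{nn} \boldsymbol{q}_n \end{aligned}$$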

Matrix formulation of Gram-Schmidt ($QR$ factorization)

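Given $n$ vectors $\boldsymbol{a}_1, \ldots, \boldsymbol{a}_n$

Initialize $\boldsymbol{q}_1 = \boldsymbol{a}_1$, .., $\boldsymbol{q}_n = \boldsymbol{a}_n$, $\boldsymbol{R} = \boldsymbol{I}_n$

For $i = 1, \ldots, n$: $r_{ii} = (\boldsymbol{q}_i^T \boldsymbol{q}_i)^{1/2}$; $\boldsymbol{q}_i = \boldsymbol{q}_i / r_{ii}$; then for $j = i+1, \ldots, n$: $r_{ij} = \boldsymbol{q}_i^T \boldsymbol{q}_j$; $\boldsymbol{q}_j = \boldsymbol{q}_j - r_{ij} \boldsymbol{q}_i$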

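- For $\boldsymbol{A} \in \mathbb{R}^{m \times n}$ with linearly independent columns, the Gram-Schmidt algorithm furnishes a factorization $\boldsymbol{Q}\boldsymbol{R} = \boldsymbol{A}$ with $\boldsymbol{Q} \in \mathbb{R}^{m \times n}$ with orthonormal columns and $\boldsymbol{R} \in \mathbb{R}^{n \times n}$ an upper triangular matrix.

- Since the column vectors within $\boldsymbol{Q}$ were obtained through linear combinations of the column vectors of $\boldsymbol{A}$ we have $C(\boldsymbol{A}) = C(\boldsymbol{Q})$.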
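The Gram-Schmidt procedure described above can be sketched in a few lines. This is a minimal illustration, assuming the modified Gram-Schmidt variant (each remaining vector is updated as soon as a new orthonormal direction is found); the function name `gram_schmidt_qr`, the tolerance `eps`, and the example vectors are assumptions made for the example.

```python
import math

def gram_schmidt_qr(columns, eps=1e-12):
    """Modified Gram-Schmidt on a list of column vectors a_1, ..., a_n.

    Returns (Q, R): Q is a list of orthonormal columns q_1, ..., q_n,
    R is an n x n upper-triangular matrix stored as a list of rows.
    """
    n = len(columns)
    Q = [[float(x) for x in a] for a in columns]   # q_j starts as a_j
    R = [[0.0] * n for _ in range(n)]
    for i in range(n):
        R[i][i] = math.sqrt(sum(x * x for x in Q[i]))
        if R[i][i] < eps:
            raise ValueError("columns are (numerically) linearly dependent")
        Q[i] = [x / R[i][i] for x in Q[i]]          # normalize q_i
        for j in range(i + 1, n):
            # Remove the component of q_j along q_i.
            R[i][j] = sum(x * y for x, y in zip(Q[i], Q[j]))
            Q[j] = [y - R[i][j] * x for x, y in zip(Q[i], Q[j])]
    return Q, R

# Hypothetical example with two linearly independent vectors in R^3.
a1, a2 = [3, 4, 0], [1, 0, 1]
Q, R = gram_schmidt_qr([a1, a2])

# Rebuild a_2 = r_12 q_1 + r_22 q_2 to confirm that Q R reproduces A.
rebuilt_a2 = [R[0][1] * Q[0][k] + R[1][1] * Q[1][k] for k in range(3)]
print(Q, R, rebuilt_a2)
```

The entries below the diagonal of `R` are never written, which reflects the upper-triangular structure: finding $\boldsymbol{q}_i$ uses only $\boldsymbol{a}_1, \ldots, \boldsymbol{a}_i$.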

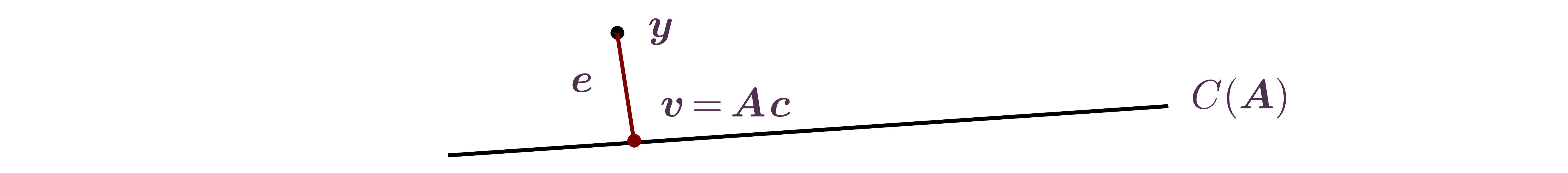

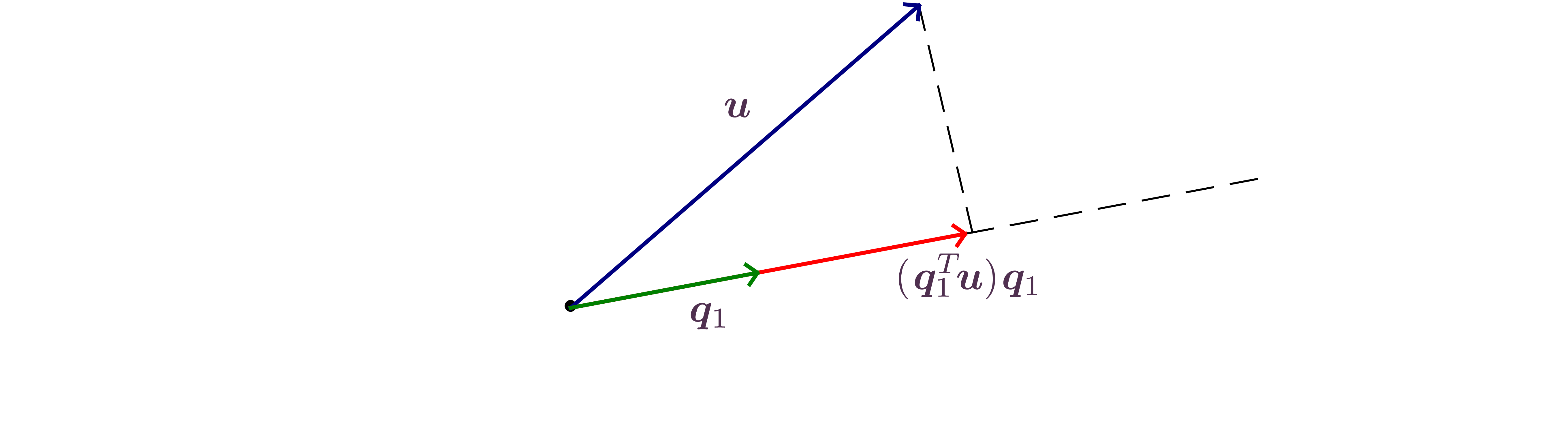

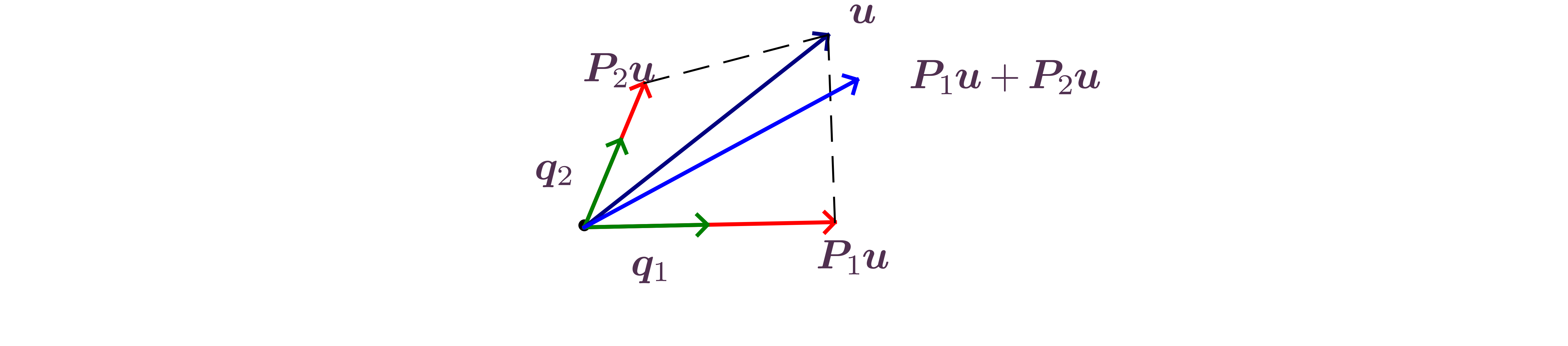

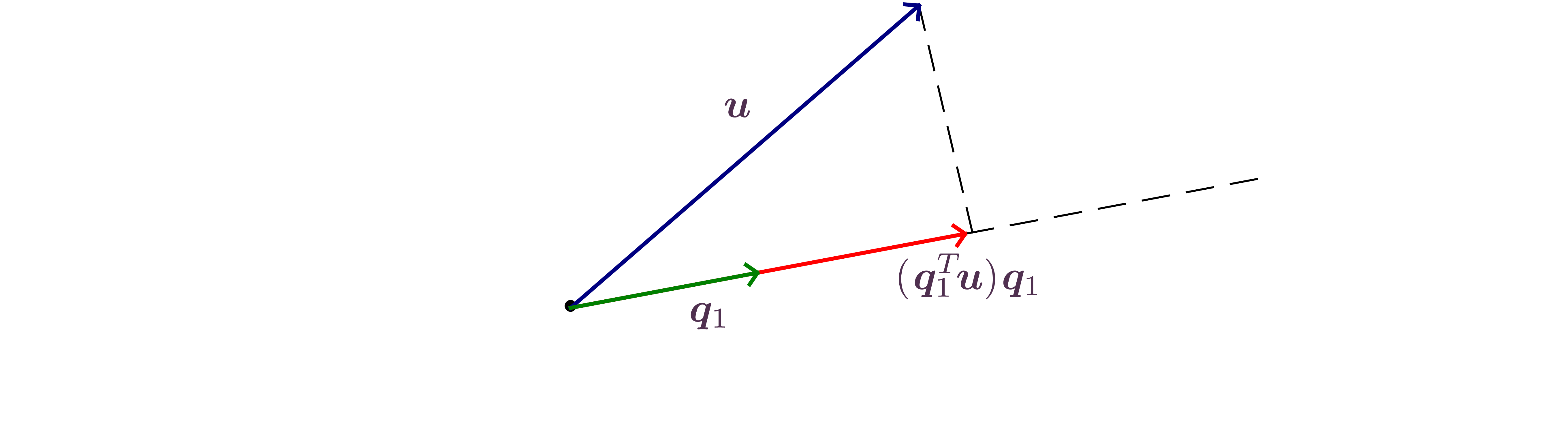

Orthogonal projection of a vector along another vector


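Definition. The orthogonal projection of $\boldsymbol{v} \in \mathbb{R}^m$ along direction $\boldsymbol{u} \in \mathbb{R}^m$, $\|\boldsymbol{u}\| = 1$, is the vector $(\boldsymbol{u}^T \boldsymbol{v}) \boldsymbol{u}$.

- Scalar-vector multiplication commutativity: $(\boldsymbol{u}^T \boldsymbol{v}) \boldsymbol{u} = \boldsymbol{u} (\boldsymbol{u}^T \boldsymbol{v})$

- Matrix multiplication associativity: $\boldsymbol{u} (\boldsymbol{u}^T \boldsymbol{v}) = (\boldsymbol{u} \boldsymbol{u}^T) \boldsymbol{v} = \boldsymbol{P}_{\boldsymbol{u}} \boldsymbol{v}$, with $\boldsymbol{P}_{\boldsymbol{u}} = \boldsymbol{u} \boldsymbol{u}^T$

Definition. The matrix $\boldsymbol{P}_{\boldsymbol{u}} = \boldsymbol{u} \boldsymbol{u}^T$ is the orthogonal projector along direction $\boldsymbol{u}$, $\|\boldsymbol{u}\| = 1$.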
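The two ways of computing the projection, scaling the direction by a scalar product or applying the projector matrix, can be compared on a small example. The sketch below assumes a unit vector `u` and an arbitrary vector `v` chosen for illustration; the helper names `projector` and `matvec` are hypothetical.

```python
def projector(u):
    """Return the matrix u u^T (list of rows) for a unit-norm vector u."""
    return [[ui * uj for uj in u] for ui in u]

def matvec(M, x):
    """Matrix-vector product M x."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]

u = [3 / 5, 4 / 5]   # unit norm: (3/5)^2 + (4/5)^2 = 1
v = [2.0, 1.0]

P = projector(u)
w_matrix = matvec(P, v)                        # (u u^T) v
coeff = sum(ui * vi for ui, vi in zip(u, v))   # scalar u^T v
w_scalar = [coeff * ui for ui in u]            # (u^T v) u

# Projecting a second time should leave the projection unchanged.
w_twice = matvec(P, w_matrix)
print(w_matrix, w_scalar, w_twice)
```

Both routes give the same vector up to rounding, as the associativity argument predicts, and applying `P` twice changes nothing.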

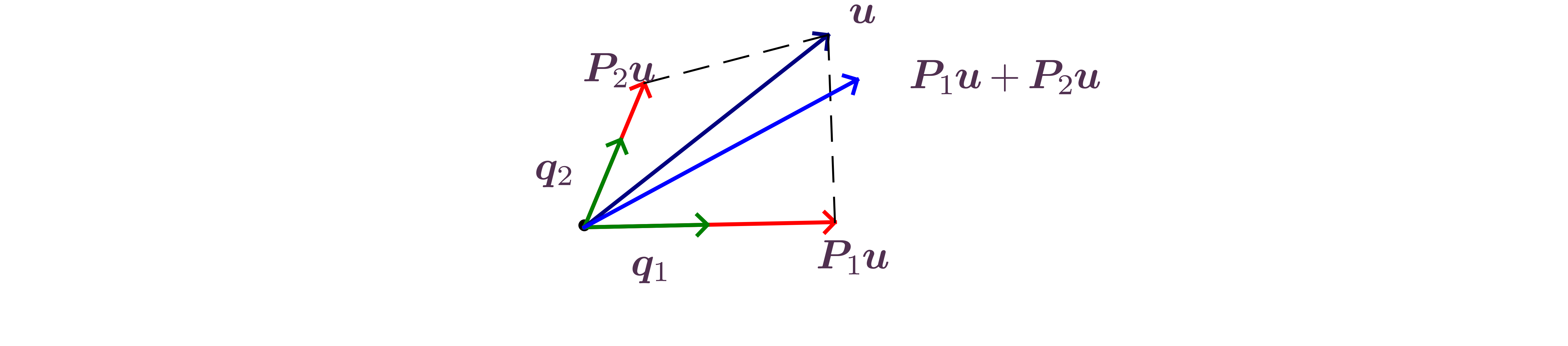

Orthogonal projection onto a subspace


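Definition. The orthogonal projector onto $C(\boldsymbol{Q})$, $\boldsymbol{Q} \in \mathbb{R}^{m \times n}$ with orthonormal column vectors, is $\boldsymbol{P}_{\boldsymbol{Q}} = \boldsymbol{Q} \boldsymbol{Q}^T$.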

Complementary orthogonal projector


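Definition. The complementary orthogonal projector to $\boldsymbol{P}_{\boldsymbol{Q}} = \boldsymbol{Q} \boldsymbol{Q}^T$ is $\boldsymbol{I} - \boldsymbol{P}_{\boldsymbol{Q}}$, where $\boldsymbol{Q}$ is a matrix with orthonormal columns.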
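A projector onto a subspace and its complementary projector split a vector into two orthogonal pieces. The sketch below illustrates this for a hand-picked pair of orthonormal vectors; `project_onto`, `Q_cols`, and `b` are illustrative names and values, and the matrix $\boldsymbol{Q}\boldsymbol{Q}^T$ is applied column by column rather than formed explicitly.

```python
def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))

def project_onto(Q_cols, b):
    """Apply Q Q^T to b, with Q given as a list of orthonormal columns."""
    p = [0.0] * len(b)
    for q in Q_cols:
        coeff = dot(q, b)                            # q^T b
        p = [pi + coeff * qi for pi, qi in zip(p, q)]
    return p

# Hypothetical orthonormal columns spanning a plane in R^3.
Q_cols = [[0.6, 0.8, 0.0], [0.0, 0.0, 1.0]]
b = [1.0, 2.0, 3.0]

p = project_onto(Q_cols, b)               # Q Q^T b, the part of b in C(Q)
c = [bi - pi for bi, pi in zip(b, p)]     # (I - Q Q^T) b, the remainder

# The remainder should be orthogonal to every column of Q and to p itself.
print(p, c, dot(p, c), [dot(q, c) for q in Q_cols])
```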

Orthogonal projectors and linear systems


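- Consider the linear system $\boldsymbol{A}\boldsymbol{x} = \boldsymbol{b}$ with $\boldsymbol{A} \in \mathbb{R}^{m \times n}$, $\boldsymbol{x} \in \mathbb{R}^n$, $\boldsymbol{b} \in \mathbb{R}^m$. Orthogonal projectors and knowledge of the four fundamental matrix subspaces allow us to succinctly express whether there exist no solutions, a single solution, or an infinite number of solutions: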

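- Consider the factorization $\boldsymbol{Q}\boldsymbol{R} = \boldsymbol{A}$, the orthogonal projector $\boldsymbol{P}_{\boldsymbol{Q}} = \boldsymbol{Q}\boldsymbol{Q}^T$, and the complementary orthogonal projector $\boldsymbol{I} - \boldsymbol{P}_{\boldsymbol{Q}}$

- If $\|(\boldsymbol{I} - \boldsymbol{P}_{\boldsymbol{Q}}) \boldsymbol{b}\| \neq 0$, then $\boldsymbol{b}$ has a component outside the column space of $\boldsymbol{A}$, and $\boldsymbol{A}\boldsymbol{x} = \boldsymbol{b}$ has no solution

- If $\|(\boldsymbol{I} - \boldsymbol{P}_{\boldsymbol{Q}}) \boldsymbol{b}\| = 0$, then $\boldsymbol{b} \in C(\boldsymbol{Q}) = C(\boldsymbol{A})$ and the system has at least one solution

- If $N(\boldsymbol{A}) = \{\boldsymbol{0}\}$ (null space only contains the zero vector, i.e., null space of dimension 0) the system has a unique solution

- If $\dim N(\boldsymbol{A}) = n - r > 0$, with $r = \operatorname{rank}(\boldsymbol{A})$, then a vector $\boldsymbol{y} \in N(\boldsymbol{A})$ in the null space is written as $\boldsymbol{y} = c_1 \boldsymbol{z}_1 + \cdots + c_{n-r} \boldsymbol{z}_{n-r}$, where $\boldsymbol{z}_1, \ldots, \boldsymbol{z}_{n-r}$ is a basis of $N(\boldsymbol{A})$, and if $\boldsymbol{x}$ is a solution of $\boldsymbol{A}\boldsymbol{x} = \boldsymbol{b}$, so is $\boldsymbol{x} + \boldsymbol{y}$, since $\boldsymbol{A}(\boldsymbol{x} + \boldsymbol{y}) = \boldsymbol{A}\boldsymbol{x} + c_1 \boldsymbol{A}\boldsymbol{z}_1 + \cdots + c_{n-r} \boldsymbol{A}\boldsymbol{z}_{n-r} = \boldsymbol{b} + \boldsymbol{0} = \boldsymbol{b}$. The linear system has an $(n-r)$-parameter family of solutions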
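The existence test above, checking whether the complementary projector annihilates the right-hand side, can be sketched as follows. The code assumes linearly independent columns, obtains an orthonormal basis by Gram-Schmidt, and uses a tolerance `tol` in place of an exact comparison with zero; the function names, the tolerance, and the example data are assumptions made for illustration.

```python
import math

def orthonormal_basis(columns):
    """Gram-Schmidt: orthonormal vectors spanning the same space as `columns`
    (the columns are assumed to be linearly independent)."""
    basis = []
    for a in columns:
        q = [float(x) for x in a]
        for e in basis:
            coeff = sum(x * y for x, y in zip(e, q))
            q = [y - coeff * x for x, y in zip(e, q)]
        norm = math.sqrt(sum(x * x for x in q))
        basis.append([x / norm for x in q])
    return basis

def residual_norm(columns, b):
    """Norm of (I - Q Q^T) b, where the columns of Q come from Gram-Schmidt."""
    r = [float(x) for x in b]
    for q in orthonormal_basis(columns):
        coeff = sum(x * y for x, y in zip(q, r))
        r = [y - coeff * x for x, y in zip(q, r)]   # remove the component along q
    return math.sqrt(sum(x * x for x in r))

def has_solution(columns, b, tol=1e-10):
    """True when b lies in the column space, up to the tolerance tol."""
    return residual_norm(columns, b) < tol

# Hypothetical 3 x 2 system with columns a_1 = (1,1,1), a_2 = (0,1,2).
cols = [[1, 1, 1], [0, 1, 2]]
b_inside = [1, 2, 3]    # equals a_1 + a_2, so it lies in C(A)
b_outside = [1, 0, 0]   # has a component outside C(A)
print(has_solution(cols, b_inside), has_solution(cols, b_outside))
```

In floating-point arithmetic the residual of a consistent system is rarely exactly zero, which is why the comparison uses a small tolerance.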

Least squares: linear regression calculus approach


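- In many scientific fields the problem of determining the straight line $y(x) = c_0 + c_1 x$ that best approximates data $\mathcal{D} = \{(x_i, y_i), i = 1, \ldots, m\}$ arises. The problem is to find the coefficients $c_0, c_1$, and this is referred to as the linear regression problem.

- The calculus approach: Form the sum of squared differences between $y(x_i)$ and $y_i$, $S(c_0, c_1) = \sum_{i=1}^{m} (y(x_i) - y_i)^2$, and seek $(c_0, c_1)$ that minimize $S$ by solving the equations $\partial S / \partial c_0 = 0$, $\partial S / \partial c_1 = 0$.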
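Carrying out the two differentiations makes the calculus approach concrete. The derivation below is a sketch under assumed notation: the line is written $y(x) = c_0 + c_1 x$, the data are $(x_i, y_i)$ for $i = 1, \ldots, m$, and $S$ denotes the sum of squared differences.

```latex
\begin{aligned}
S(c_0, c_1) &= \sum_{i=1}^{m} (c_0 + c_1 x_i - y_i)^2 \\
\frac{\partial S}{\partial c_0} &= 2 \sum_{i=1}^{m} (c_0 + c_1 x_i - y_i) = 0
  \;\Rightarrow\; m\, c_0 + \Big(\sum_{i=1}^{m} x_i\Big) c_1 = \sum_{i=1}^{m} y_i \\
\frac{\partial S}{\partial c_1} &= 2 \sum_{i=1}^{m} x_i (c_0 + c_1 x_i - y_i) = 0
  \;\Rightarrow\; \Big(\sum_{i=1}^{m} x_i\Big) c_0 + \Big(\sum_{i=1}^{m} x_i^2\Big) c_1 = \sum_{i=1}^{m} x_i y_i
\end{aligned}
```

The result is a system of two linear equations in the two unknowns $c_0, c_1$, which has a unique solution whenever the $x_i$ are not all equal.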

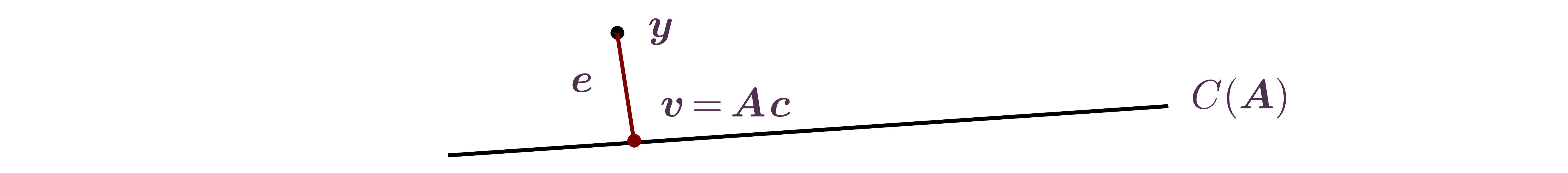

Geometry of linear regression


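- Form a vector of errors with components $e_i = y(x_i) - y_i$. Recognize that $y(x_i)$ is a linear combination of $1$ and $x_i$ with coefficients $c_0, c_1$, or in vector form $\boldsymbol{e} = [\boldsymbol{1} \;\; \boldsymbol{x}] \begin{bmatrix} c_0 \\ c_1 \end{bmatrix} - \boldsymbol{y} = \boldsymbol{A}\boldsymbol{c} - \boldsymbol{y}$

- The norm of the error vector $\|\boldsymbol{e}\|$ is smallest when $\boldsymbol{A}\boldsymbol{c}$ is as close as possible to $\boldsymbol{y}$. Since $\boldsymbol{A}\boldsymbol{c}$ is within the column space of $\boldsymbol{A}$, $\boldsymbol{A}\boldsymbol{c} \in C(\boldsymbol{A})$, the required condition is for $\boldsymbol{e}$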

to be orthogonal to the column space
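The orthogonality condition can be verified numerically. The sketch below assumes the error vector is $\boldsymbol{e} = \boldsymbol{A}\boldsymbol{c} - \boldsymbol{y}$ with $\boldsymbol{A} = [\boldsymbol{1} \;\; \boldsymbol{x}]$, so that orthogonality to the column space reads $\boldsymbol{A}^T \boldsymbol{e} = \boldsymbol{0}$, equivalently $\boldsymbol{A}^T \boldsymbol{A} \boldsymbol{c} = \boldsymbol{A}^T \boldsymbol{y}$; the function name `fit_line` and the data values are illustrative.

```python
def fit_line(xs, ys):
    """Solve the 2 x 2 system A^T A c = A^T y for A = [1 x] by Cramer's rule."""
    m = len(xs)
    sx, sy = sum(xs), sum(ys)
    sxx = sum(x * x for x in xs)
    sxy = sum(x * y for x, y in zip(xs, ys))
    det = m * sxx - sx * sx          # nonzero when the x_i are not all equal
    c1 = (m * sxy - sx * sy) / det
    c0 = (sy - c1 * sx) / m
    return c0, c1

# Hypothetical data that do not lie exactly on a line.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 2.0, 2.0, 4.0]

c0, c1 = fit_line(xs, ys)
errors = [c0 + c1 * x - y for x, y in zip(xs, ys)]   # e = A c - y

# Orthogonality to the two columns of A: 1^T e and x^T e should both vanish.
ones_dot_e = sum(errors)
x_dot_e = sum(x * e for x, e in zip(xs, errors))
print(c0, c1, ones_dot_e, x_dot_e)
```

Both scalar products vanish up to rounding, so the error vector has no component along either column of $\boldsymbol{A}$.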