MATH347DS L06: Applying the FTLA (LU)

|

Overview

-

Least squares as an application of projection and factorization

-

Change of coordinates (linear systems), factorization

MATH347DS L06: Applying the FTLA (LU)

|

Least squares as an application of projection and factorization

Change of coordinates (linear systems), factorization

Least squares method and solution by projection

|

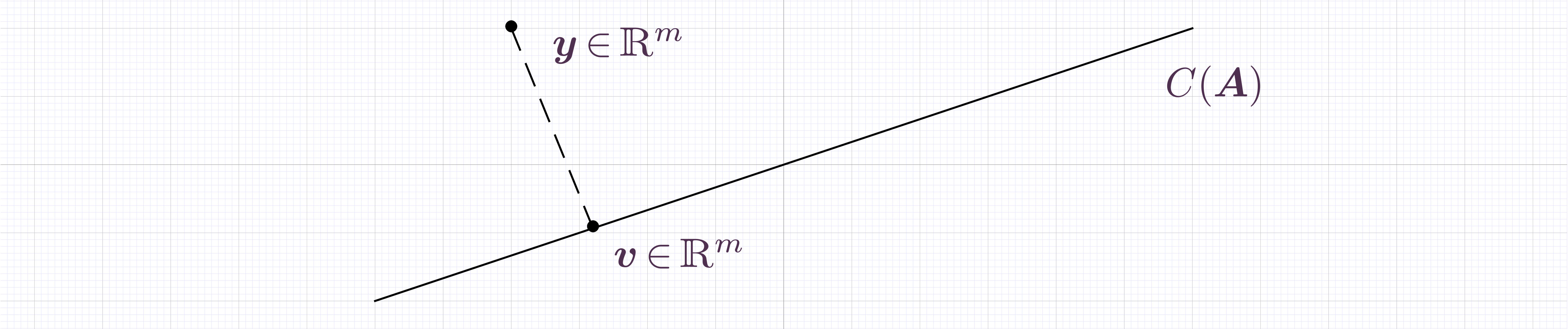

Mathematical statement: solve the minimization problem

Approach: project onto the column space of :

Find an orthonormal basis for column space of by factorization,

State that is the projection of ,

State that is within the column space of ,

Set equal the two expressions of ,

Solve the triangular system to find (in Octave: c=R\(Q'y))

Linear regression coding

|

Generate some data on a line and perturb it by some random quantities

octave> |

m=1000; x=(0:m-1)/m; c0=2; c1=3; yex=c0+c1*x; y=(yex+rand(1,m)-0.5)'; |

Form the matrices, , (qr(A,0) is the Octave gs(A))

octave> |

A=ones(m,2); A(:,2)=x(:); [Q R]=qr(A,0); |

Solve the system

octave> |

c=R\(transpose(Q)*y); |

Form the linear combination closest to

octave> |

v=A*c; |

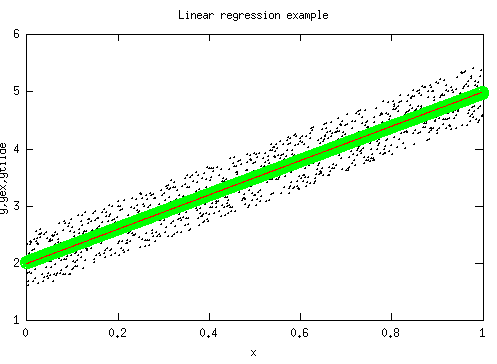

Linear regression example

|

Plot the perturbed data (black dots), the result of the linear regression (green circles), as well as the line used to generate yex (red line)

octave> |

plot(x,y,'.k',x,v,'og',x,yex,'r'); title('Linear regression example'); xlabel('x'); ylabel('y,v,yex'); cd /home/student; print data.eps; |

Quadratic regression

|

The key observation is that the matrix has columns obtained by evaluating the functions at the values . This leads to easy extension to data fitting to higher degree polynomials, for instance a quadratic

octave> |

m=1000; x=(0:m-1)/m; c0=2; c1=3; c2=-5.; yex=c0+c1*x+c2*x.^2; y=(yex+rand(1,m)-0.5)'; |

octave> |

A=ones(m,3); A(:,2)=x(:); A(:,3)=x.^2; [Q R]=qr(A,0) |

octave> |

c=R\(transpose(Q)*y); |

octave> |

v=A*c; disp(c'); |

2.0239 2.8873 -4.8881

octave> |

disp(norm(y-v)/norm(y)/m); |

1.4494e-04

octave> |

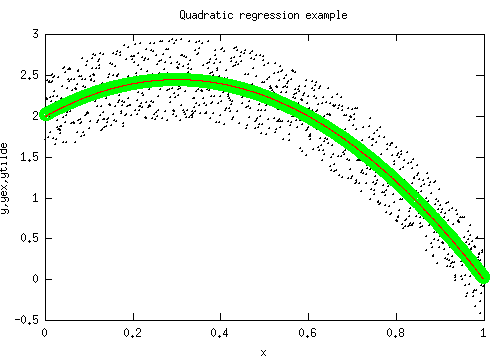

Quadratic regression example

|

Plot the perturbed data (black dots), the result of the quadratic regression (green circles), as well as the parabola used to generate yex (red line)

octave> |

plot(x,y,'.k',x,ytilde,'og',x,yex,'r'); title('Quadratic regression example'); xlabel('x'); ylabel('y,yex,ytilde'); cd /home/student; print data.eps; |

octave> |

The

case: polynomial interpolation

|

Definition. The polynomial interpolant of data with if is a polynomial of degree

that satisfies the conditions , .

We can apply the same approach. In this particular case, the error can be made zero.

octave> |

m=4; x=(0:m-1)'; c0=2; c1=3; c2=-5.; c3=-1; y=c0+c1*x+c2*x.^2+c3*x.^3; |

octave> |

A=ones(m,m); A(:,2)=x(:); A(:,3)=x.^2; A(:,4)=x.^3; [Q R]=qr(A,0); |

octave> |

c=R\(transpose(Q)*y); disp(c'); |

2.00000 3.00000 -5.00000 -1.00000

Note that the coefficients used to generate the data are recovered exactly.

Gaussian elimination

|

Recall the basic operation in row echelon reduction: constructing a linear combination of rows to form zeros beneath the main diagonal, e.g.

This can be stated as a matrix multiplication operation, with

Gauss multiplier

|

Definition. The matrix

with , and the matrix obtained after step of row echelon reduction (or, equivalently, Gaussian elimination) is called a Gaussian multiplier matrix.

Matrix formulation of Gaussian elimination

|

For nonsingular, the successive steps in row echelon reduction (or Gaussian elimination) correspond to successive multiplications on the left by Gaussian multiplier matrices

The inverse of a Gaussian multiplier is

factorization

|

From obtain

Due to the simple form of the matrix is easily obtained as

Solving a linear system by

factorization

|

Using the concept of an factorization, finding the solution to a linear system can be formulated as the following steps:

Find the factorization,

Replace factorization into system and regroup . Solve the lower triangular system to find

Solve the upper triangular system to find