
A partition of a set is a collection of subsets such that any given element belongs to only one set in the partition. The definition is modified when applied to subspaces of a vector space: a partition of a set of vectors is understood as a collection of subsets such that any vector except $0$ belongs to only one member of the partition.
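For finite sets the definition can be checked mechanically. The Python sketch below uses a hypothetical helper `is_partition` (not from the text) and follows the definition literally: every element of the set must lie in exactly one subset, and no subset may contain anything outside the set (empty subsets are not excluded here).

```python
def is_partition(universe, blocks):
    """True if every element of `universe` lies in exactly one of `blocks`
    and the blocks contain nothing outside `universe`."""
    counts = {x: 0 for x in universe}
    for block in blocks:
        for x in block:
            if x not in counts:
                return False  # element outside the set being partitioned
            counts[x] += 1
    # Each element must be covered exactly once.
    return all(c == 1 for c in counts.values())

assert is_partition({1, 2, 3, 4}, [{1, 2}, {3}, {4}])
assert not is_partition({1, 2, 3, 4}, [{1, 2}, {2, 3}, {4}])  # 2 belongs to two subsets
assert not is_partition({1, 2, 3, 4}, [{1, 2}, {3}])          # 4 belongs to none
```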

Linear mappings between vector spaces $f: U \to V$ can be represented by matrices $A$ whose columns are the images of the vectors of a basis $\{u_1, u_2, \dots\}$ of $U$, $A = [\, f(u_1)\ \ f(u_2)\ \ \cdots \,]$.

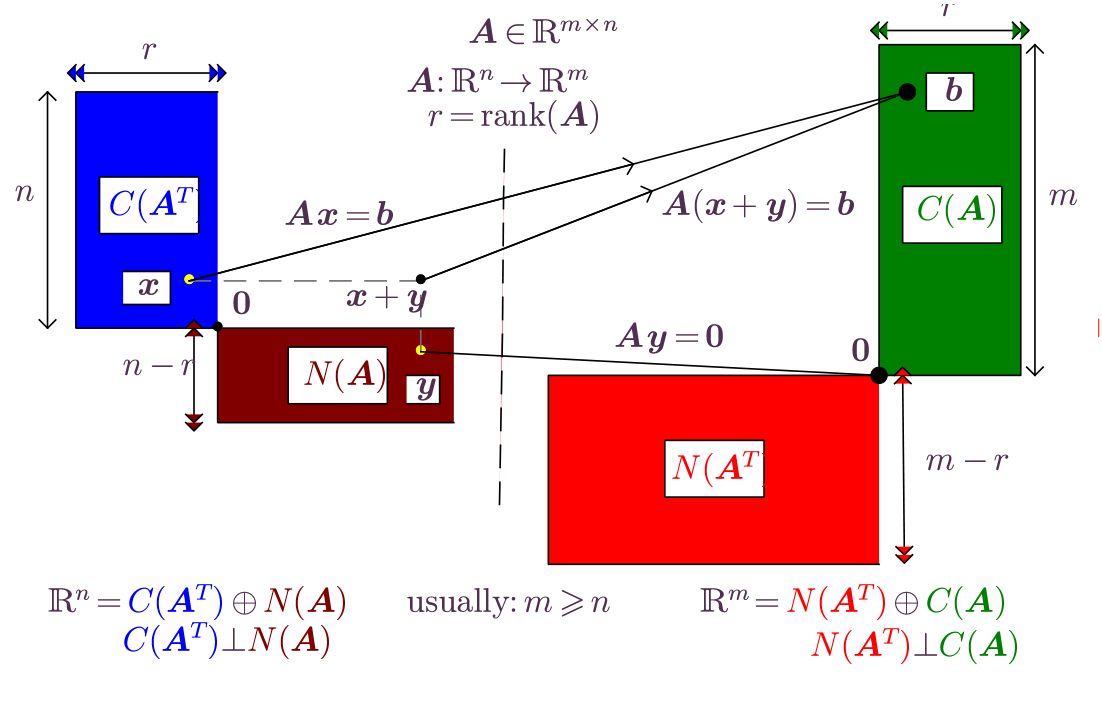

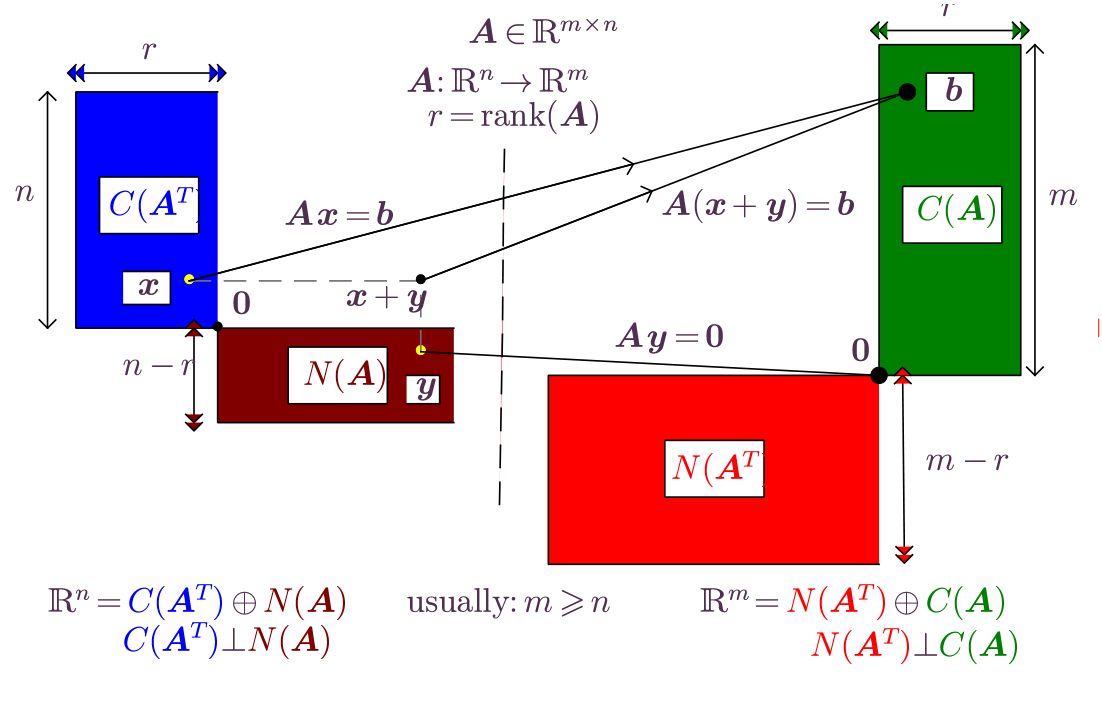

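Consider the case of real finite-dimensional domain and codomain, $f: \mathbb{R}^n \to \mathbb{R}^m$, in which case $A \in \mathbb{R}^{m \times n}$,
$$A = [\, f(e_1)\ \ f(e_2)\ \ \cdots\ \ f(e_n) \,] = [\, a_1\ \ a_2\ \ \cdots\ \ a_n \,].$$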
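A minimal Python sketch of this construction, assuming a hypothetical linear map $f(x) = (x_1 + 2x_2,\ 3x_3 - x_1)$ from $\mathbb{R}^3$ to $\mathbb{R}^2$ chosen only for illustration: the $j$-th column of $A$ is $f(e_j)$, after which $Ax$ reproduces $f(x)$ for any $x$.

```python
# Hypothetical linear map f: R^3 -> R^2 (illustration only).
def f(x):
    return [x[0] + 2 * x[1], 3 * x[2] - x[0]]

def matrix_of(f, n):
    """Return the m x n matrix whose j-th column is f(e_j)."""
    columns = []
    for j in range(n):
        e_j = [1 if i == j else 0 for i in range(n)]  # j-th standard basis vector
        columns.append(f(e_j))
    m = len(columns[0])
    # Turn the list of columns into a list of rows.
    return [[columns[j][i] for j in range(n)] for i in range(m)]

def matvec(A, x):
    """Matrix-vector product A x for a matrix stored as a list of rows."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

A = matrix_of(f, 3)
print(A)  # [[1, 2, 0], [-1, 0, 3]]
x = [2, -1, 5]
assert matvec(A, x) == f(x)  # A x agrees with f(x)
```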

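The column space of $A$ is a vector subspace of the codomain, $C(A) \le \mathbb{R}^m$, but according to the definition of dimension, if $n < m$ there remain non-zero vectors within the codomain that are outside the range of $A$,
$$n < m \;\Rightarrow\; \exists\, v \in \mathbb{R}^m,\ v \neq 0,\ v \notin C(A).$$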

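All of the non-zero vectors in $N(A^T)$, namely the set of vectors orthogonal to all columns of $A$, fall into this category. The above considerations can be stated as
$$C(A) \le \mathbb{R}^m, \quad N(A^T) \le \mathbb{R}^m, \quad C(A) \perp N(A^T), \quad C(A) + N(A^T) \le \mathbb{R}^m.$$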
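For $m = 3$, $n = 2$ a non-zero vector outside $C(A)$ can be produced as the cross product of the two columns: it is orthogonal to both, hence lies in $N(A^T)$. The columns below are a hypothetical example, and the cross-product shortcut is specific to $\mathbb{R}^3$.

```python
def cross(a, b):
    """Cross product of two vectors in R^3; the result is orthogonal to both."""
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

# Hypothetical A in R^{3x2}, given by its columns a1, a2 (n = 2 < m = 3).
a1, a2 = [1, 0, 2], [0, 1, 1]

v = cross(a1, a2)
# The rows of A^T are the columns of A, so A^T v = (a1 . v, a2 . v).
AT_v = [dot(a1, v), dot(a2, v)]
print(v, AT_v)  # [-2, -1, 1] [0, 0]

# v is non-zero and orthogonal to every column, so v is in N(A^T).
# It cannot also lie in C(A): it would then be orthogonal to itself, v . v = 0.
assert any(v) and AT_v == [0, 0] and dot(v, v) != 0
```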

The question that arises is whether there remain any non-zero vectors in the codomain that cannot be written as the sum of a vector in $C(A)$ and a vector in $N(A^T)$. The fundamental theorem of linear algebra states that there are no such vectors: $C(A)$ is the orthogonal complement of $N(A^T)$, and their direct sum covers the entire codomain, $C(A) \oplus N(A^T) = \mathbb{R}^m$.

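Lemma 1. Let $U, V$ be subspaces of a vector space $W$, and recall that $W = U \oplus V$ means every $w \in W$ can be written uniquely as $w = u + v$ with $u \in U$, $v \in V$. Then $W = U \oplus V$ if and only if

(i) $W = U + V$, and

(ii) $U \cap V = \{0\}$.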

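Proof. $W = U \oplus V \Rightarrow W = U + V$ by the definitions of the direct sum and of the sum of vector subspaces. To prove that $W = U \oplus V \Rightarrow U \cap V = \{0\}$, consider $w \in U \cap V$. Since $w \in U$ and $w \in V$, write
$$w = \underbrace{w}_{\in U} + \underbrace{0}_{\in V} = \underbrace{0}_{\in U} + \underbrace{w}_{\in V},$$
and since the expression $w = u + v$ is unique, it results that $w = 0$. Now assume (i), (ii) and establish a unique decomposition. By (i), every $w \in W$ has at least one decomposition. Assume there might be two decompositions of $w \in W$, $w = u_1 + v_1$, $w = u_2 + v_2$, with $u_1, u_2 \in U$, $v_1, v_2 \in V$. Obtain $u_1 + v_1 = u_2 + v_2$, or $x = u_1 - u_2 = v_2 - v_1$. Since $x \in U$ and $x \in V$, (ii) gives $x = 0$, hence $u_1 = u_2$, $v_1 = v_2$, i.e., the decomposition is unique.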
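The role of condition (ii) can be seen on a small example. In the hypothetical setting below, $U = \operatorname{span}\{e_1, e_2\}$ and $V = \operatorname{span}\{e_2, e_3\}$ in $\mathbb{R}^3$, so $U \cap V = \operatorname{span}\{e_2\} \neq \{0\}$. The Python sketch exhibits two different decompositions of the same $w$ and checks that their difference $x = u_1 - u_2 = v_2 - v_1$ is a non-zero vector in $U \cap V$, exactly the quantity the uniqueness argument forces to vanish when (ii) holds.

```python
def add(a, b):
    return tuple(x + y for x, y in zip(a, b))

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

# Hypothetical subspaces of R^3: U = {z : z3 = 0}, V = {z : z1 = 0}.
def in_U(z):
    return z[2] == 0

def in_V(z):
    return z[0] == 0

w = (1, 1, 1)
u1, v1 = (1, 1, 0), (0, 0, 1)   # first decomposition: u1 in U, v1 in V
u2, v2 = (1, 0, 0), (0, 1, 1)   # second decomposition: u2 in U, v2 in V
assert add(u1, v1) == w and add(u2, v2) == w

x = sub(u1, u2)                 # x = u1 - u2
assert x == sub(v2, v1)         #   = v2 - v1
print(x)  # (0, 1, 0)
# x is a non-zero vector in U ∩ V, so the decomposition of w is not unique.
assert in_U(x) and in_V(x) and x != (0, 0, 0)
```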

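In the vector space $W = U + V$, the subspaces $U, V$ are said to be orthogonal complements if $U \perp V$ and $U \cap V = \{0\}$. When $U \le \mathbb{R}^m$, the orthogonal complement of $U$ is denoted as $U^\perp$, and $U \oplus U^\perp = \mathbb{R}^m$.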

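Theorem (Fundamental Theorem of Linear Algebra). Given the linear mapping associated with matrix $A \in \mathbb{R}^{m \times n}$, we have:

1. $C(A) \oplus N(A^T) = \mathbb{R}^m$: the direct sum of the column space and left null space is the codomain of the mapping;

2. $C(A^T) \oplus N(A) = \mathbb{R}^n$: the direct sum of the row space and null space is the domain of the mapping;

3. $C(A) \perp N(A^T)$ and $C(A) \cap N(A^T) = \{0\}$: the column space is orthogonal to the left null space, and they are orthogonal complements of one another, $C(A) = N(A^T)^\perp$, $N(A^T) = C(A)^\perp$;

4. $C(A^T) \perp N(A)$ and $C(A^T) \cap N(A) = \{0\}$: the row space is orthogonal to the null space, and they are orthogonal complements of one another, $C(A^T) = N(A)^\perp$, $N(A) = C(A^T)^\perp$.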
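The theorem can be checked on a concrete matrix. The Python sketch below uses exact rational arithmetic (`fractions.Fraction`) and hypothetical helpers `rref`, `null_space`, `transpose` written only for this illustration. For the example $A \in \mathbb{R}^{4 \times 3}$, whose third column is the sum of the first two, it verifies $\dim C(A) + \dim N(A^T) = m$ and $\dim C(A^T) + \dim N(A) = n$, together with the orthogonality of each null-space basis vector to the corresponding columns or rows; orthogonality forces the trivial intersections, so the dimension counts are consistent with the two direct sums.

```python
from fractions import Fraction

def rref(M):
    """Exact reduced row echelon form of M; returns (R, pivot_columns)."""
    R = [[Fraction(v) for v in row] for row in M]
    rows, cols = len(R), len(R[0])
    pivots, r = [], 0
    for c in range(cols):
        if r == rows:
            break
        # First row at or below r with a non-zero entry in column c.
        p = next((i for i in range(r, rows) if R[i][c] != 0), None)
        if p is None:
            continue  # no pivot in this column: it corresponds to a free variable
        R[r], R[p] = R[p], R[r]
        piv = R[r][c]
        R[r] = [v / piv for v in R[r]]  # scale the pivot to 1
        for i in range(rows):
            if i != r and R[i][c] != 0:
                factor = R[i][c]
                R[i] = [a - factor * b for a, b in zip(R[i], R[r])]
        pivots.append(c)
        r += 1
    return R, pivots

def null_space(M):
    """Basis of N(M) = {x : M x = 0}, one vector per free column."""
    R, pivots = rref(M)
    cols = len(M[0])
    basis = []
    for free in range(cols):
        if free in pivots:
            continue
        x = [Fraction(0)] * cols
        x[free] = Fraction(1)
        for row, pc in enumerate(pivots):
            x[pc] = -R[row][free]  # solve each pivot row for its pivot variable
        basis.append(x)
    return basis

def transpose(M):
    return [list(col) for col in zip(*M)]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

# Hypothetical example: third column = first + second, so rank(A) = 2.
A = [[1, 2, 3],
     [2, 4, 6],
     [1, 0, 1],
     [0, 1, 1]]
m, n = len(A), len(A[0])
AT = transpose(A)

rank_A = len(rref(A)[1])     # dim C(A)
rank_AT = len(rref(AT)[1])   # dim C(A^T)
N_A = null_space(A)          # basis of N(A)
N_AT = null_space(AT)        # basis of N(A^T)

assert rank_A + len(N_AT) == m   # codomain: 2 + 2 = 4
assert rank_AT + len(N_A) == n   # domain:   2 + 1 = 3
# C(A) ⊥ N(A^T): every column of A (row of A^T) is orthogonal to N(A^T).
assert all(dot(col, v) == 0 for col in AT for v in N_AT)
# C(A^T) ⊥ N(A): every row of A is orthogonal to N(A).
assert all(dot(row, x) == 0 for row in A for x in N_A)
```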


Consideration of equality between sets arises in proving the above theorem. A standard technique to show set equality $X = Y$ is by double inclusion, $X \subseteq Y \wedge Y \subseteq X \Rightarrow X = Y$. The approach is applied here to the statements giving the decomposition of the codomain $\mathbb{R}^m$; a similar approach can be used for the decomposition of the domain $\mathbb{R}^n$.

(iii) $C(A) \perp N(A^T)$ (the column space is orthogonal to the left null space).

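Proof. Consider arbitrary $u \in C(A)$, $v \in N(A^T)$. By definition of $C(A)$, $\exists\, x \in \mathbb{R}^n$ such that $u = Ax$, and by definition of $N(A^T)$, $A^T v = 0$. Compute $u^T v = (Ax)^T v = x^T A^T v = x^T (A^T v) = x^T 0 = 0$, hence $u \perp v$ for arbitrary $u \in C(A)$, $v \in N(A^T)$, and $C(A) \perp N(A^T)$.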

(iv) $C(A) \cap N(A^T) = \{0\}$ ($0$ is the only vector both in $C(A)$ and $N(A^T)$).

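Proof. (By contradiction, reductio ad absurdum.) Assume there might be $b \in C(A)$ with $b \in N(A^T)$ and $b \neq 0$. Since $b \in C(A)$, $\exists\, x \in \mathbb{R}^n$ such that $b = Ax$. Since $b \in N(A^T)$, $A^T b = A^T A x = 0$. Note that $x \neq 0$, since $x = 0 \Rightarrow b = 0$, contradicting assumptions. Multiply the equality $A^T A x = 0$ on the left by $x^T$,
$$x^T A^T A x = 0 \;\Rightarrow\; (Ax)^T (Ax) = b^T b = \|b\|^2 = 0,$$
thereby obtaining $b = 0$, using norm property 3 ($\|b\| = 0$ only for $b = 0$). Contradiction.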

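(v) $C(A) \oplus N(A^T) = \mathbb{R}^m$ (the column space and left null space decompose the codomain).

Proof. (iii) and (iv) have established that $C(A)$, $N(A^T)$ are orthogonal complements. Moreover, every vector orthogonal to $C(A)$ lies in $N(A^T)$: if $v \perp C(A)$, then $a_j^T v = 0$ for each column $a_j$ of $A$, i.e., $A^T v = 0$. Together with (iii), double inclusion gives
$$N(A^T) = C(A)^\perp, \qquad C(A) \oplus C(A)^\perp = \mathbb{R}^m.$$
By Lemma 1 it results that $C(A) \oplus N(A^T) = \mathbb{R}^m$.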
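The decomposition $C(A) \oplus N(A^T) = \mathbb{R}^m$ means every $b$ splits as $b = p + r$ with $p \in C(A)$ and $r \in N(A^T)$. A minimal Python sketch for a hypothetical $3 \times 2$ example, assuming the columns are linearly independent so that $N(A^T)$ is spanned by the single vector $z = a_1 \times a_2$: $r$ is the orthogonal projection of $b$ onto $z$, and $p = b - r$.

```python
from fractions import Fraction

def cross(a, b):
    """Cross product in R^3 (orthogonal to both a and b)."""
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

a1, a2 = [1, 0, 2], [0, 1, 1]   # hypothetical independent columns of A (m = 3, n = 2)
z = cross(a1, a2)               # spans N(A^T), which is 1-dimensional here
b = [3, 1, 4]                   # hypothetical vector to decompose

t = Fraction(dot(b, z), dot(z, z))
r = [t * zi for zi in z]                 # component of b in N(A^T)
p = [bi - ri for bi, ri in zip(b, r)]    # remaining component of b

# For this particular A the first two rows form the identity, so the
# coefficients of p in the columns are simply x = (p[0], p[1]).
x1, x2 = p[0], p[1]
assert p == [x1 * u + x2 * v for u, v in zip(a1, a2)]  # p = A x, so p is in C(A)
assert dot(r, a1) == 0 and dot(r, a2) == 0            # A^T r = 0, so r is in N(A^T)
assert [pi + ri for pi, ri in zip(p, r)] == b         # b = p + r
print([str(v) for v in p], [str(v) for v in r])  # ['2', '1/2', '9/2'] ['1', '1/2', '-1/2']
```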

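The remainder of the FTLA is established by considering $A^T$ in place of $A$; e.g., since it has been established in (v) that $C(A) \oplus N(A^T) = \mathbb{R}^m$, replacing $A$ by $A^T \in \mathbb{R}^{n \times m}$ (and using $(A^T)^T = A$) yields $C(A^T) \oplus N(A) = \mathbb{R}^n$, etc.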

The FTLA asserts that the framework of linear algebra is complete: we can answer any question about linear combinations. What types of questions are asked? These are known as the main problems of linear algebra.

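Least squares problem. Given the components of vector $b \in \mathbb{R}^m$ in the standard basis vectors, find the linear combination of the columns $a_1, \dots, a_n$ of $A \in \mathbb{R}^{m \times n}$ that is “as close as possible” to $b$. Assess the discrepancy between $b$ and a linear combination $Ax$ through the two-norm,
$$\min_{x \in \mathbb{R}^n} \|b - Ax\|_2.$$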
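For two columns the minimization can be carried out by solving the normal equations $A^T A x = A^T b$, which state that the residual $b - Ax$ lies in $N(A^T)$, in agreement with the decomposition of $\mathbb{R}^m$ given by the FTLA. The Python sketch below (hypothetical helper `least_squares_2col`, exact arithmetic, Cramer's rule for the $2 \times 2$ system) fits a line $x_1 + x_2 t$ to three hypothetical data points. Forming $A^T A$ explicitly is fine for a tiny exact example, but in floating point it squares the condition number, so QR-based solvers are usually preferred.

```python
from fractions import Fraction

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def least_squares_2col(a1, a2, b):
    """Minimize ||b - (x1*a1 + x2*a2)||_2 via the 2x2 normal equations A^T A x = A^T b."""
    g11, g12, g22 = dot(a1, a1), dot(a1, a2), dot(a2, a2)  # entries of A^T A
    c1, c2 = dot(a1, b), dot(a2, b)                        # entries of A^T b
    det = g11 * g22 - g12 * g12  # non-zero when a1, a2 are linearly independent
    return (Fraction(c1 * g22 - g12 * c2, det),            # Cramer's rule
            Fraction(g11 * c2 - g12 * c1, det))

def residual(a1, a2, b, x1, x2):
    """Residual vector b - A x."""
    return [bi - x1 * u - x2 * v for bi, u, v in zip(b, a1, a2)]

# Hypothetical data: fit y = x1 + x2*t at t = 0, 1, 2.
ones, t = [1, 1, 1], [0, 1, 2]
y = [1, 2, 2]
x1, x2 = least_squares_2col(ones, t, y)
print(x1, x2)  # 7/6 1/2

res = residual(ones, t, y, x1, x2)
assert dot(ones, res) == 0 and dot(t, res) == 0   # residual lies in N(A^T)
best = dot(res, res)                              # squared two-norm of the residual
other = residual(ones, t, y, 1, Fraction(1, 2))
assert best < dot(other, other)                   # a different choice of x does worse
```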

Solving a linear system. Given the components of vector $b \in \mathbb{R}^m$ in the standard basis vectors, what are the coordinates $x \in \mathbb{R}^n$ with respect to another set of vectors $\{a_1, \dots, a_n\}$?
$$Ax = b,$$
with $A$ a matrix with column vectors $a_1, \dots, a_n$. Very often the matrix is square, $m = n$.
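A minimal sketch of solving $Ax = b$ for a square $A$ by Gaussian elimination with back substitution, in Python with exact rational arithmetic; `solve` is a hypothetical helper written for this illustration. The usage finds the coordinates of $b = (5, 3)$ with respect to the hypothetical vectors $a_1 = (1, 1)$, $a_2 = (1, -1)$.

```python
from fractions import Fraction

def solve(A, b):
    """Solve the square system A x = b exactly; raise ValueError if A is singular."""
    n = len(A)
    # Augmented matrix [A | b] with exact rational entries.
    M = [[Fraction(v) for v in row] + [Fraction(bi)] for row, bi in zip(A, b)]
    # Forward elimination: zero out the entries below each pivot.
    for k in range(n):
        p = next((i for i in range(k, n) if M[i][k] != 0), None)
        if p is None:
            raise ValueError("matrix is singular")
        M[k], M[p] = M[p], M[k]
        for i in range(k + 1, n):
            factor = M[i][k] / M[k][k]
            M[i] = [a - factor * c for a, c in zip(M[i], M[k])]
    # Back substitution on the upper-triangular system.
    x = [Fraction(0)] * n
    for i in range(n - 1, -1, -1):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x

A = [[1, 1],
     [1, -1]]   # columns a1 = (1, 1), a2 = (1, -1)
x = solve(A, [5, 3])
print([str(v) for v in x])  # ['4', '1']

try:
    solve([[1, 2], [2, 4]], [1, 2])   # dependent columns: no unique solution
except ValueError as e:
    print(e)  # matrix is singular
```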

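Eigenproblem. Given a square matrix $A \in \mathbb{C}^{m \times m}$, are there linear combinations $x \neq 0$ that leave the direction of the matrix-vector product unchanged? That is, find $x \neq 0$ and $\lambda$ such that
$$Ax = \lambda x.$$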

Note that the problem is now specified to allow complex components of $A$. The linear algebra framework developed for vectors in $\mathbb{R}^m$ carries over with minimal modification to $\mathbb{C}^m$, and the eigenproblem requires consideration of complex values, since even a real matrix can have complex eigenvalues $\lambda$.
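The need for complex values already appears for real $2 \times 2$ matrices. The Python sketch below (hypothetical helpers `eig2`, `matvec`) computes eigenvalues from the characteristic polynomial $\lambda^2 - \operatorname{tr}(A)\,\lambda + \det(A) = 0$ using `cmath.sqrt`. For the rotation by $90^\circ$ no real direction is preserved, and the eigenvalues are $\pm i$. Solving the characteristic polynomial is only a small-case illustration, not how eigenvalues are computed for larger matrices.

```python
import cmath

def eig2(A):
    """Eigenvalues of a 2x2 matrix from lambda^2 - tr(A)*lambda + det(A) = 0."""
    (a, b), (c, d) = A
    tr, det = a + d, a * d - b * c
    disc = cmath.sqrt(tr * tr - 4 * det)  # complex square root, valid for negative arguments
    return (tr + disc) / 2, (tr - disc) / 2

def matvec(A, x):
    return [sum(aij * xj for aij, xj in zip(row, x)) for row in A]

R = [[0, -1],
     [1, 0]]            # rotation by 90 degrees in the plane
lam1, lam2 = eig2(R)    # lam1 = i, lam2 = -i
x = [1, -1j]            # eigenvector for lambda = i: (R - i I) x = 0
Rx = matvec(R, x)
assert all(abs(u - lam1 * v) < 1e-12 for u, v in zip(Rx, x))  # R x = lambda x
```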