Eigenproblems

Synopsis. The simple question of what directions

remain unchanged by a linear mapping leads to widely useful concepts. In

particular, constructive algorithms to obtain the orthonormal bases for

the fundamental matrix subspaces predicted by the singular value

decomposition are obtained. Several additional matrix factorizations

arise and offer insight into linear mappings.

1.Determinants

Linear mappings of a vector space onto itself

are characterized by a square matrix

whose column vectors can be considered as the edges of a geometric

object in .

When a

parallelogram is obtained with area ,

with the length of the base edge and

the height. The parallelogram edges are

specified by the vectors

oriented at angles

with respect to the . With ,

the parallelogram height is

The trigonometric functions can be related to the edge vectors through

The above allow statement of a formula for the parallelogram area

strictly in terms of edge vector components

By the formula can be either positive

of negative, and indicates the order of denoting edge vectors since

the parallelogram can be specified either as edges or .

|

|

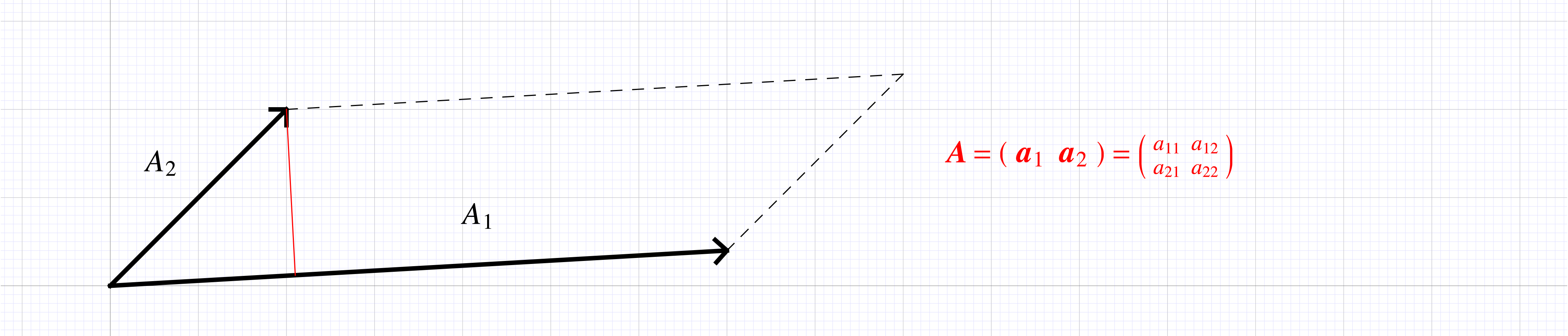

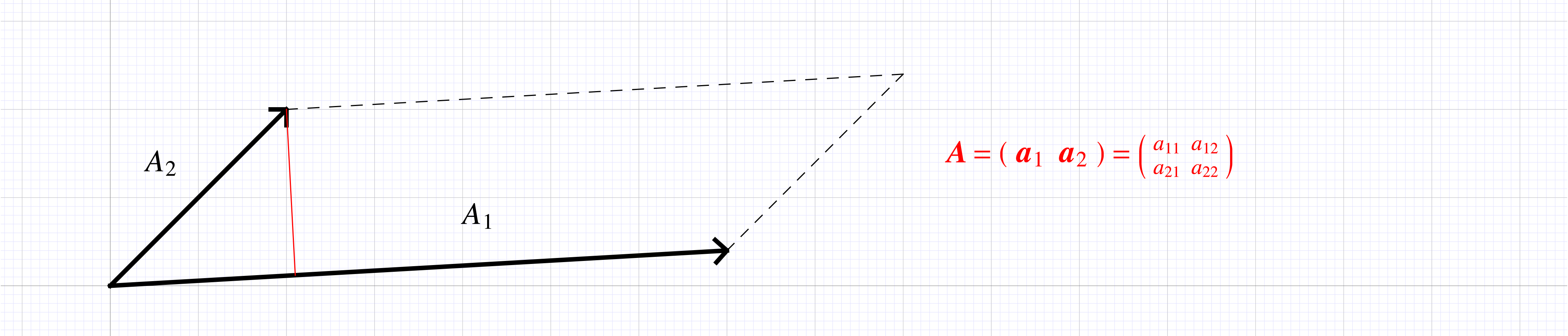

Figure 1. Area of a parallelogram

(hyperparallelipiped in two-dimensions) with edge vectors

.

|

Recall that the norm of a vector

is a functional giving the magnitude of a vector. The norm

of a matrix

is also a functional specifying the maximum amplification of vector

norm through the linear mapping ,

as encountered in the SVD singular values. The above calculations

suggest yet another functional, the signed area of the geometric

object with edge vectors .

This functional is a mapping from the vector space of

matrices to the reals and is known as the

determinant of a matrix.

Definition. The determinant of a square

matrix

is a real number giving the (oriented) volume of the parallelipiped

spanned by matrix column vectors.

The geometric interpretation of a determinant leads to algebraic

computation rules. For example, consider the parallelogram with

colinear edge vectors, .

In this case the parallelogram area is zero. In general whenever one

of the vectors within is linearly dependent on the others

the dimension of is less than ,

and the volume of the associated parallelipiped is zero. Deduce that

|

(1) |

Swapping a pair of edges changes the orientation of the edge vectors

and leads to a sign change of the determinant. Another swap changes

the sign again. A sign , the parity of a

permutation, is associated to any permutation

where is the number of pair swaps

needed to carry out the permutation. It

results that applying a permutation matrix onto

changes the determinant sign

|

(2) |

Recall that

permutes rows and

permutes columns. The edges of a geometric object could be specified

in either column format or row format with no change in the geometric

properties leading to

Scaling the length of an edge changes the volume in accordance with

|

(4) |

With reference to Fig. (1), consider edge

decomposed into two parts along the same direction .

The area of the parallelogram with edge

is the sum of those with edges .

This generalizes to arbitrary decompositions of the

vector, leading to the rule

|

(5) |

The above two rules (4,5) state that the

determinant is a linear mapping in the first column. In conjunction

with column permutation, deduce that the determinant is linear in any

column or row of the matrix .

Consider now an important consequence, the result upon the determinant

of adding a multiple of one column to another column

Since the second term in the above sum has two identical columns its

value is zero and

stating that adding a multiple of a column to another does not change

the value of a determinant. Similarly, adding a multiple of a row to

another does not change the value of a determinant. The practical

importance of the rules is that the elementary operations from

Gaussian elimination do not change the value of a determinant.

The determinant of the identity matrix equals one, ,

and that of a matrix product is the product of determinants of the

factors

The determinant of a diagonal matrix is the product of its diagonal

components

The properties can be used in conjunction with known matrix

factorizations.

-

Determinant of an orthogonal

matrix is .

Since

-

Determinant of a matrix equals the product of its singular values

In particular if , .

The determinant can be defined either in geometric terms as above or

in algebraic terms. The ageneral algebraic definition is specified in

terms of the components of the matrix as

with the sum carried out over all permutations of

numbers, one of which is specified as

There are such

permuations, a number that grows rapidly with

hence the algebraic definition holds little interest for practical

computation, though it was the object of considerable historical

interest through Cramer's rule to solve linear systems.

2.Eigenvalues and the characteristic

polynomial

The eigenproblem is to find invariant directions of a linear mapping

,

meaning those non-zero input vectors that lead to an output in the

same direction perhaps scaled by factor

The above can be restated as

The above is obviously satisfied for ,

but this case is excluded as a solution to the eigenproblem since the

zero vector does not uniquely specify a direction in .

For there to be a non-zero solution to the eigenproblem the null space

of

must be of dimension at least one. It results that the matrix

is not of full rank, often stated as

is singular, and at least one of the singular values of

must be zero. Through the determinant properties it results that

The algebraic definition of a determinant leads to

such that is a polynomial of degree in . It is

known through the fundamental theorem of algebra that a polynomial of

degree has

roots ,

with some perhaps repeated

Note that in general the roots can be complex even for polynomials

with real coefficients as exemplified by .

If

are the distinct roots, each repeated

times

The number of times a root is repeated is called the algebraic

multiplicty of an eigenvalue.

For each eigenvalue

has a non-trivial null space called the eigenspace of

The eigenvector associated with an eigenvalue is a member of this

eigenspace

Note that eigenvectors can be scaled by any non-zero number since

In practice, it is customary to enforce .

The dimension of the eigenspace is called the geometric multiplicity of

eigenvalue

The conclusion from the above is that

solutions of the eigenproblem exist. There are

eigenvalues, not necessarily distinct, and associated eigenvectors. As

usual instead of the individual statements

it is more efficient to group eigenvalues and eigenvectors into matrices

and state the eigenproblem in matrix terms as

3.Eigendecomposition

3.1.Simple cases

Insight into eigenproblem solution is gained by considering common

geometric transformations.

Projection matrix. The matrix

projects a vector onto . Vectors within are

unaffected

hence is an eigenvector with

associated eigenvalue .

The matrix is of rank one and by the

FTLA, .

In the SVD of ,

It results that any vector within the

left null space

is an eigenvector with associated eigenvalue .

In matrix form the eigenproblem solution is

and since is orthogonal ,

multiplication no the left by

is possible leading to

Reflection matrix. The matrix

is the reflector across . Vectors

colinear with do not change

orientation

and are therefore eigenvectors with associated eigenvalue .

Vectors orthogonal to

change orientation along their direction and are hence eigenvectors with

associated eigenvalue

In there are

different vectors orthogonal to and

mutually orthonormal. The eigenproblem solution can again be stated in

matrix form as

Since is an orthogonal matrix, it

results that

Rotation matrix. The matrix

represents the isometric rotation of two-dimensional vectors. If ,

with eigenvalues ,

and eigenvector matrix .

For ,

the eigenvalues are ,

again with eigenvector matrix .

If ,

the orientation of any non-zero

changes upon rotation by . The

characteristic polynomial has complex roots

and the directions of invariant orientation have complex components (are

outside the real plane )

3.2.Vectors and matrices with complex

components

The above rotation case is an example of complex values arising in the

solution of an eigenproblem for a matrix with real coefficients.

Fortunately, the framework for working with vectors and matrices that

have complex components is almost identical to working in the reals. The

only significant difference is the realtionship between the two-norm and

inner product. When

the inner product

corresponds to the dot product and the two-norm of a vector can be

defined as

Consider a complex number ,

taken to represent a point at coordinates in

the plane. The

magnitude or absolute value of is defined

as the distance from the origin to point in

Introducing the complex conjugate

(the reflection of across the -axis),

the absolute value can also be stated as

Extending this idea to vectors of complex numbers, transposition is

combined with taking the conjugate into an operation of taking the

adjoint denoted by an superscript

Everywhere a transposition appears when dealing with vectors in , it is replaced by

taking the adjoint when working with vectors in .

Most notably when

it is said to be orthogonal if

For

the matrix is said to be unitary if

Orthogonality of

is expressed as .

The FTLA is restated as

and the SVD as

3.3.General solution of the eigenproblem

As exemplified above, solving an eigenproblem

requires:

-

Finding the roots of

the characteristic polynomial ;

-

Finding bases for the eigenspaces .

The matrix form of the solution is then stated as

If the matrix has linearly independent

columns (it is non-singular), it can then be inverted leading to the

eigendecomposition

yet another factorization of the matrix .

Such eigendecompositions are very useful when repeatedly applying a

linear mapping since .

In general after applications of the matrix

arises. If an eigendecomposition is avaiable, then

and is easily

computable

The simple eigenproblem for

reveals issues that might arise in more general situations. The

characteristic polynomial

has a repeated root .

Note that

is already in row echelon form indicating that . The FTLA then

states the

and the matrix form of the eigenproblem solution is

Note that the second column of is the

same as the first, hence has linearly

dependent columns and cannot be inverted. The matrix

has an eigenproblem solution but does not have an eigendecomposition. An

eigendecomposition is possible only when for each eigenvalue its

algebraic multiplicty is equal to its geometric multiplicity.

An immediate question is to identify those matrices for which an

eigendecomposition is possible and perhaps of a particularly simple

form. A matrix

is said to be normal if

A normal matrix has a unitary eigendecomposition. There exists

unitary that satisfies

In the real case the above becomes ,

and there exists

orthogonal that satisfies

Computational procedures to solve an eigenproblem become quite

complicated for the general case and have been the object of extensive

research. Fortunately the problem is well understood and solution

procedures have been implemented in all major computational systems. In

Julia for example:

∴ |

A=[1 0; 0 2]; eigvals(A) |

∴ |

A=[1 1; 0 1]; eigvals(A) |

|

(9) |

4.Finding the SVD

An important application of eigendecompositions is actual computation of

the SVD. The SVD theorem simply asserted existence of intrinsic

orthogonal basis for the domain and codomain of a linear mapping in

which the mapping behaved as a simple scaling. It did not provide a

procedure to find those bases in general.

From the above, though an eigendecomposition for general

may not exist, an orthogonal decomposition always exists for

since

verifies that is normal. Consider now

the SVD

to find that

stating that the left singular vectors

are the eigenvectors of .

Likewise

is a normal matrix and from

the right singular vectors are found as the eigenvectors of .

The singular values of are the square

roots of the eigenvalues of either or

.

The two eigenproblems above are solved whenever a computation of the SVD

is invoked within Julia.