Definition.

The Kronecker delta symbol $\delta_{ij}$ is defined as

$$\delta_{ij} = \begin{cases} 1, & i = j, \\ 0, & i \neq j. \end{cases}$$

Definition.

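A set of vectors $\{u_1, \dots, u_n\}$ is said to be orthonormal if $u_i^T u_j = \delta_{ij}$, i.e., each vector has unit norm and distinct vectors are mutually orthogonal.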
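The orthonormality condition can be checked numerically by forming all pairwise scalar products. The following is a minimal sketch; the helper name `kron_delta` and the choice of a 2D rotation matrix as the test set are assumptions made for illustration.

```julia
using LinearAlgebra

# Indicator that equals 1 when the indices agree and 0 otherwise
# (hypothetical helper name)
kron_delta(i, j) = i == j ? 1 : 0

# Columns of a rotation matrix form an orthonormal set
θ = π / 6
U = [cos(θ) -sin(θ); sin(θ) cos(θ)]

# G[i,j] = u_i' * u_j should match kron_delta(i,j) up to rounding
G = U' * U
n = size(U, 2)
is_orthonormal = all(isapprox(G[i, j], kron_delta(i, j); atol=1e-12)
                     for i in 1:n, j in 1:n)
println(is_orthonormal)
```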

Given vectors

Initialize ,..,,

;

;
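One standard way to build an orthonormal set from given vectors is the modified Gram-Schmidt process. The sketch below assumes that this is the kind of procedure intended here; the function name `mgs`, the tolerance, and the test matrix are hypothetical choices, and the columns are assumed to be linearly independent.

```julia
using LinearAlgebra

# Modified Gram-Schmidt sketch: orthonormalize the columns of A,
# returning Q with orthonormal columns and upper triangular R with A ≈ Q*R.
function mgs(A::AbstractMatrix{<:Real})
    n = size(A, 2)
    Q = float.(A)                 # q_j starts as a copy of a_j
    R = zeros(n, n)
    for i in 1:n
        R[i, i] = norm(Q[:, i])
        R[i, i] > eps() || error("columns appear linearly dependent")
        Q[:, i] ./= R[i, i]       # normalize q_i
        for j in i+1:n
            # remove the component of q_j along q_i
            R[i, j] = dot(Q[:, i], Q[:, j])
            Q[:, j] .-= R[i, j] .* Q[:, i]
        end
    end
    return Q, R
end

A = [1.0 1.0; 1.0 0.0; 0.0 1.0]
Q, R = mgs(A)
println(Q' * Q)   # close to the 2×2 identity
```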

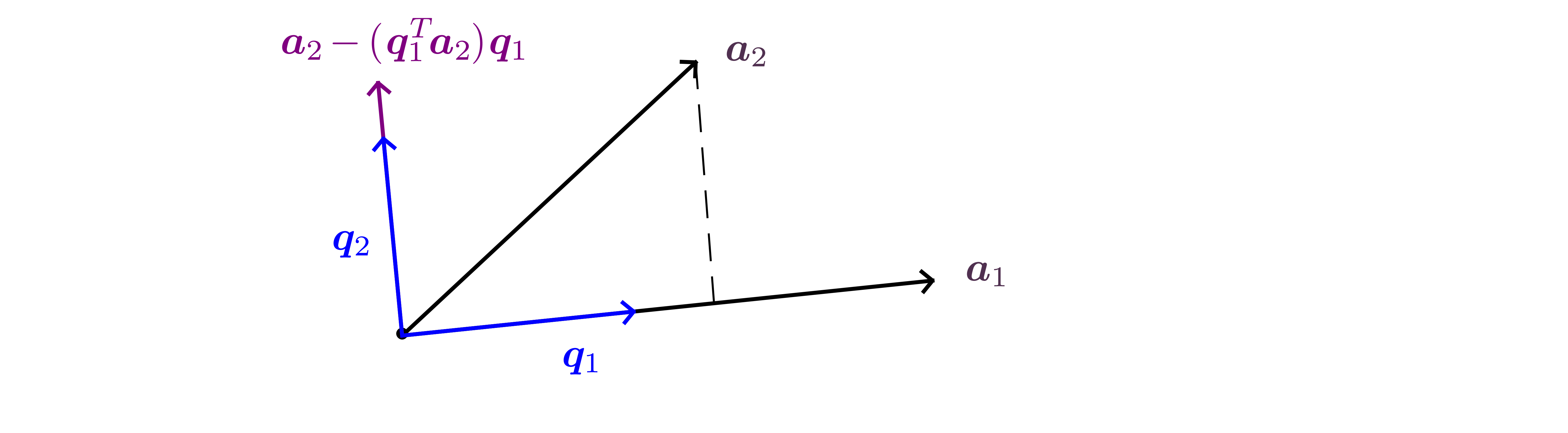

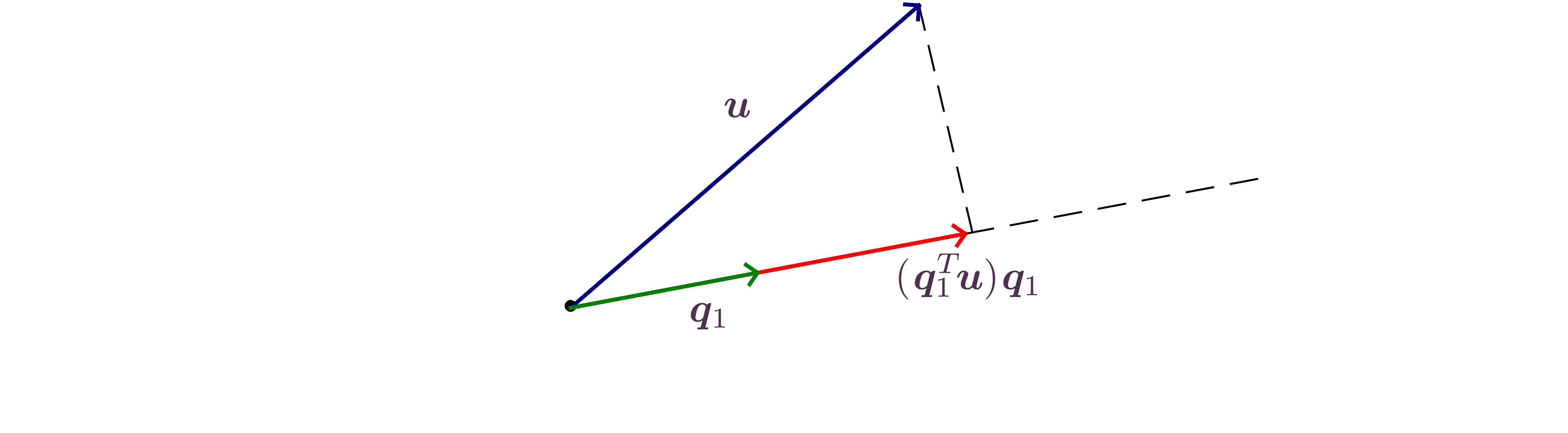

Orthogonal projection of a vector along another vector


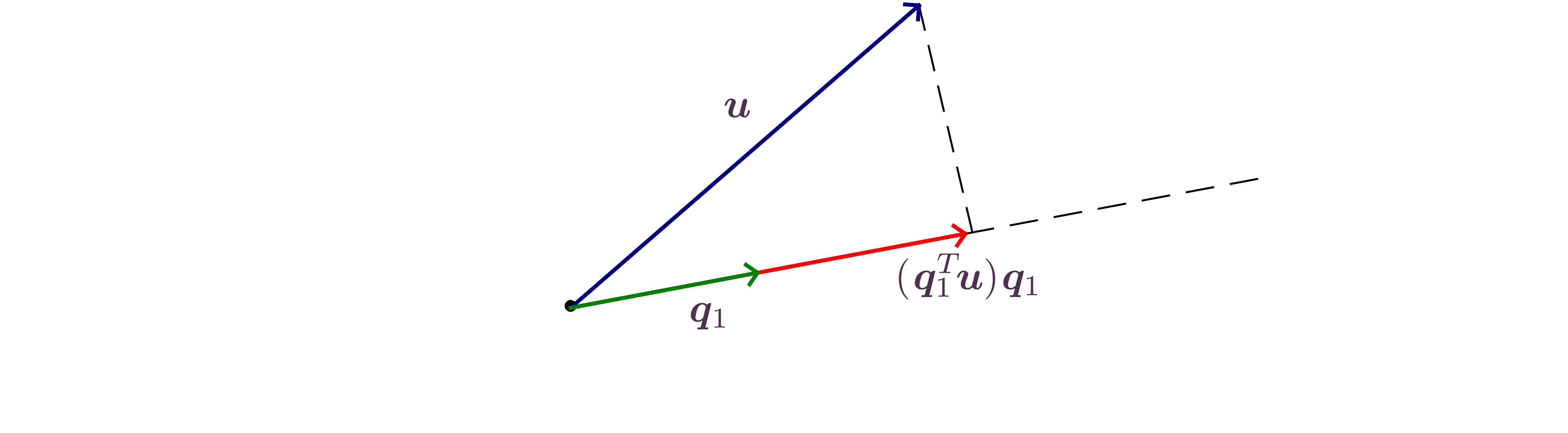

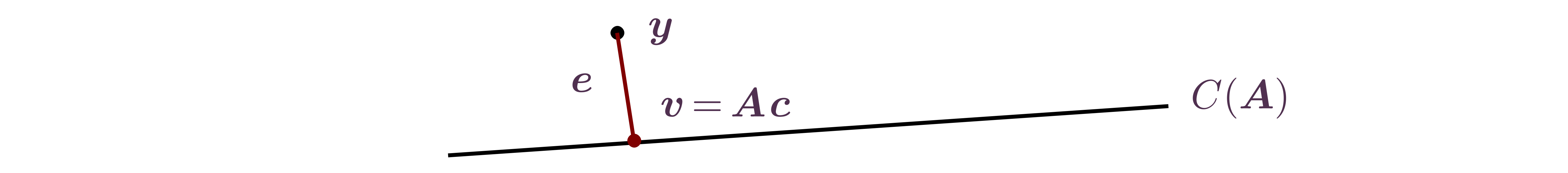

Definition.

The orthogonal projection of $v \in \mathbb{R}^m$ along direction $q \in \mathbb{R}^m$, $\|q\| = 1$, is the vector $(q^T v)\, q$.
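As a small numerical illustration, the projection of a vector along a unit direction is the scalar product of the two vectors times the direction. The vectors below are arbitrary values chosen for the example.

```julia
using LinearAlgebra

v = [3.0, 4.0]
q = [1.0, 0.0]          # direction, already of unit norm

w = dot(q, v) * q       # projection of v along q
r = v - w               # remainder after removing the projection

println(w)
println(dot(q, r))      # the remainder is orthogonal to q
```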

- Scalar-vector multiplication commutativity: $(q^T v)\, q = q\, (q^T v)$

- Matrix multiplication associativity: $q\, (q^T v) = (q q^T)\, v = P_q v$, with $P_q = q q^T$

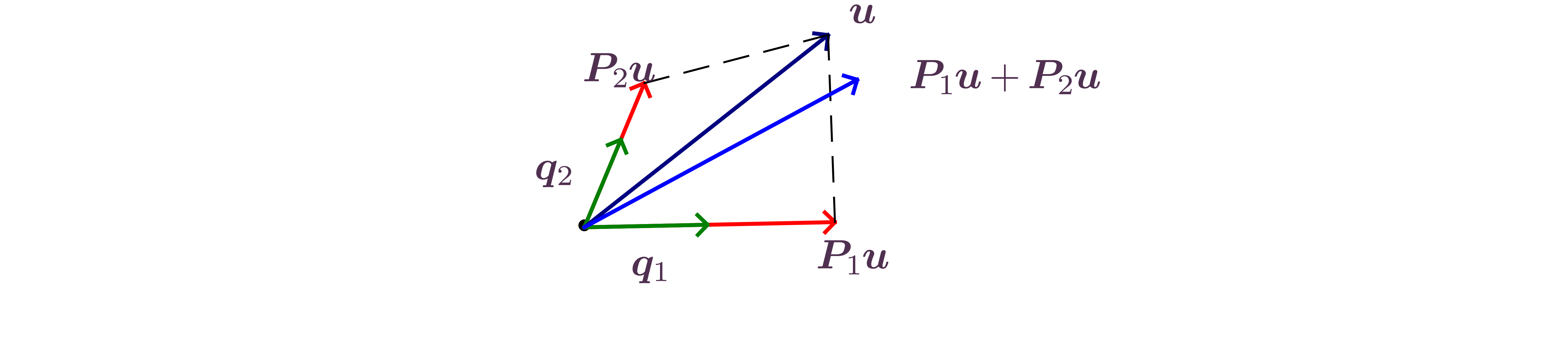

Definition.

The matrix $P_q = q q^T$ is the orthogonal projector along direction $q$, $\|q\| = 1$.
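The projector can be formed explicitly as an outer product and checked against the defining properties of an orthogonal projector: it reproduces the projection of any vector, it is idempotent, and it is symmetric. This is a minimal sketch with arbitrary example vectors.

```julia
using LinearAlgebra

q = normalize([1.0, 2.0, 2.0])   # unit-norm direction
P = q * q'                        # outer product, a 3×3 matrix

v = [1.0, 0.0, -1.0]
same_projection = P * v ≈ dot(q, v) * q   # P v equals (q' v) q
idempotent      = P * P ≈ P               # projecting twice changes nothing
symmetric       = P' ≈ P

println((same_projection, idempotent, symmetric))
```

Forming $q q^T$ costs $O(m^2)$ storage, so in practice the product $q\,(q^T v)$ is usually evaluated without building the matrix.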

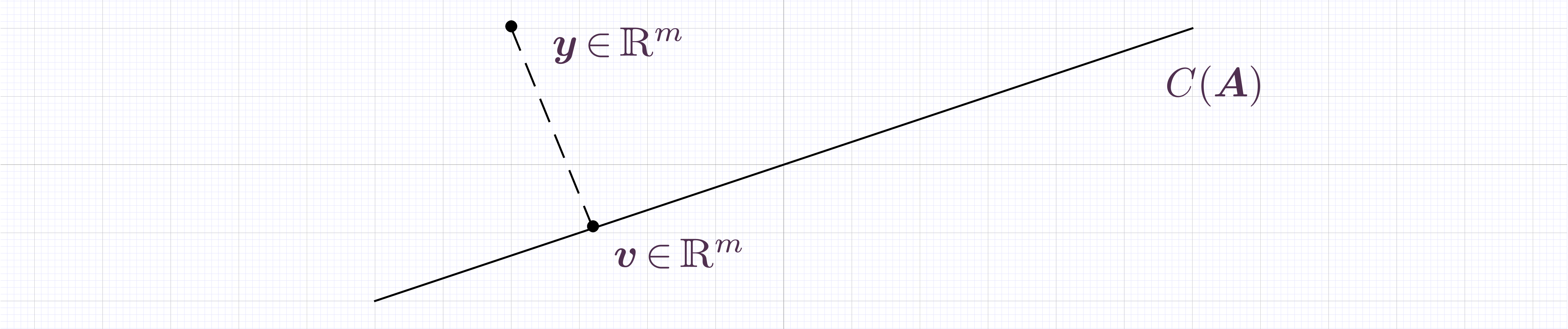

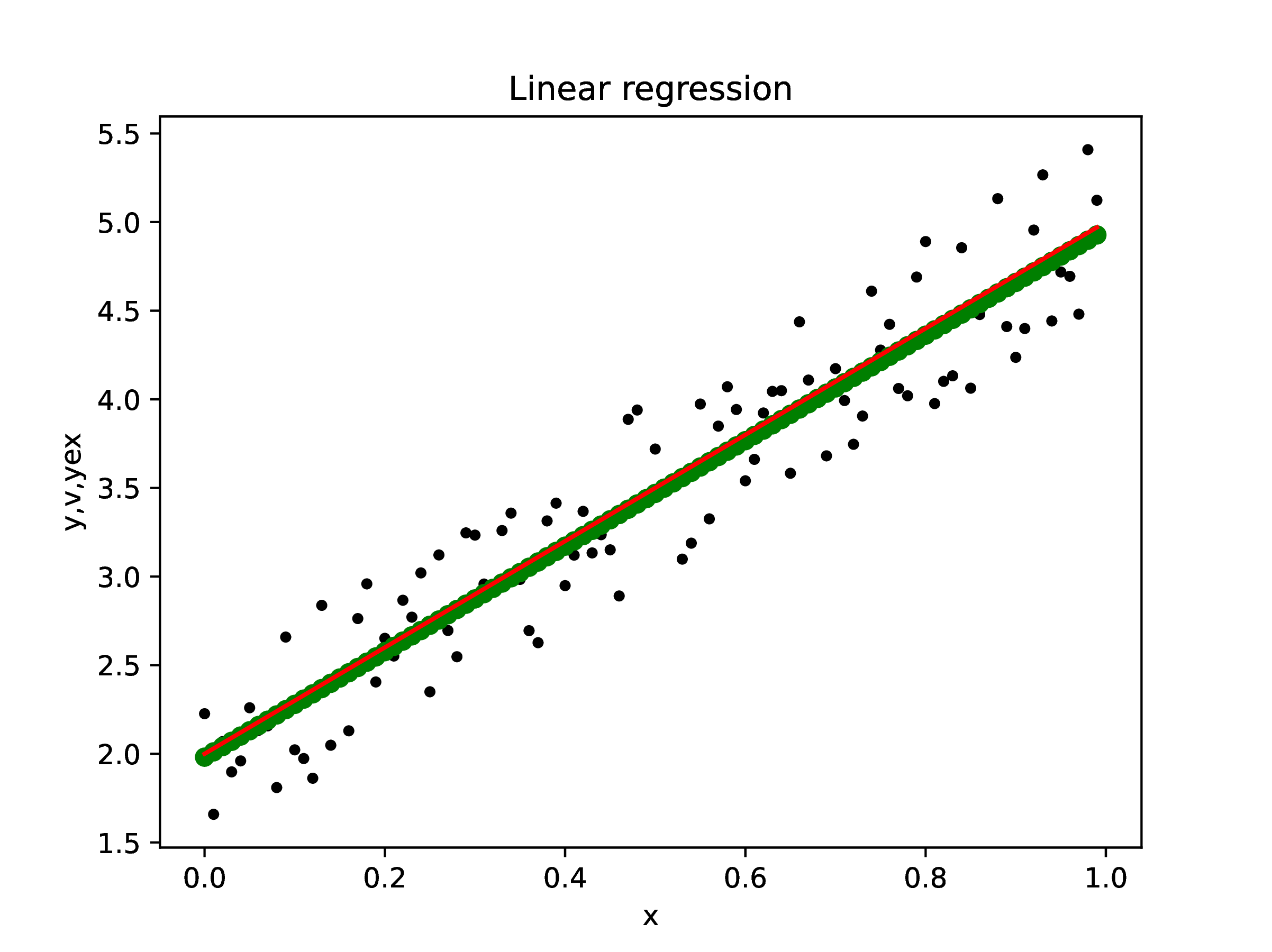

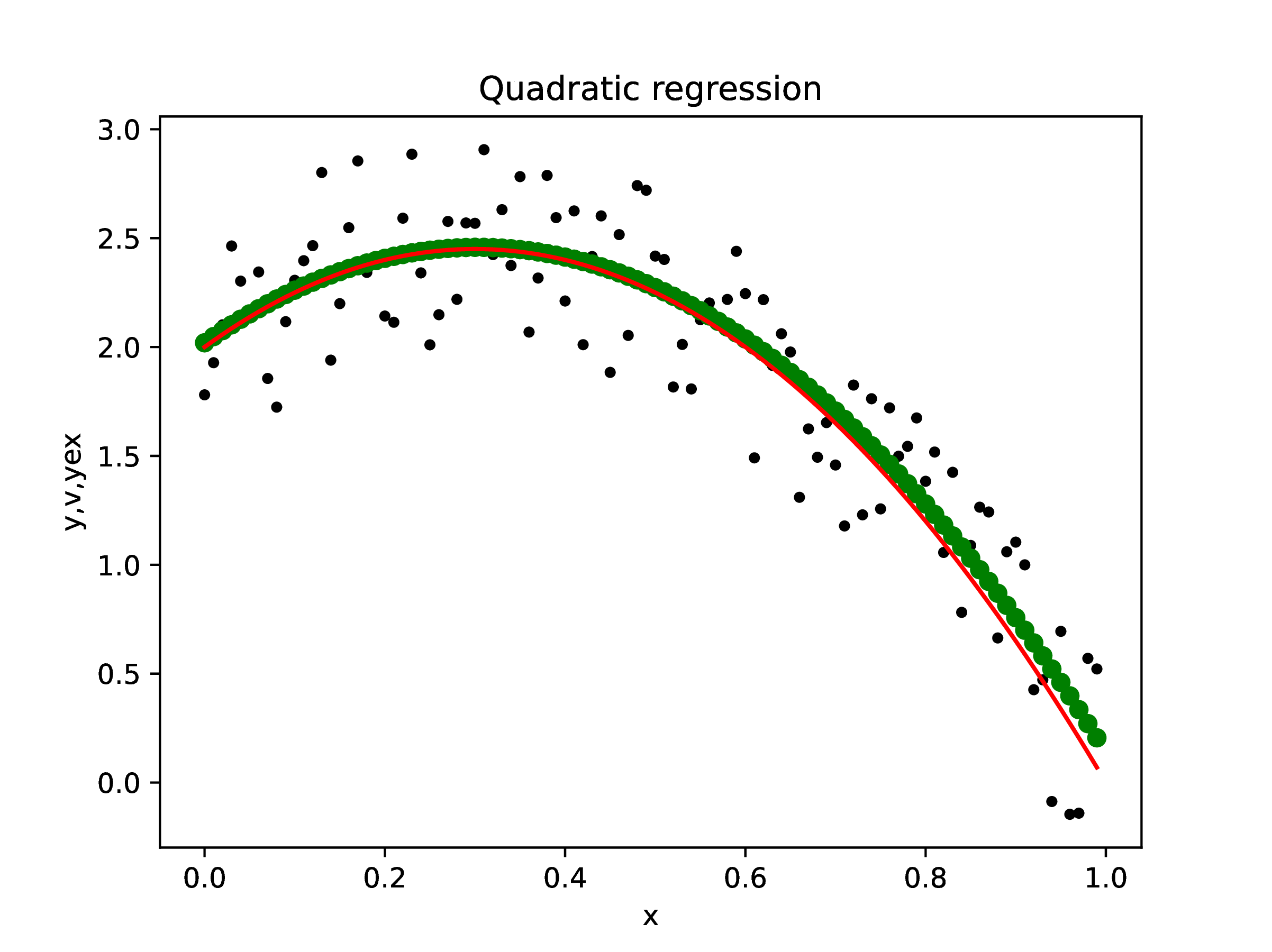

The $m = n$ case: polynomial interpolation


Definition.

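The polynomial interpolant of data $\{(x_i, y_i),\ i = 1, \dots, m\}$ with $x_i \neq x_j$ if $i \neq j$ is a polynomial $p$ of degree at most $m - 1$ that satisfies the conditions $p(x_i) = y_i$, $i = 1, \dots, m$.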
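Written in the monomial basis, the interpolation conditions become a square linear system that can be solved through a QR factorization. The notation below ($c_j$ for the coefficients, $A$ for the system matrix) is an assumption chosen to match the usual presentation.

```latex
p(x) = c_0 + c_1 x + \dots + c_{m-1} x^{m-1}, \qquad
p(x_i) = y_i,\ i = 1, \dots, m
\;\Longleftrightarrow\;
A c = y, \quad A_{ij} = x_i^{\,j-1}.

A = Q R,\quad Q^T Q = I
\;\Longrightarrow\;
R c = Q^T y
\;\Longrightarrow\;
c = R^{-1} Q^T y .
```

Since the $x_i$ are distinct, $A$ is nonsingular, so $R$ is invertible and the coefficient vector $c$ is unique.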

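```julia
using LinearAlgebra   # provides qr

# Sample the quadratic c0 + c1*x + c2*x^2 at m = 3 distinct points
m=3; x=(0:m-1)./m; c0=2; c1=3; c2=-5; yex=c0.+c1*x.+c2*x.^2;

# System matrix with columns 1, x, x^2, and its QR factorization
A=ones(m,3); A[:,2]=x[:]; A[:,3]=x[:].^2; QR=qr(A); Q=Matrix(QR.Q)[:,1:3]; R=QR.R[1:3,1:3];

# Solve R c = Q' yex for the coefficients; c ≈ [2, 3, -5]
c = R\(transpose(Q)*yex)
```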

Note that the coefficients $c_0 = 2$, $c_1 = 3$, $c_2 = -5$ used to generate the data are recovered, up to floating-point rounding.