MATH347 L28: Course review


-

What is linear algebra and why is it so important to so many applications?

-

Basic operations: defined to express linear combinations
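The matrix-vector product is one basic operation defined so that it expresses a linear combination: A x is the combination of the columns of A with coefficients taken from x. The minimal NumPy sketch below uses arbitrary illustrative values and checks that both ways of writing it agree:

```python
import numpy as np

# Columns of A are the vectors being combined (illustrative values).
A = np.array([[1.0, 2.0],
              [3.0, 4.0],
              [5.0, 6.0]])
x = np.array([2.0, -1.0])  # scaling coefficients

# Matrix-vector product as a single operation.
b_product = A @ x

# The same vector built explicitly as x_1 * a_1 + x_2 * a_2.
b_combination = x[0] * A[:, 0] + x[1] * A[:, 1]

print(b_product)  # [0. 2. 4.]
```

Each column of a matrix-matrix product A B is, likewise, A applied to the corresponding column of B, so it is again a linear combination of the columns of A.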

-

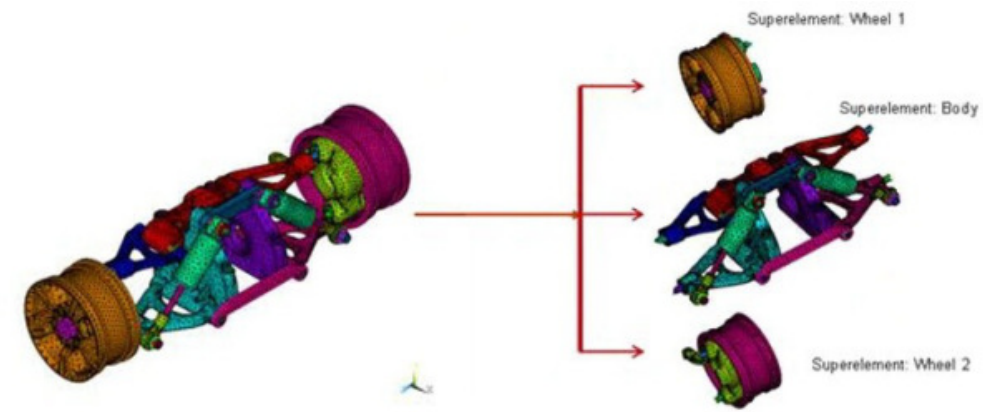

Linear operators, Fundamental Theorem of Linear Algebra (FTLA)
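For a matrix A with m rows, n columns and rank r, the FTLA states that the column space C(A) and the left null space N(A^T) are orthogonal complements in R^m, with dimensions r and m - r, while the row space C(A^T) and the null space N(A) are orthogonal complements in R^n, with dimensions r and n - r. The sketch below assumes NumPy and an illustrative rank-1 matrix. It reads orthonormal bases of all four subspaces off the SVD (one convenient way to obtain them, not the only one) and checks these relations numerically:

```python
import numpy as np

# Illustrative rank-1 matrix: the second row is twice the first.
A = np.array([[1.0, 2.0, 3.0],
              [2.0, 4.0, 6.0]])
m, n = A.shape

r = np.linalg.matrix_rank(A)    # numerical rank
U, s, Vt = np.linalg.svd(A)     # full SVD: U is m x m, Vt is n x n

col_space  = U[:, :r]      # C(A), subspace of R^m, dimension r
left_null  = U[:, r:]      # N(A^T), subspace of R^m, dimension m - r
row_space  = Vt[:r, :].T   # C(A^T), subspace of R^n, dimension r
null_space = Vt[r:, :].T   # N(A), subspace of R^n, dimension n - r

# Orthogonality of complementary subspaces.
print(np.allclose(col_space.T @ left_null, 0))   # True
print(np.allclose(row_space.T @ null_space, 0))  # True
# Defining properties of the null spaces.
print(np.allclose(A @ null_space, 0))            # True
print(np.allclose(A.T @ left_null, 0))           # True
print(r, null_space.shape[1], left_null.shape[1])  # 1 2 1
```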

-

Factorizations: more convenient expressions of linear combinations
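As one example of a factorization (the QR factorization, picked here for illustration), A = Q R rewrites each column of A as a linear combination of orthonormal vectors, with the coefficients collected in an upper triangular R. A minimal sketch, assuming NumPy and an arbitrary 3 by 2 matrix:

```python
import numpy as np

A = np.array([[1.0, 1.0],
              [1.0, 0.0],
              [0.0, 1.0]])

Q, R = np.linalg.qr(A)  # reduced QR: Q is 3 x 2, R is 2 x 2

# Column j of A is a combination of columns 0..j of Q,
# with coefficients R[0, j], ..., R[j, j].
print(np.allclose(Q @ R, A))            # True
print(np.allclose(Q.T @ Q, np.eye(2)))  # True: orthonormal columns
print(np.allclose(R, np.triu(R)))       # True: R is upper triangular
```

Because the columns of Q are orthonormal, the coefficients of a vector in that basis are just inner products (Q^T b), which is what makes this form more convenient to work with.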

-

Solving linear systems (change of basis)
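Solving A x = b can be read as a change of basis: b is given by its components in the standard basis, and x holds its coordinates with respect to the basis formed by the columns of A. A short sketch, assuming NumPy and an illustrative invertible 2 by 2 matrix; the second route reuses a QR factorization instead of forming an inverse:

```python
import numpy as np

# Columns of A form a (non-standard) basis of R^2.
A = np.array([[2.0, 1.0],
              [1.0, 3.0]])
b = np.array([3.0, 5.0])  # components of b in the standard basis

# Coordinates of b in the basis {a_1, a_2}.
x = np.linalg.solve(A, b)

# Same coordinates via A = QR: solve R x = Q^T b.
Q, R = np.linalg.qr(A)
x_qr = np.linalg.solve(R, Q.T @ b)

# Rebuilding b from its new coordinates recovers the original vector.
print(np.allclose(x[0] * A[:, 0] + x[1] * A[:, 1], b))  # True
```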

-

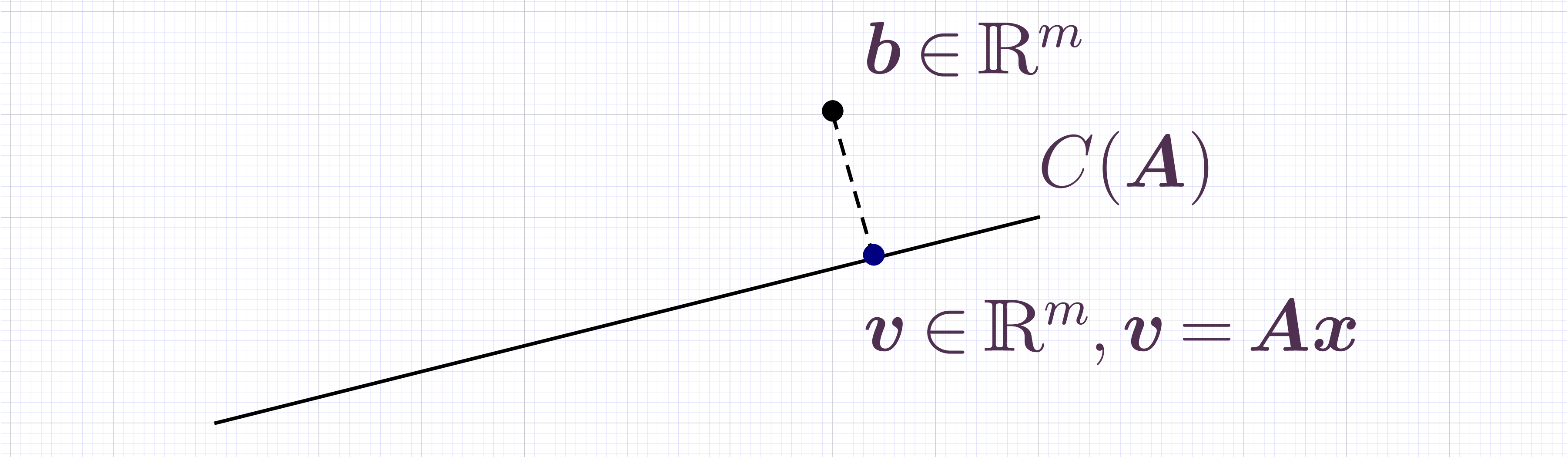

Best 2-norm approximation: least squares
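A least squares problem looks for the x that minimizes the 2-norm of b - A x, and at the minimizer the residual is orthogonal to C(A), which gives the normal equations A^T A x = A^T b. The sketch below fits a line to four hypothetical data points, assuming NumPy, and compares the SVD-based `np.linalg.lstsq` with the normal equations:

```python
import numpy as np

# Hypothetical data: fit y ≈ c0 + c1 * t in the 2-norm.
t = np.array([0.0, 1.0, 2.0, 3.0])
y = np.array([1.0, 3.0, 4.0, 4.0])

# Design matrix with columns 1 and t.
A = np.column_stack([np.ones_like(t), t])

# SVD-based least squares solver.
c, residual_ss, rank, sing_vals = np.linalg.lstsq(A, y, rcond=None)

# Normal equations: fine for this small, well-conditioned example,
# but forming A^T A squares the condition number in general.
c_normal = np.linalg.solve(A.T @ A, A.T @ y)

r = y - A @ c                   # residual
print(np.round(c, 6))           # [1.5 1. ]
print(np.allclose(A.T @ r, 0))  # True: residual is orthogonal to C(A)
```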

-

Exposing the structure of a linear operator between the same sets through eigendecomposition
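For a square matrix with a full set of eigenvectors, A X = X Λ gives A = X Λ X^{-1}: in the eigenvector basis the operator only scales each coordinate. A minimal sketch, assuming NumPy and an illustrative matrix with eigenvalues 2 and 5; computing a power of A shows how that structure simplifies work:

```python
import numpy as np

# Illustrative operator from R^2 to R^2 with eigenvalues 2 and 5.
A = np.array([[4.0, 1.0],
              [2.0, 3.0]])

lam, X = np.linalg.eig(A)  # eigenvalues (unordered); eigenvectors as columns of X
Lam = np.diag(lam)
X_inv = np.linalg.inv(X)

# Eigendecomposition: A = X Lambda X^{-1}.
A_rebuilt = X @ Lam @ X_inv

# Powers only act on the diagonal: A^5 = X Lambda^5 X^{-1}.
A5 = X @ np.diag(lam**5) @ X_inv

print(np.allclose(A_rebuilt, A))                         # True
print(np.allclose(A5, np.linalg.matrix_power(A, 5)))     # True
```

For real symmetric matrices, `np.linalg.eigh` is the better-suited routine, since it returns real eigenvalues and orthonormal eigenvectors.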

-

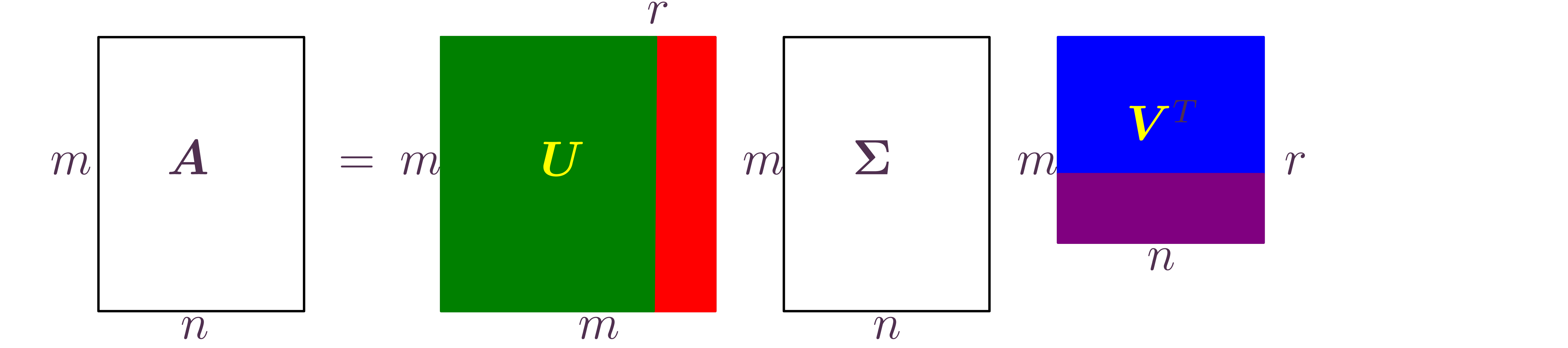

Exposing the structure of a linear operator between different sets through the SVD
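For A mapping R^n to R^m, the SVD A = U Σ V^T pairs an orthonormal basis of the domain (columns of V) with one of the codomain (columns of U) so that A v_i = σ_i u_i. The sketch below assumes NumPy and a 2 by 3 matrix whose singular values are 5 and 3. It also forms the rank-1 truncation, whose 2-norm error equals the next singular value (the Eckart-Young result):

```python
import numpy as np

# Illustrative operator from R^3 to R^2.
A = np.array([[3.0, 2.0,  2.0],
              [2.0, 3.0, -2.0]])

U, s, Vt = np.linalg.svd(A, full_matrices=False)
print(np.round(s, 6))  # [5. 3.]

# Each right singular vector is mapped to a scaled left singular vector.
for i in range(len(s)):
    assert np.allclose(A @ Vt[i], s[i] * U[:, i])

# Best rank-1 approximation in the 2-norm: keep the largest singular triplet.
A1 = s[0] * np.outer(U[:, 0], Vt[0])
print(np.isclose(np.linalg.norm(A - A1, 2), s[1]))  # True
```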

-

Applications: any type of correlation
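One way correlation reduces to linear algebra: after centering each variable and scaling it to unit 2-norm, the correlation matrix is the matrix of inner products Dn^T Dn, and the SVD of Dn exposes the dominant correlated directions (the idea behind principal component analysis). The sketch below assumes NumPy and uses randomly generated hypothetical data, not real measurements:

```python
import numpy as np

# Hypothetical data: y depends on x, z is independent noise.
rng = np.random.default_rng(0)
n = 200
x = rng.standard_normal(n)
y = 2.0 * x + 0.5 * rng.standard_normal(n)
z = rng.standard_normal(n)
D = np.column_stack([x, y, z])  # rows are observations, columns are variables

# Center each column, then scale it to unit 2-norm.
Dc = D - D.mean(axis=0)
Dn = Dc / np.linalg.norm(Dc, axis=0)

# Correlation matrix: inner products of the normalized columns.
C = Dn.T @ Dn

# SVD of the normalized data; the squared singular values are the eigenvalues of C.
U, s, Vt = np.linalg.svd(Dn, full_matrices=False)

print(np.round(C, 2))
print(np.allclose(C, np.corrcoef(D, rowvar=False)))  # True
```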