MATH566 Lesson 21: Romberg quadrature

|

Overview

-

Sequence convergence acceleration

-

Aitken acceleration

-

Composite trapezoid + Aitken acceleration = Romberg quadrature

MATH566 Lesson 21: Romberg quadrature

|

Sequence convergence acceleration

Aitken acceleration

Composite trapezoid + Aitken acceleration = Romberg quadrature

Sequence convergence acceleration

|

Recall definitions that characterize sequence convergence

Definition. converges to with rate and order if

| (1) |

If is known, it can be used to construct a faster converging sequence

Write , and take ratio

| (2) |

If are known, then the above can be solved for

The solution is taken as the term in a faster converging sequence

Since it is based upon assumed behavior, this is called an extrapolation, understood as “going outside realm of known data”

Other extrapolation example: Let interpolate data . for is interpolation, for is an extrapolation.

Aitken acceleration

|

Extrapolation for is known as Aitken acceleration

Obtain

Set , rewritten as a correction to the term

Example: L21.jl shows acceleration of forward finite difference approximation

Composite trapezoid

|

Consider problem of computing by composite trapezoid with uniform partition of interval , ,

Introduce notation to indicate that first, last terms in sum are halved

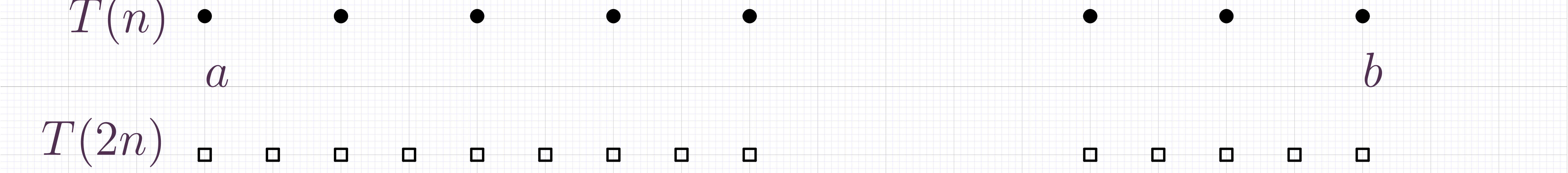

Recall: computational complexity of numerical quadrature given by number evaluations. Consider ,, and let

Many common points: exploit this to reduce evaluations

Recursive composite trapezoid formula

|

Consider to have been computed, e.g.,

Let , express in terms of previously computed

The above allows construction of sequence , .

Recall composite trapezoid error formula

of second-order accuracy

Romberg integration

|

Idea #1: apply extrapolation acceleration to sequence

Take , which should now be faster-convergent, (third-order) sequence

Idea #2: repeatedly apply extrapolation acceleration to sequence

Introduce , = number of subintervals, = number of times extrapolation acceleration has been applied

Romberg relation

Romberg table

|

Organize computations as a table

Choose high enough to ensure resolution, i.e., function variation is captured

Choose moderate , , to avoid loss of precision due to floating point subtraction