|

||

The objective of scientific computation is to solve some problem by constructing a sequence of approximations . The condition suggested by mathematical analysis would be , with . As already seen in the Leibniz series approximation of , acceptable accuracy might only be obtained for large . Since could be an arbitrarily complex mathematical object, such slowly converging approximating sequences are of little practical interest. Scientific computing seeks approximations of the solution with rapidly decreasing error. This change of viewpoint with respect to analysis is embodied in the concepts of rate and order of convergence.

| (1) |

As previously discussed, the above definition is of limited utility since:

The solution is unknown;

The limit is impractical to attain.

Sequences converge faster for higher order or lower rate . A more useful approach is to determine estimates of the rate and order of convergence over some range of iterations that are sufficiently accurate. Rewriting (1) as

suggests introducing the distance between successive iterates , and considering the condition

| (2) |

with , , denotes machine epsilon.

As an example, consider the derivative of at , as given by the calculus definition

and construct a sequence of approximations

Start with a numerical experiment, and compute the sequence .

∴ |

N=24; n=1:N; h=2.0.^(-n); f(x) = exp(x)-1; x0=0; f0=f(x0); |

∴ |

g = (f.(h).-f0) ./ h; d=abs.(g[2:N]-g[1:N-1]); |

∴ |

n1=2; n2=8; [h[n1:n2] g[n1:n2] d[n1:n2]] |

(3)

∴ |

Investigation of the numerical results indicates increasing accuracy in the estimate of , with decreasing step size . The distance between successive approximation sequence terms also decreases. It is more intuitive to analyze convergence behavior through a plot rather than a numerical table.

∴ |

clf(); plot(h[2:N],d,"-o"); xlabel("h"); ylabel("d"); |

∴ |

cd(homedir()*"//courses//MATH661//images"); savefig("L02Fig01a.eps"); |

∴ |

The intent of the rate and order of approximation definitions is to state that the distance between successive terms behaves as

in the hope that this is a Cauchy sequence, and successively closer terms actually indicate convergence. The convergence parameters can be isolated by taking logarithms, leading to a linear dependence

Subtraction of successive terms gives , leading to an average slope estimate

∴ |

c=log.(2,d); lh=log.(2,h[2:N]); clf(); plot(lh,c,"-o"); plot([-10,-20],[-10,-20],"k"); plot([-10,-20],[-10,-30],"g"); |

∴ |

xlabel("log(h)"); ylabel("log(d)"); savefig("L02Fig01b.eps"); |

∴ |

num=c[3:N-1]-c[2:N-2]; den=c[2:N-2]-c[1:N-3]; |

∴ |

q = sum(num ./ den)/(N-3) |

∴ |

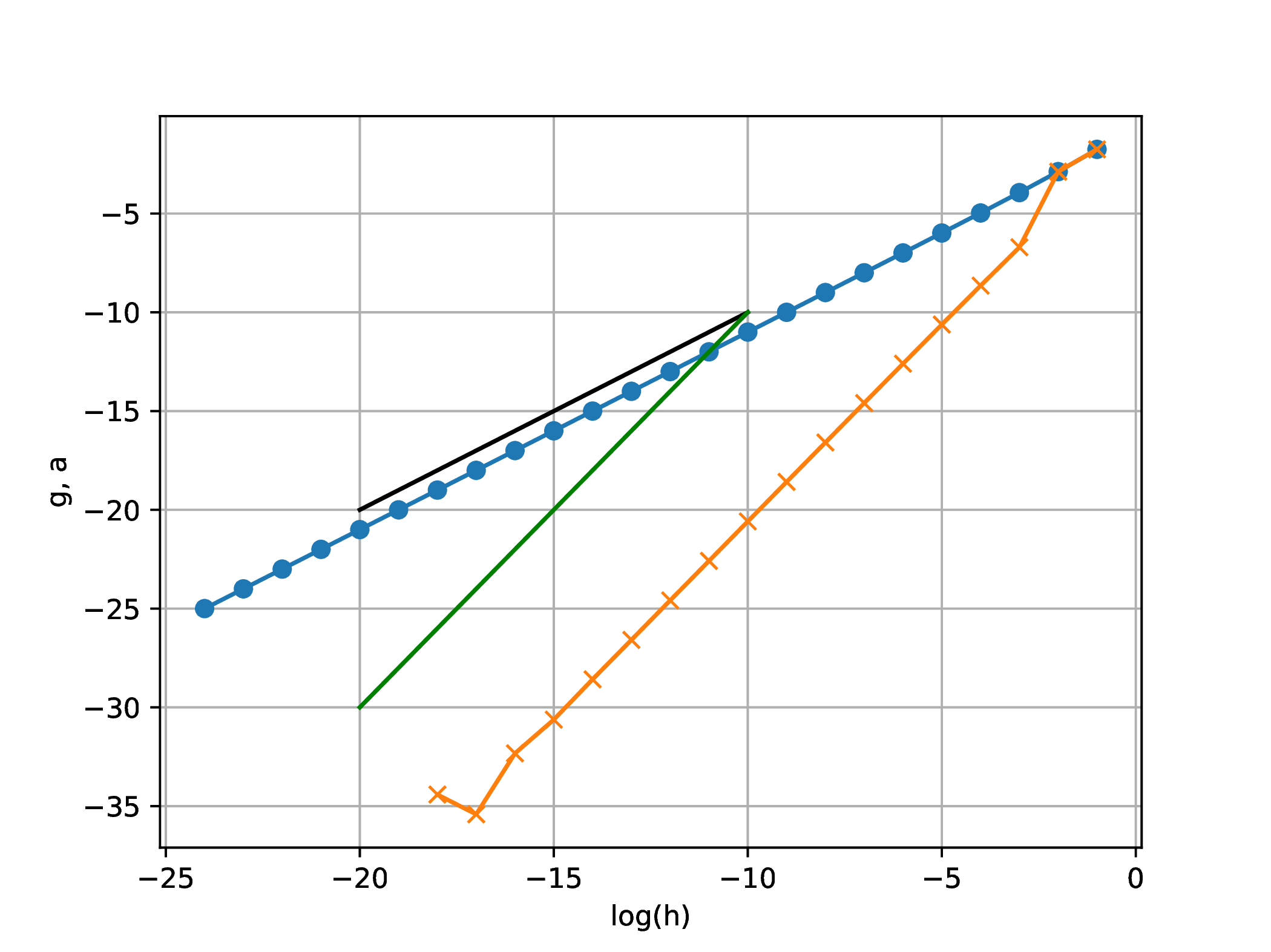

The above computations indicate , known as linear convergence. Figure 1b shows the common practice of depicting guide lines of slope 1 (black) and slope 2 (green) to visually ascertain the rate of convergence. Once the order of approximation is determined, the rate of aproximation is estimated from

∴ |

s=exp(sum(c[2:N-1]-q*c[1:N-2])/(N-2)) |

∴ |

The above results suggest successive approximants become closer spaced according to

Repeat the above experiment at , where , and using a different approximation of the derivative

For this experiment, in addition to the rate and order of approximation , also determine the rate and order of convergence using

∴ |

N=32; n=1:N; h=2.0.^(-n); f(x) = exp(x)-1; x0=log(2); f0=f(x0); g0=exp(x0); |

∴ |

g = (f.(x0 .+ h).-f.(x0 .- h)) ./ (2*h); d=abs.(g[2:N]-g[1:N-1]); |

∴ |

c=log.(2,d); lh=log.(2,h[2:N]); b=abs.(g[2:N].-g0); a=log.(2,b); |

∴ |

plot(lh,c,"-o"); plot(lh,a,"-x"); plot([-10,-20],[-10,-20],"k"); plot([-10,-20],[-10,-30],"g"); |

∴ |

xlabel("log(h)"); ylabel("c, a"); grid("on"); |

∴ |

savefig("L02Fig02.eps"); |

∴ |

Given some approximation sequence , , with solution of problem , it is of interest to construct a more rapidly convergent sequence , . Knowledge of the order of convergence can be used to achieve this purpose by writing

| (4) |

and taking the ratio to obtain

| (5) |

For , the above is a polynomial equation of degree that can be solved to obtain . The heuristic approximation (4) suggests a new approximation of the exact limit obtained by solving (5).

One of the widely used acceleration techniques was published by Aitken (1926, but had been in use since Medieval times) for in which case (5) gives

The above suggests that starting from , the sequence with

might converge faster towards the limit. Investigate by revisiting the numerical experiment on approximation of the derivative of at , using

∴ |

N=24; n=1:N; h=2.0.^(-n); f(x) = exp(x)-1; x0=0; f0=f(x0); |

∴ |

g = (f.(h).-f0) ./ h; a = copy(g); |

∴ |

a[3:N] = g[3:N] - (g[3:N]-g[2:N-1]).^2 ./ (g[3:N]-2*g[2:N-1]+g[1:N-2]); |

∴ |

lh=log.(2,h); d=log.(2,abs.(g.-1)); b=log.(2,abs.(a.-1)); |

∴ |

clf(); plot(lh,d,"-o"); plot(lh,b,"-x"); plot([-10,-20],[-10,-20],"k"); plot([-10,-20],[-10,-30],"g"); xlabel("log(h)"); ylabel("g, a"); grid("on"); savefig("L02Fig03.eps"); |

∴ |

|

Analysis reinforces the above numerical experiment. First-order convergence implies the distance to the limit decreases during iteration as

Several approaches may be used in construction of an approximating sequence . The approaches exemplified below for , can be generalized when is some other type of mathematical object.

Returning to the Leibniz series

the sequence of approximations is with general term

Note that successive terms are obtained by an additive correction

Another example, again giving an approximation of is the Srinivasa Ramanujan series

that can be used to obtain many digits of accuracy with just a few terms.

An example of the generalization of this approach is the Taylor series of a function. For example, the familiar sine power series

is analogous, but with rationals now replaced by monomials, and the limit is now a function . The general term is

and the same type of additive correction appears, this time for functions,

Approximating sequences need not be constructed by adding a correction. Consider the approximation of given by Wallis's product (1656)

for which

Another famous example is the Viète formula from 1593

in which the correction is multiplicative with numerators given by nested radicals. Similar to the symbol for addition, and the symbol for multiplication, the N symbol is used to denote nested radicals

In the case of the Viète formula, , for all .

Yet another alternative is that of continued fractions, with one possible approximation of given by

| (6) |

A notation is introduced for continued fractions using the symbol

Using this notation, the sequences arising in the continued fraction representation of are , chosen as for , and for .

The above correction techniques used arithmetic operations. The repeated radical coefficients in the Viète formula suggest consideration of repeated composition of arbitrary functions to construct the approximant

insect

This is now a general framework, in which all of the preceeding correction approaches can be expressed. For example, the continued fraction formula (6) is recovered through the functions

and evaluation of the composite function at

This general framework is of significant current interest since such composition of nonlinear functions is the basis of deep neural network approximations.

The cornerstone of scientific computing is construction of approximating sequences.

The problem of number approximation leads to definition of concepts and techniques that can be extended to more complex mathematical objects.

A primary objective is the construction of efficient approximating sequences, with efficiency characterized through concepts such as order and speed of convergence.

Though often enforced analytically, limiting behavior of the sequence is of secondary interest. As seen in the approximation of a derivative, the approximating sequence might diverge, yet give satisfactory answers for some range of indices.

Though by far the most widely studied and used approach to approximation, additive corrections are not the only possibility.

Alternative correction techniques include: multiplication, continued fractions, or repeated function composition.

Repeated composition of functions is used in constructing deep neural network approximants.