|

A central interest in scientific computation is to seek simple descriptions of complex objects. A typical situation is specifying an instance of some object of interest through an -tuple with large . Assuming that addition and scaling of such objects can cogently be defined, a vector space is obtained, say over the field of reals with an Euclidean distance, . Examples include for instance recordings of medical data (electroencephalograms, electrocardiograms), sound recordings, or images, for which can easily reach into the millions. A natural question to ask is whether all the real numbers are actually needed to describe the observed objects, or perhaps there is some intrinsic description that requires a much smaller number of descriptive parameters, that still preserves the useful idea of linear combination. The mathematical transcription of this idea is a vector subspace.

The above states a vector subspace must be closed under linear combination, and have the same vector addition and scaling operations as the enclosing vector space. The simplest vector subspace of a vector space is the null subspace that only contains the null element, . In fact any subspace must contain the null element , or otherwise closure would not be verified for the particular linear combination . If , then is said to be a proper subspace of , denoted by .

Setting components equal to zero in the real space defines a proper subspace whose elements can be placed into a one-to-one correspondence with the vectors within . For example, setting component of equal to zero gives that while not a member of , it is in a one-to-one relation with . Dropping the last component of , gives vector , but this is no longer a one-to-one correspondence since for some given , the last component could take any value.

∴ |

m=3; x=[1; 2; 0]; xp=x[1:2] |

(1)

∴ |

y=[1; 2; 3]; yp=y[1:2] |

(2)

∴ |

Vector subspaces arise in decomposition or partitioning of a vector space. The converse, composition of vector spaces , is defined in terms of linear combination. A vector can be obtained as the linear combination

but also as

for some arbitrary . In the first case, is obtained as a unique linear combination of a vector from the set with a vector from . In the second case, there is an infinity of linear combinations of a vector from with another from to the vector . This is captured by a pair of definitions to describe vector space composition.

Since the same scalar field, vector addition, and scaling is used , it is more convenient to refer to vector space sums simply by the sum of the vector sets , or , instead of specifying the full tuplet for each space. This shall be adopted henceforth to simplify the notation.

∴ |

u=[1; 0; 0]; v=[0; 2; 3]; vp=[0; 1; 3]; w=[1; 1; 0]; [u+v vp+w] |

(3)

∴ |

In the previous example, the essential difference between the two ways to express is that , but , and in general if the zero vector is the only common element of two vector spaces then the sum of the vector spaces becomes a direct sum.In practice, the most important procedure to construct direct sums or check when an intersection of two vector subspaces reduces to the zero vector is through an inner product.

The above concept of orthogonality can be extended to other vector subspaces, such as spaces of functions. It can also be extended to other choices of an inner product, in which case the term conjugate vector spaces is sometimes used. The concepts of sum and direct sum of vector spaces used linear combinations of the form . This notion can be extended to arbitrary linear combinations.

Note that for real vector spaces a member of the span of the vectors is the vector obtained from the matrix vector multiplication

From the above, the span is a subset of the co-domain of the linear mapping .

The wide-ranging utility of linear algebra results from a complete characterization of the behavior of a linear mapping between vector spaces , . For some given linear mapping the questions that arise are:

Can any vector within be obtained by evaluation of ?

Is there a single way that a vector within can be obtained by evaluation of ?

Linear mappings between real vector spaces , have been seen to be completely specified by a matrix . It is common to frame the above questions about the behavior of the linear mapping through sets associated with the matrix . To frame an answer to the first question, a set of reachable vectors is first defined.

By definition, the column space is included in the co-domain of the function , , and is readily seen to be a vector subspace of . The question that arises is whether the column space is the entire co-domain that would signify that any vector can be reached by linear combination. If this is not the case then the column space would be a proper subset, , and the question is to determine what part of the co-domain cannot be reached by linear combination of columns of . Consider the orthogonal complement of defined as the set vectors orthogonal to all of the column vectors of , expressed through inner products as

This can be expressed more concisely through the transpose operation

and leads to the definition of a set of vectors for which

Note that the left null space is also a vector subspace of the co-domain of , . The above definitions suggest that both the matrix and its transpose play a role in characterizing the behavior of the linear mapping , so analagous sets are define for the transpose .

Examples. Consider a linear mapping , defined by , with .

For , ,

the column space is the -axis, and the left null space is the -plane.

For , ,

the columns of are colinear, , and the column space is the -axis, and the left null space is the -plane, as before.

For , ,

the column space is the -plane, and the left null space is the -axis.

For , ,

the same , are obtained.

For , ,

since , the orthogonality condition is satisfied by vectors of form , .

The above low dimensional examples are useful to gain initial insight into the significance of the spaces . Further appreciation can be gained by applying the same concepts to processing of images. A gray-scale image of size by pixels can be represented as a vector with components, . Even for a small image with pixels along each direction, the vector would have components. An image can be specified as a linear combination of the columns of the identity matrix

with the gray-level intensity in pixel . Similar to the inclined plane example from §1, an alternative description as a linear combination of another set of vectors might be more relevant. One choice of greater utility for image processing mimics the behavior of the set that extends the second example in §1, would be for

For the simple scalar mapping , , the condition implies either that or . Note that can be understood as defining a zero mapping . Linear mappings between vector spaces, , can exhibit different behavior, and the condtion , might be satisfied for both , and . Analogous to the scalar case, can be understood as defining a zero mapping, .

In vector space , vectors related by a scaling operation, , , are said to be colinear, and are considered to contain redundant data. This can be restated as , from which it results that . Colinearity can be expressed only in terms of vector scaling, but other types of redundancy arise when also considering vector addition as expressed by the span of a vector set. Assuming that , then the strict inclusion relation holds. This strict inclusion expressed in terms of set concepts can be transcribed into an algebraic condition.

Introducing a matrix representation of the vectors

allows restating linear dependence as the existence of a non-zero vector, , such that . Linear dependence can also be written as , or that one cannot deduce from the fact that the linear mapping attains a zero value that the argument itself is zero. The converse of this statement would be that the only way to ensure is for , or , leading to the concept of linear independence.

| (4) |

are , ,…,.

Vector spaces are closed under linear combination, and the span of a vector set defines a vector subspace. If the entire set of vectors can be obtained by a spanning set, , extending by an additional element would be redundant since . This is recognized by the concept of a basis, and also allows leads to a characterization of the size of a vector space by the cardinality of a basis set.

are linearly independent;

.

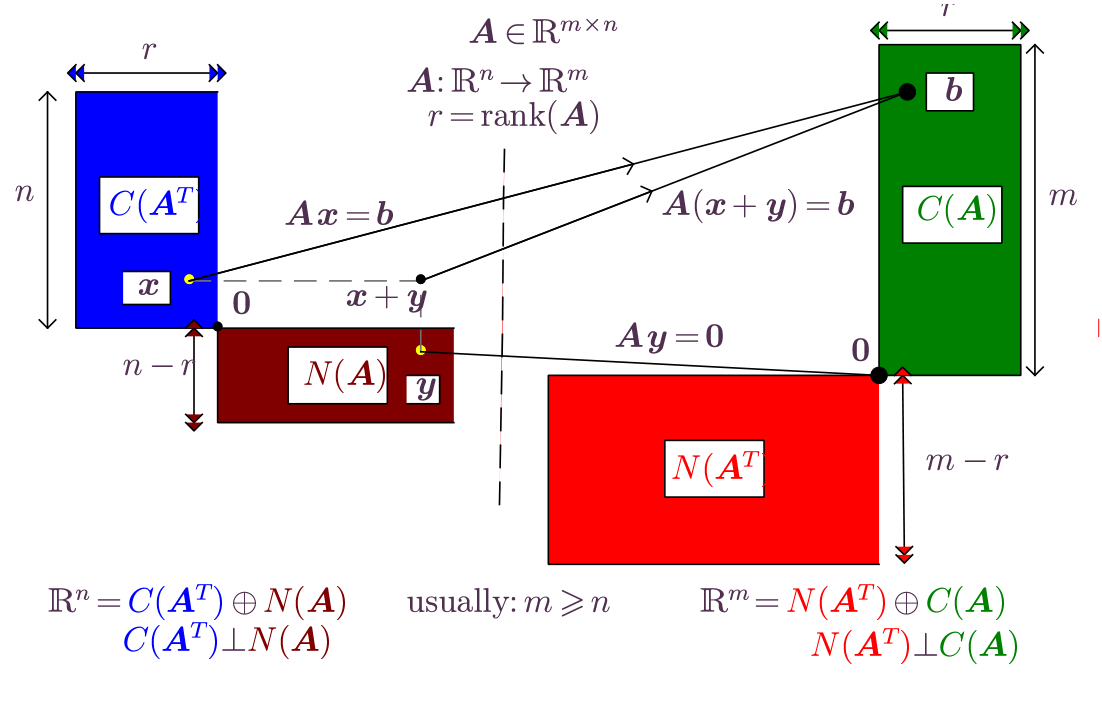

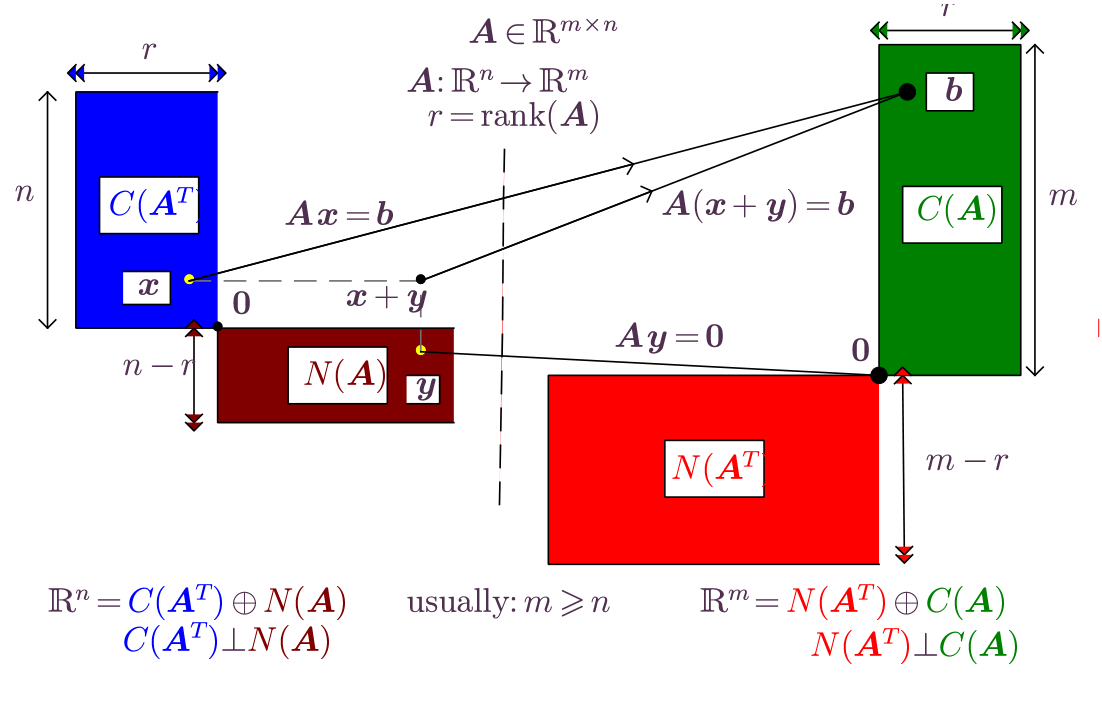

The domain and co-domain of the linear mapping , , are decomposed by the spaces associated with the matrix . When , , the following vector subspaces associated with the matrix have been defined:

the column space of

the row space of

the null space of

the left null space of , or null space of

A partition of a set has been introduced as a collection of subsets such that any given element belongs to only one set in the partition. This is modified when applied to subspaces of a vector space, and a partition of a set of vectors is understood as a collection of subsets such that any vector except belongs to only one member of the partition.

Linear mappings between vector spaces can be represented by matrices with columns that are images of the columns of a basis of

Consider the case of real finite-dimensional domain and co-domain, , in which case ,

Example

leading to

∴ |

n=15; θ=2*π/n; c=cos(θ); s=sin(θ); A=[c -s; s c]; |

∴ |

v=[1; 0]; x=[0]; y=[0]; |

∴ |

for k=0:n global x,y,v,A x=[x v[1] 0]; y=[y v[2] 0] v = A*v end |

∴ |

clf(); plot(x',y'); axis("equal"); |

∴ |

The column space of is a vector subspace of the codomain, , but according to the definition of dimension if there remain non-zero vectors within the codomain that are outside the range of ,

All of the non-zero vectors in , namely the set of vectors orthogonal to all columns in fall into this category. The above considerations can be stated as

The question that arises is whether there remain any non-zero vectors in the codomain that are not part of or . The fundamental theorem of linear algebra states that there no such vectors, that is the orthogonal complement of , and their direct sum covers the entire codomain .

, and

.

Proof. by definition of direct sum, sum of vector subspaces. To prove that , consider . Since and write

and since expression is unique, it results that . Now assume (i),(ii) and establish an unique decomposition. Assume there might be two decompositions of , , , with , Obtain , or . Since and it results that , and , , i.e., the decomposition is unique.

In the vector space the subspaces are said to be orthogonal complements if , and . When , the orthogonal complement of is denoted as , .

, the direct sum of the column space and left null space is the codomain of the mapping

, the direct sum of the row space and null space is the domain of the mapping

and , the column space is orthogonal to the left null space, and they are orthogonal complements of one another,

and , the row space is orthogonal to the null space, and they are orthogonal complements of one another,

|

Consideration of equality between sets arises in proving the above theorem. A standard technique to show set equality , is by double inclusion, . This is shown for the statements giving the decomposition of the codomain . A similar approach can be used to decomposition of .

(column space is orthogonal to left null space).

Proof. Consider arbitrary . By definition of , such that , and by definition of , . Compute , hence for arbitrary , and .

( is the only vector both in and ).

Proof. (By contradiction, reductio ad absurdum). Assume there might be and and . Since , such that . Since , . Note that since , contradicting assumptions. Multiply equality on left by ,

thereby obtaining , using norm property 3. Contradiction.

Proof. (iii) and (iv) have established that are orthogonal complements

By Lemma 2 it results that .

The remainder of the FTLA is established by considering , e.g., since it has been established in (v) that , replacing yields , etc.

Summary.

Vector subspaces are subsets of a vector space closed under linear combination

The simplest vector subspace is

Linear mappings are represented by matrices

Associated with matrix that represents mapping are four fundamental subspaces:

the column space of containing vectors reachable by ,

the left null space of containing vectors orthogonal to columns ,

the row space of

the null space of