|

The goal of scientific computation is the construction of quantitatively verifiable models of the natural world. Scientific computation intertwines mathematics, algorithm formulation, software and hardware engineering with domain-specific knowledge from the physical, life, or social sciences. It is the fusion of these disciplines that imparts a distinct identity to scientific computing, and mathematical concepts must be complemented by practical modeling considerations to achieve successful computational simulation.

Human interpretation of the complexity of the natural world has historically led to parallel developments in formulation of abstract concepts, construction of models, and use of computational aids. This is the case on the long time span from prehistoric formulation of the abstract concept of a number and use of tally sticks, to current efforts based on quantum mechanics and superconducting qubit electronics. A dichtomy arises between the formulation of mathematical concepts asserted purely by reason and practicable predictions of the natural world. The conflicting approaches are reconciled by modeling and approximation. Domain sciences such as physics, biology, or sociology construct the relevant models. Comparison of model predictions to observation can sometimes be carried out through devices as simple as Galileo's inclined plane. The much higher complexity of models of airplane flight, or cancer progression, or activity on social media requires different tools, paradigmatically represented by the modern digital computer. In this more complex setting, scientific computing is instrumental in extracting verifiable predictions from models.

Central to computational prediction is the idea of approximation: finding a simple mathematical representation of some complex model of reality. Archimedes approximated the area of a parabolic segment by that an increasing number of inscribed triangles. Nowadays meteorological observations are input to a digital computer to predict future rainfall. The weather model implemented on the computer contains numerous approximations. Remarkably, both models rely on the same technique of additive approximation: summation of successive corrections to obtain increased accuracy.

One of the goals of this textbook is to highlight how computational methods arise as expressions of just a few approximation approaches. Additive corrections underlie many methods ranging from interpolation to numerical integration, and benefit from a remarkably complete theoretical framework within linear algebra. Alternatively, successive function composition is a distinct approximation idea, with a theoretical framework that is not yet complete, and whose expression leads to the burgeoning field of "machine learning". The process by which a specific approximation approach leads to different types of algorithms is a unifying theme of the presentation, and hopefully not only illuminates well-known algorithms, but also serves as a guide to future research.

The presentation of topics and ideas is at the graduate level of study, but with a focus on unifying ideas rather than detailed techniques. Much of traditional numerical methods and some associated analysis is presented and seen to be related to real analysis and linear algebra. The same theoretical framework can be extended to probability spaces and account for random phenomena. The nonlinear approach to approximation that characterizes artificial neural networks requires a different conceptual framework which has yet to be crystallized. Though numerical methods are prevalent in scientific computation, this text also considers alternatives that should be part of a computational scientist's toolkit such as symbolic, topological, and geometric computation.

Scientific computing is not a theoretical exercise, and successful simulation relies on acquiring the skill set to implement mathematical concepts and approximation techniques into efficient code. This text intersperses method presentation and implementation, mostly in the Julia language. An associated electronic version of his textbook uses the TeXmacs scientific editing platform to enable live documents with embedded computational examples, allowing immediate experimentation with the presented algorithms.

I. Number Approximation 15

Lecture 1: Number Approximation 17

1.Numbers 17

1.1.Number sets 17

1.2.Quantification 17

1.3.Computer number sets 18

2.Approximation 21

2.1.Axiom of floating point arithmetic 21

2.2.Cummulative floating point operations 21

3.Successive approximations 23

3.1.Sequences in 23

3.2.Cauchy sequences 25

3.3.Sequences in 26

Summary. 26

Lecture 2: Approximation techniques 26

1.Rate and order of convergence 26

2.Convergence acceleration 30

2.1.Aitken acceleration 30

3.Approximation correction types 31

3.1.Additive corrections 32

3.2.Multiplicative corrections 32

3.3.Continued fractions 33

3.4.Composite corrections 33

Summary. 34

Lecture 3: Problems and Algorithms 34

1.Mathematical problems 34

1.1.Formalism for defining a mathematical problem 34

1.2.Vector space 36

1.3.Norm 36

1.4.Condition number 36

2.Solution algorithm 37

2.1.Accuracy 37

2.2.Stability 37

Summary. 38

II. Linear Approximation 39

Lecture 4: Linear Combinations 43

1.Finite-dimensional vector spaces 43

1.1.Overview 43

1.2.Real vector space 43

Column vectors. 43

Row vectors. 44

Compatible vectors. 44

1.3.Working with vectors 44

Ranges. 44

2.Linear combinations 45

2.1.Linear combination as a matrix-vector product 45

Linear combination. 45

Matrix-vector product. 45

2.2.Linear algebra problem examples 45

Linear combinations in . 45

Linear combinations in and . 46

Summary. 47

Lecture 5: Linear Functionals and Mappings 48

1.Functions 48

1.1.Relations 48

Homogeneous relations. 48

1.2.Functions 48

1.3.Linear functionals 49

1.4.Linear mappings 50

2.Measurements 50

2.1.Equivalence classes 50

2.2.Norms 51

2.3.Inner product 53

3.Linear mapping composition 54

3.1.Matrix-matrix product 54

Lecture 6: Fundamental Theorem of Linear Algebra 56

1.Vector Subspaces 56

1.1.Definitions 56

1.2.Vector subspaces of a linear mapping 57

2.Linear dependence, vector space basis and dimension 59

2.1.Linear dependence 59

2.2.Basis and dimension 60

2.3.Dimension of matrix spaces 60

3.The FTLA 61

3.1.Partition of linear mapping domain and codomain 61

3.2.FTLA statement 62

Lecture 7: The Singular Value Decomposition 63

1.Mappings as data 63

1.1.Vector spaces of mappings and matrix representations 63

1.2.Measurement of mappings 64

2.The Singular Value Decomposition (SVD) 66

2.1.Orthogonal matrices 66

2.2.Intrinsic basis of a linear mapping 67

3.SVD solution of linear algebra problems 69

Change of coordinates. 70

Best 2-norm approximation. 70

The pseudo-inverse. 70

Lecture 8: Least Squares Approximation 70

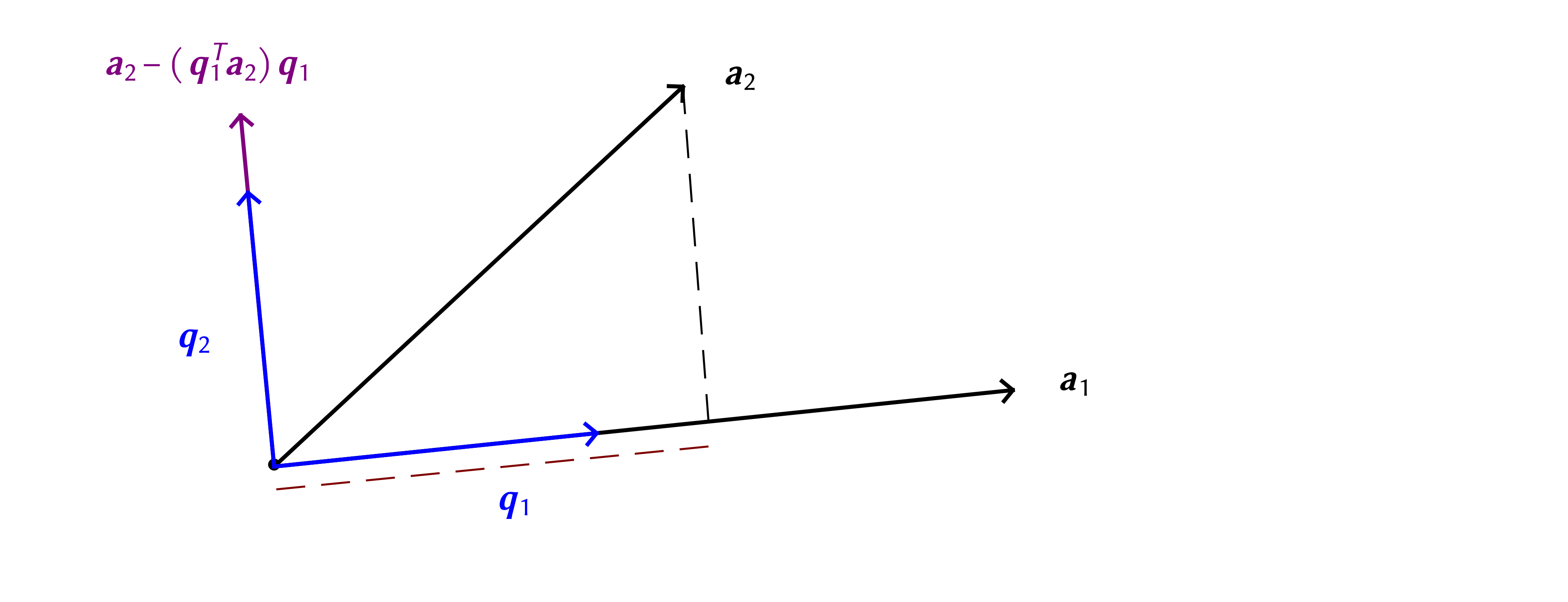

1.Projection 70

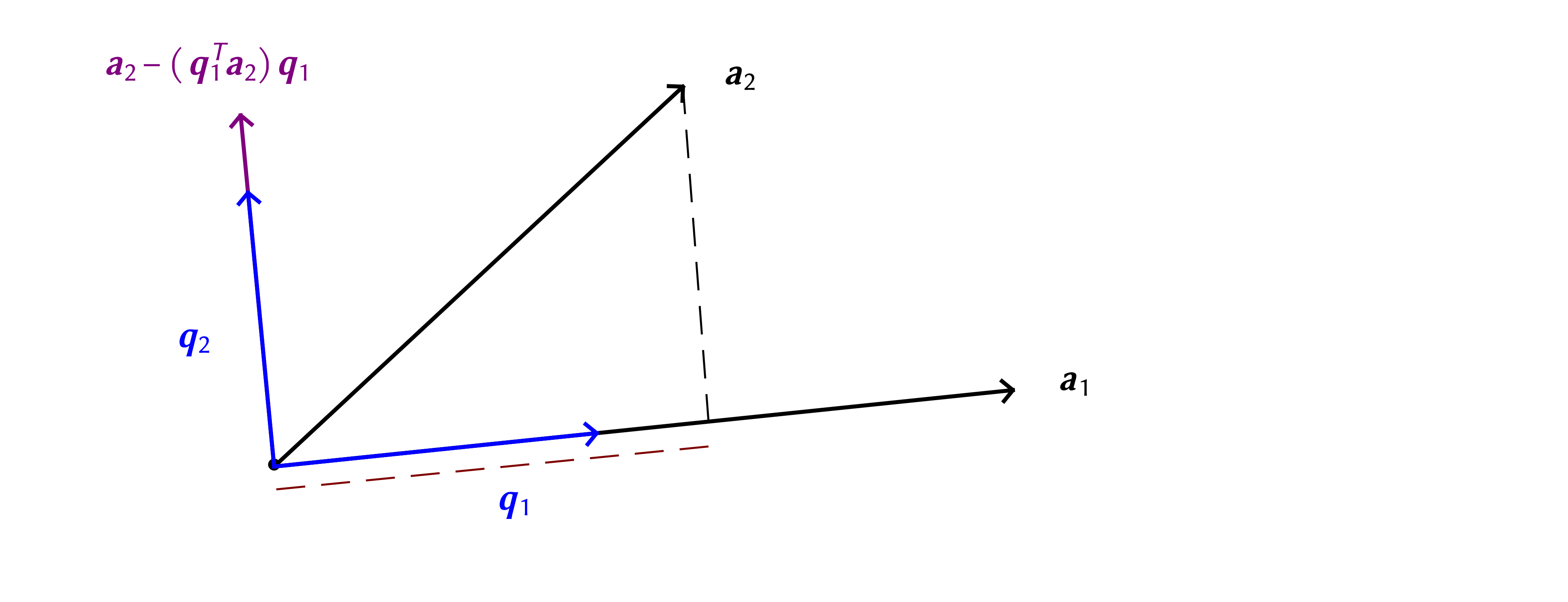

2.Gram-Schmidt 72

3. solution of linear algebra problems 73

3.1.Transformation of coordinates 73

3.2.General orthogonal bases 74

3.3.Least squares 75

Lecture 9: Reduced Systems 78

1.Projection of mappings 78

1.1.Reduced matrices 78

1.2.Dynamical system model reduction 79

2.Reduced bases 80

2.1.Correlation matrices 80

Correlation coefficient. 80

Patterns in data. 81

3.Stochastic systems - Karhunen-Loève theorem 82

Lecture 10: Square Linear Systems 83

1.Gaussian elimination and row echelon reduction 83

2.-factorization 84

2.1.Example for 84

2.2.General case 85

3.Matrix inverse 88

3.1.Gauss-Jordan algorithm 88

Lecture 11: Algorithm Variants 89

1.Determinants 90

1.1.Cross product 93

2.Structured Matrices 93

3.Cholesky factorization of positive definite hermitian matrices 94

3.1.Symmetric matrices, hermitian matrices 94

3.2.Positive-definite matrices 95

3.3.Symmetric factorization of positive-definite hermitian matrices 95

Lecture 12: The Eigenvalue Problem 96

1.Definitions 96

1.1.Coordinate transformations 97

1.2.Paradigmatic eigenvalue problem solutions 97

1.3.Matrix eigendecomposition 99

1.4.Matrix properties from eigenvalues 101

1.5.Matrix eigendecomposition applications 101

2.Computation of the SVD 102

Lecture 13: Power Iterations 102

1.Reduction to triangular form 102

2.Power iteration for real symmetric matrices 103

2.1.The power iteration idea 104

2.2.Rayleigh quotient 104

2.3.Refining the power iteration idea 106

Lecture 14: Interpolation 109

1.Function spaces 109

1.1.Infinite dimensional basis set 109

1.2.Alternatives to the concept of a basis 110

Fourier series. 111

Taylor series. 111

1.3.Common function spaces 111

2.Interpolation 112

2.1.Additive corrections 112

2.2.Polynomial interpolation 112

Monomial form of interpolating polynomial. 112

Lagrange form of interpolating polynomial. 114

Newton form of interpolating polynomial. 119

3.Interpolation error 121

3.1.Error minimization - Chebyshev polynomials 123

3.2.Best polynomial approximant 125

Lecture 15: Function and Derivative Interpolation 125

1.Interpolation in function and derivative values - Hermite interpolation 125

Monomial form of interpolating polynomial. 125

Lagrange form of interpolating polynomial. 127

Newton form of interpolating polynomial. 128

Lecture 16: Piecewise Interpolation 130

1.Splines 130

Constant splines (degree 0). 130

Linear splines (degree 1). 131

Quadratic splines (degree 2). 131

Cubic splines (degree 3). 132

2.-splines 133

Lecture 17: Spectral approximations 136

1.Trigonometric basis 136

1.1.Fourier series - Fast Fourier transform 136

1.2.Fast Fourier transform 137

1.3.Data-sparse matrices from Sturm-Liouville problems 137

2.Wavelet approximations 138

Lecture 18: Best Approximant 140

1.Best approximants 140

2.Two-norm approximants in Hilbert spaces 141

3.Inf-norm approximants 142

Lecture 19: Derivative Approximation 145

1.Numerical differentiation based upon polynomial interpolation 145

Monomial basis. 145

Newton basis (finite difference calculus). 146

2.Taylor series methods 149

3.Numerical differentiation based upon piecewise polynomial interpolation 150

-spline basis. 150

Lecture 20: Quadrature 151

1.Numerical integration 151

Monomial basis. 151

Lagrange basis. 151

Moment method. 153

2.Gauss quadrature 154

Lecture 21: Ordinary Differential Equations - Single Step Methods 156

1.Ordinary differential equations 156

2.Differentiation operator approximation from polynomial interpolants 158

2.1.Euler forward scheme 158

2.2.Backward Euler scheme 158

2.3.Leapfrog scheme 159

Lecture 22: Ordinary Differential Equations - Multistep Methods 159

1.Adams-Bashforth and Adams-Moulton schemes 159

2.Simultaneous operator approximation - linear multistep methods 160

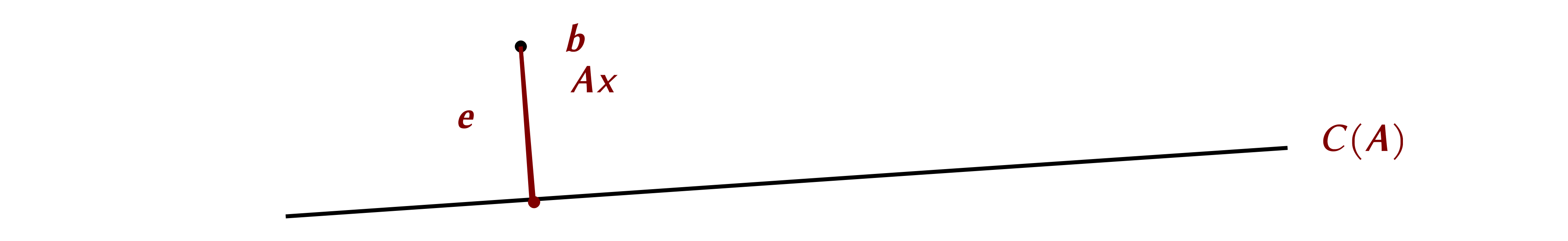

3.Consistency, convergence, stability 161

3.1.Model problem 161

3.2.Boundary locus method 162

Lecture 23: Nonlinear scalar operator equations 165

1.Root-finding algorithms 165

1.1.First-degree polynomial approximants 165

Secant method. 165

Newton-Raphson method. 166

1.2.Second-degree polynomial approximants 167

Halley's method. 167

2.Composite approximations 168

3.Fixed-point iteration 168

Lecture 24: Nonlinear Vector Operator Equations 170

1.Multivariate root-finding algorithms 170

2.Quasi-Newton methods 171

3.Gradient descent methods 172

Lecture 25: Introduction to nonlinear approximation 172

1.Historical analogue - operator calculus 173

1.1.Heavisde study of telgraphist equation 173

1.2.Development of mathematical theory of operator calculus 173

2.Basic approximation theory 174

2.1.Linear approximation example 174

2.2.Non-Linear approximation example 175

3.Nonlinear approximation by composition 176

Lecture 26: Data-Driven Nonlinear Approximation 176

1.Greedy bases 176

2.Artificial neural networks 176

3.Stochastic gradient descent 176

Lecture 27: Differential Conservation Laws 177

1.The relevance of physics for scientific computation 177

2.Conservation laws 179

Banking example. 179

Local formulations. 181

3.Special forms of conservation laws 181

Second law of dynamics. 181

Advection equation. 181

Diffusion equation. 182

Combined effects. 182

Steady-state transport. 182

Separation of variables. 183

Lecture 28: Linear Operator Splitting 184

1.Poisson equation discretization 184

2.Matrix splitting iteration 186

3.Convergence analysis 186

Lecture 29: Gradient Descent Methods 191

1.Spatially dependent diffusivity 191

2.Steepest descent 193

3.Conjugate gradient 195

Lecture 30: Irregular Sparsity 196

1.Finite element discretization 196

2.Krylov methods, Arnoldi iteration 199

3.GMRES 201

Lecture 31: Incomplete Operator Decomposition 201

1.Finite difference Helmholtz equation 201

2.Arnoldi iteration 202

3.Lanczos iteration 204

Lecture 32: Bases for Incomplete Decomposition 205

1.Preconditioning 205

2.Multigrid 206

3.Random multigrid and stochastic descent 208

Lecture 33: Multiple Operators 209

1.Semi-discretization 209

2.Method of lines 210

3.Implicit-explicit methods 211

Lecture 34: Operator-Induced Bases 212

1.Spectral methods 212

2.Quasi-spectral methods 213

3.Fast transforms 215

Lecture 35: Nonlinear Operators 216

1.Advection equation 216

2.Convection equation 217

3.Discontinuous solutions 219

III. Nonlinear Approximation 223

Most scientific disciplines introduce an idea of the amount of some entity or property of interest. Furthermore, the amount is usually combined with the concept of a number, an abstraction of the observation that the two sets {Mary, Jane, Tom} and {apple, plum, cherry} seem quite different, but we can match one distinct person to one distinct fruit as in {Maryplum, Janeapple, Tomcherry}. In contrast, we cannot do the same matching of distinct persons to a distinct color from the set {red, green}, and one of the colors must be shared between two persons. Formal definition of the concept of a number from the above observations is surprisingly difficult since it would be self-referential due to the apperance of the numbers “one” and “two”. Leaving this aside, the key concept is that of quantity of some property of interest that is expressed through a number.

Several types of numbers have been introduced in mathematics to express different types of quantities, and the following will be used throughout this text:

The set of natural numbers, , infinite and countable, ;

The set of integers, , infinite and countable;

The set of rational numbers , infinite and countable;

The set of real numbers, infinite, not countable, can be ordered;

The set of complex numbers, , infinite, not countable, cannot be ordered.

These sets of numbers form a hierarchy, with . The size of a set of numbers is an important aspect of its utility in describing natural phenomena. The set has three elements, and its size is defined by the cardinal number, . The sets have an infinite number of elements, but the relation

defines a one-to-one correspondence between and , so these sets are of the same size denoted by the transfinite number (aleph-zero). The rationals can also be placed into a one-to-one correspondence with , hence

In contrast there is no one-to-one mapping of the reals to the naturals, and the cardinality of the reals is (Fraktur-script c). Georg Cantor established set theory and introduced a proof technique known as the diagonal argument to show that . Intuitively, there are exponentially more reals than naturals.

One of the foundations of the scientific method is quantification, ascribing numbers to phenomena of interest. To exemplify the utility of different types of number to describe natural phenomena, consider common salt (sodium chloride, Fig. ) which has the chemical formula NaCl with the sodium ions () and chloride ions () spatially organized in a cubic lattice, with an edge length Å (1 Å = m) between atoms of the same type. Setting the origin of a Cartesian coordinate system at a sodium atom, the position of some atom within the lattice is

Sodium atoms are found positions where is even, while chloride atoms are found at positions where is odd. The Cartesian coordinates describe some arbitrary position in space, which is conceptualized as a continuum and placed into one-to-one correspondence with . A particular lattice position can be specified simply through a label consisting of three integers . The position can be recovered through a scaling operation

and the number that modifies the length scale from to , it is called a scalar.

A computer has a finite amount of memory, hence cannot represent all numbers, but rather subsets of the above number sets. Current digital computers internally use numbers represented through binary digits, or bits. Many computer number types are defined for specific purposes, and are often encountered in applications such as image representation or digital data acquisition. Here are the main types.

The number types uint8, uint16, uint32, uint64 represent subsets of the natural numbers (unsigned integers) using 8, 16, 32, 64 bits respectively. An unsigned integer with bits can store a natural number in the range from to . Two arbitrary natural numbers, written as can be added and will give another natural number, . In contrast, addition of computer unsigned integers is only defined within the specific range to . If , the result might be displayed as the maximum possible value or as .

The number types int8, int16, int32, int64 represent subsets of the integers. One bit is used to store the sign of the number, so the subset of that can be represented is from to .

Computers approximate the real numbers through the set of floating point numbers. Floating point numbers that use bits are known as single precision, while those that use are double precision. A floating point number is stored internally as where , are bits within the mantissa of length , and , are bits within the exponent, along with signs for each. The default number type is usually double precision, more concisely referred to Float64. Common irrational constants such as , are predefined as irrationals and casting to Float64 or Float32 gives floating point approximation. Unicode notation is recognized. Specification of a decimal point indicates a floating point number; its absence indicates an integer.

The approximation of the reals by the floats is characterized by: floatmax(), the largest float, floatmin the smallest positive float, and eps() known as machine epsilon. Machine epsilon highlights the differences between floating point and real numbers since it may be informally defined as the smallest number of form that satisfies . If of course implies , but floating points exhibit “granularity”, in the sense that over a unit interval there are small steps that are indistinguishable from zero due to the finite number of bits available for a float leading to being indistiguishable from 1, and the apparently endless loop shown below actually terminates.

The granularity of double precision expressed by machine epsilon is sufficient to represent natural phenomena, and floating point errors can usually be kept under control,

Keep in mind that perfect accuracy is a mathematical abstraction, not encountered in nature. In fields such as sociology or psychology 3 digits of accuracy are excellent, in mechanical engineering this might increase to 6 digits, or in electronic engineering to 8 digits. The most precisely known physical constant is the Rydberg constant known to 12 digits, hence a mathematical statement such as

is unlikely to have any real significance, while

is much more informative.

Within the reals certain operations are undefined such as . Special float constants are defined to handle such situations: Inf is a float meant to represent infinity, and NaN (“not a number”) is meant to represent an undefinable result of an arithmetic operation.

Complex numbers are specified by two reals, in Cartesian form as , or in polar form as , , . The computer type complex is similarly defined from two floats and the additional constant I is defined to represent . Functions are available to obtain the real and imaginary parts within the Cartesian form, or the absolute value and argument of the polar form.

The reals form an algebraic structure known as a field . The set of floats together with floating point addition and multiplication are denoted as . Operations with floats do not have the same properties as the reals, but are assumed to have a relative error bounded by machine epsilon

where is the floating point representation of . The above is restated

and accepted as an axiom for use in error analysis involving floating point arithmetic. Computer number sets are a first example of approximation: replacing some complicated object with a simpler one. It is one of the key mathematical ideas studied throughout this text.

Care should be exercised about the cummulative effect of many floating point operations. An informative example is offered by Zeno's paradox of motion, that purports that fleet-footed Achilles could never overcome an initial head start of given to the lethargic Tortoise since, as stated by Aristotle:

The above is often formulated by considering that the first half of the initial head start must be overcome, then another half and so on. The distance traversed after such steps is

Calculus resolves the paradox by rigorous definition of the limit and definition of velocity as , , .

Undertake a numerical invesigation and consider two scenarios, with increasing or decreasing step sizes

In associativity ensures .

Irrespective of the value for , in floating point arithmetic. Recall however that computers use binary representations internally, so division by powers of two might have unique features (indeed, it corresponds to a bit shift operation). Try subdividing the head start by a different number, perhaps to get an “irrational” numerical investigation of Zeno's paradox of motion. Define now the distance traversed by step sizes that are scaled by starting from one to , traversed by step sizes scaled by starting from

Again, in the reals the above two expressions are equal, , but this is no longer verified computationally for all , not even within a tolerance of machine epsilon.

This example gives a first glimpse of the steps that need to be carried out in addition to mathematical analysis to fully characterize an algorithm. Since , a natural question is whether one is more accurate than the other. For some arbitrary ratio , the exact value is known

and can be used to evaluate the errors , .

Carrying out the computations leads to results in Fig. .

Note that errors are about the size of machine epsilon for , but are zero for , it seems that the summation ordering gives the exact value. A bit of reflection reinforces this interpretation: first adding small quantities allows for carry over digits to be accounted for.

This example is instructive beyond the immediate adage of “add small quantities first”. It highlights the blend of empirical and analytical approaches that is prevalent in scientific computing.

Single values given by some algorithm are of little value in the practice of scientific computing. The main goal is the construction of a sequence of approximations that enables assessment of the quality of an approximation. Recall from calculus that converges to if can be made as small as desired for all beyond some threshold. In precise mathematical language this is stated through:

Though it might seem natural to require a sequence of approximations to converge to an exact solution

such a condition is problematic on multiple counts:

the exact solution is rarely known;

the best approximation might be achieved for some finite range , rather than in the limit.

Both situations arise when approximating numbers and serve as useful reference points when considering approximation other types of mathematical objects such as functions. For example, the number is readily defined in geometric terms as the ratio of circle circumference to diameter, but can only be approximately expressed by a rational number, e.g., . The exact value of is only obtained as the limit of an infinite number of operations with rationals. There are many such infinite representations, one of which is the Leibniz series

No finite term

of the above Leibniz series equals , i.e.,

Rather, the Leibniz series should be understood as an algorithm, i.e., a sequence of elementary operations that leads to succesively more accurate approximations of

Complex analysis provides a convergence proof starting from properties of the function

For the sequence of partial sums of a geometric series converges uniformly

and can be integrated term by term to give

This elegant result does not address however the points raised above: if were not known, how could the convergence of the sequence be assessed? A simple numerical experiment indicates that the familiar value of is only recovered for large , with 10000 terms insufficient to ensure five significant digits.

Instead of evaluating distance to an unknown limit, as in , one could evaluate if terms get closer to one another as in , a condition that can readily be checked in an algorithm.

Note that the distance between any two terms after the threshold must be smaller than an arbitrary tolerance . For example the sequence is not a Cauchy sequence even though the distance between successive terms can be made arbitrarily small

Verification of decreasing successive distance is therefore a necessary but not sufficient condition to assess whether a sequence is a Cauchy sequence. Furthermore, the distance between successive iterates is not necessarily an indication of the distance to the limit. Reprising the Leibniz example, successive terms can be further apart than the distance to the limit, though terms separated by 2 are closer than the distance to the limit (a consequence of the alternating Leibniz series signs)

Another question is whether a Cauchy sequence is itself convergent. For sequences of reals this is true, but the Leibniz sequence furnishes a counterexample since it contains rationals and converges to an irrational. Such aspects that arise in number approximation sequences become even more important when considering approximation sequences composed of vectors or functions.

Consideration of floating point arithmetic indicates adaptation of the mathematical concept of convergence is required in scientific computation. Recall that machine epsilon is that largest number such that is true, and characterizes the granularity of the floating point system. A reasonable adaptation of the notion of convergence might be:

What emerges is the need to consider a degree of uncertainty in an approximating sequence. If the uncertainty can be bounded to the intrinsic granularity of the number system, a good approximation is obtained.

The problem of approximating numbers uncovers generic aspects of scientific computing:

different models of some phenomenon are possible and it is necessary to establish correspondence between models and of a model to theory;

scientific computation seeks to establish viable approximation techniques for the mathematical objects that arise in models;

correspondence of a model to theory is established through properties of approximation sequences, not single results of a particular approximation technique;

physical limitations of computer memory require revisiting of mathematical concepts to characterize approximation sequence behavior, and impart a stochastic aspect to approximation techniques;

computational experiments are a key aspect, giving an empirical aspect to scientific computing that is not found in deductive or analytical mathematics.

The objective of scientific computation is to solve some problem by constructing a sequence of approximations . The condition suggested by mathematical analysis would be , with . As already seen in the Leibniz series approximation of , acceptable accuracy might only be obtained for large . Since could be an arbitrarily complex mathematical object, such slowly converging approximating sequences are of little practical interest. Scientific computing seeks approximations of the solution with rapidly decreasing error. This change of viewpoint with respect to analysis is embodied in the concepts of rate and order of convergence.

As previously discussed, the above definition is of limited utility since:

The solution is unknown;

The limit is impractical to attain.

Sequences converge faster for higher order or lower rate . A more useful approach is to determine estimates of the rate and order of convergence over some range of iterations that are sufficiently accurate. Rewriting () as

suggests introducing the distance between successive iterates , and considering the condition

As an example, consider the derivative of at , as given by the calculus definition

and construct a sequence of approximations

Start with a numerical experiment, and compute the sequence .

Investigation of the numerical results indicates increasing accuracy in the estimate of , with decreasing step size . The distance between successive approximation sequence terms also decreases. It is more intuitive to analyze convergence behavior through a plot rather than a numerical table.

The intent of the rate and order of approximation definitions is to state that the distance between successive terms behaves as

in the hope that this is a Cauchy sequence, and successively closer terms actually indicate convergence. The convergence parameters can be isolated by taking logarithms, leading to a linear dependence

Subtraction of successive terms gives , leading to an average slope estimate

The above computations indicate , known as linear convergence. Figure b shows the common practice of depicting guide lines of slope 1 (black) and slope 2 (green) to visually ascertain the rate of convergence. Once the order of approximation is determined, the rate of aproximation is estimated from

The above results suggest successive approximants become closer spaced according to

Repeat the above experiment at , where , and using a different approximation of the derivative

For this experiment, in addition to the rate and order of approximation , also determine the rate and order of convergence using

Given some approximation sequence , , with solution of problem , it is of interest to construct a more rapidly convergent sequence , . Knowledge of the order of convergence can be used to achieve this purpose by writing

and taking the ratio to obtain

For , the above is a polynomial equation of degree that can be solved to obtain . The heuristic approximation () suggests a new approximation of the exact limit obtained by solving ().

One of the widely used acceleration techniques was published by Aitken (1926, but had been in use since Medieval times) for in which case () gives

The above suggests that starting from , the sequence with

might converge faster towards the limit. Investigate by revisiting the numerical experiment on approximation of the derivative of at , using

Analysis reinforces the above numerical experiment. First-order convergence implies the distance to the limit decreases during iteration as

Several approaches may be used in construction of an approximating sequence . The approaches exemplified below for , can be generalized when is some other type of mathematical object.

Returning to the Leibniz series

the sequence of approximations is with general term

Note that successive terms are obtained by an additive correction

Another example, again giving an approximation of is the Srinivasa Ramanujan series

that can be used to obtain many digits of accuracy with just a few terms.

An example of the generalization of this approach is the Taylor series of a function. For example, the familiar sine power series

is analogous, but with rationals now replaced by monomials, and the limit is now a function . The general term is

and the same type of additive correction appears, this time for functions,

Approximating sequences need not be constructed by adding a correction. Consider the approximation of given by Wallis's product (1656)

for which

Another famous example is the Viète formula from 1593

in which the correction is multiplicative with numerators given by nested radicals. Similar to the symbol for addition, and the symbol for multiplication, the N symbol is used to denote nested radicals

In the case of the Viète formula, , for all .

Yet another alternative is that of continued fractions, with one possible approximation of given by

A notation is introduced for continued fractions using the symbol

Using this notation, the sequences arising in the continued fraction representation of are , chosen as for , and for .

The above correction techniques used arithmetic operations. The repeated radical coefficients in the Viète formula suggest consideration of repeated composition of arbitrary functions to construct the approximant

insect

This is now a general framework, in which all of the preceeding correction approaches can be expressed. For example, the continued fraction formula () is recovered through the functions

and evaluation of the composite function at

This general framework is of significant current interest since such composition of nonlinear functions is the basis of deep neural network approximations.

The cornerstone of scientific computing is construction of approximating sequences.

The problem of number approximation leads to definition of concepts and techniques that can be extended to more complex mathematical objects.

A primary objective is the construction of efficient approximating sequences, with efficiency characterized through concepts such as order and speed of convergence.

Though often enforced analytically, limiting behavior of the sequence is of secondary interest. As seen in the approximation of a derivative, the approximating sequence might diverge, yet give satisfactory answers for some range of indices.

Though by far the most widely studied and used approach to approximation, additive corrections are not the only possibility.

Alternative correction techniques include: multiplication, continued fractions, or repeated function composition.

Repeated composition of functions is used in constructing deep neural network approximants.

In general, mathematical problems can be thought of as mappings from some set of inputs to some set of outputs . The mapping is often carried out through a function , i.e., a procedure that associates a single to some input

Examples:

Note that the specification of a mathematical problem requires definition of the triplet .

Once a problem is specified, the natural question is to ascertain whether a solution is possible. Generally, simple affirmation of the existence of a solution is the objective of some field of mathematics (e.g., analysis, functional analysis). From the point of view of science, an essential question is not only existence but also:

how does the output change if changes?

what are the constructive methods to approximate ?

The above general definition of a mathematical problem must be refined in order to assess magnitude of changes in inputs or outputs. A first step is to introduce some structure in the input and output sets . Using these sets, vector spaces are constructed, consisting of a set of vectors , a set of scalars , an addition operation , and a scaling operation . The vector space is often referred to simply by its set of vectors , when the set of scalars, addition operation, and scaling operation are self-evident in context.

Formally,a vector space is defined by a set whose elements

satisfy certain scaling and addition properties, denoted all together

by the 4-tuple . The first element the 4-tuple is a set whose elements

are called vectors. The second element is a set of scalars,

and the third is the vector addition operation. The last is the

scaling operation, seen as multiplication of a vector by a scalar.

The vector addition and scaling operations must satisfy rules

suggested by positions or forces in three-dimensional space, which are

listed in Table . In particular, a vector space requires definition of

two distinguished elements: the zero vector , and the identity scalar

element .

A first step is quantification of the changes in input or output, assumed to have the structure of a vector space, .

The ratio of changes in output to changes in input is the absolute condition number of a problem.

To avoid influence of choice of reference unit, the relative condition number is also introduced.

In scientific computation, the mathematical problem is approximated by an algorithm , in which is assumed to be computable, and are vector spaces that approximate . As a first step in characterizing how well the algorithm approximates the problem , consider that and , i.e., there is no error in representation of the domain and codomain.

The above condition is also denoted as

Algorithms should not catastrophically increase input errors. This is quantified in the concept of stability.

The above states that the relative error in the output should be on the order of machine epsilon if the relative in the input is of order machine epsilon. Note that the constants in the order statements are usually different from one another, ,

Backward stability asserts that the result of the algorithm on exact input data is the same as the solution to the mathematical problem for nearby data (with distance on order of machine epsilon).

Mathematical problems are stated as functions from a set of inputs to a set of outputs ,

The difficulty of a mathematical problem is assessed by measuring the effect of changes in input

To quantify changes in inputs and outputs, the framework of a normed vector space is introduced

The ratio of norm of output change to norm of input change is the absolute condition number of a problem

Algorithms are constructive approximations of mathemtical problems . The accuracy of an algorithm is assessed by comparison of the algorithm output to that of the mathematical problem through absolute error and relative error

The tendency of an algorithm to amplify pertubations of input is assessed by the concept of stability

Algorithms that do not amplify relative changes in input of the size of machine precision are forward stable.

Algorithms that compute the exact result of a mathematical problem for changes in put of the size of machine precision are backward stable.

The definition from Table 1 of a vector space reflects everyday

experience with vectors in Euclidean geometry, and it is common to

refer to such vectors by descriptions in a Cartesian coordinate

system. For example, a position vector within the plane can be

referred through the pair of coordinates . This intuitive

understanding can be made precise through the definition of a vector

space , called the real 2-space. Vectors within are elements of ,

meaning that a vector is specified through two real numbers, .

Addition of two vectors, , is defined by addition of coordinates .

Scaling by scalar is defined by . Similarly, consideration of

position vectors in three-dimensional space leads to the definition of

the , or more generally a real -space , , .

Note however that there is no mention of coordinates in the definition of a vector space as can be seen from the list of properties in Table 1. The intent of such a definition is to highlight that besides position vectors, many other mathematical objects follow the same rules. As an example, consider the set of all continuous functions , with function addition defined by the sum at each argument , and scaling by defined as . Read this as: “given two continuous functions and , the function is defined by stating that its value for argument is the sum of the two real numbers and ”. Similarly: “given a continuous function , the function is defined by stating that its value for argument is the product of the real numbers and ”. Under such definitions is a vector space, but quite different from . Nonetheless, the fact that both and are vector spaces can be used to obtain insight into the behavior of continuous functions from Euclidean vectors, and vice versa. This correspondence principle between discrete and continuous formulations is a recurring theme in scientific computation.

Since the real spaces play such an important role in themselves and as a guide to other vector spaces, familiarity with vector operations in is necessary to fully appreciate the utility of linear algebra to a wide range of applications. Following the usage in geometry and physics, the real numbers that specify a vector are called the components of . The one-to-one correspondence between a vector and its components , is by convention taken to define an equality relationship,

with the components arranged vertically and enclosed in square brackets. Given two vectors , and a scalar , vector addition and scaling are defined in by real number addition and multiplication of components

The vector space is defined using the real numbers as the set of scalars, and constructing vectors by grouping together scalars, but this approach can be extended to any set of scalars , leading to the definition of the vector spaces . These will often be referred to as -vector space of scalars, signifying that the set of vectors is .

To aid in visual recognition of vectors, the following notation conventions are introduced:

vectors are denoted by lower-case bold Latin letters: ;

scalars are denoted by normal face Latin or Greek letters: ;

the components of a vector are denoted by the corresponding normal face with subscripts as in equation ();

related sets of vectors are denoted by indexed bold Latin letters: .

Instead of the vertical placement or components into one column, the components of could have been placed horizontally in one row , that contains the same data, differently organized. By convention vertical placement of vector components is the preferred organization, and shall denote a column vector henceforth. A transpose operation denoted by a superscript is introduced to relate the two representations

and is the notation used to denote a row vector.

Addition of real vectors defines another vector . The components of are the sums of the corresponding components of and , , for . Addition of vectors with different number of components is not defined, and attempting to add such vectors produces an error. Such vectors with different number of components are called incompatible, while vectors with the same number of components are said to be compatible. Scaling of by defines a vector , whose components are , for .

The vectors used in applications usually have a large number of components, , and it is important to become proficient in their manipulation. Previous examples defined vectors by explicit listing of their components. This is impractical for large , and support is provided for automated generation for often-encountered situations. First, observe that Table 1 mentions one distinguished vector, the zero element that is a member of any vector space . The zero vector of a real vector space is a column vector with components, all of which are zero, and a mathematical convention for specifying this vector is . This notation specifies that transpose of the zero vector is the row vector with zero components, also written through explicit indexing of each component as , for . Keep in mind that the zero vector and the zero scalar are different mathematical objects.

The ellipsis symbol in the mathematical notation is transcribed in Julia by the notion of a range, with 1:m denoting all the integers starting from to , organized as a row vector. The notation is extended to allow for strides different from one, and the mathematical ellipsis is denoted as m:-1:1. In general r:s:t denotes the set of numbers with , and real numbers and a natural number, , . If there is no natural number such that , an empty vector with no components is returned.

The expression expresses the idea of scaling vectors within a set and subsequent addition to form a new vector . The matrix groups these vectors together in a single entity, and the scaling factors are the components of the vector . To bring all these concepts together it is natural to consider the notation

as a generalization of the scalar expression . It is clear what the operation should signify: it should capture the vector scaling and subsequent vector addition . A specific meaning is now ascribed to by identifying two definitions to one another.

Repeateadly stating “vector scaling and subsequent vector addition” is unwieldy, so a special term is introduced for some given set of vectors .

Similar to the grouping of unit vectors into the identity matrix , a more concise way of referring to arbitrary vectors from the same vector space is the matrix . Combining these observations leads to the definition of a matrix-vector product.

Consider a simple example that leads to a common linear algebra problem: decomposition of forces in the plane along two directions. Suppose a force is given in terms of components along the Cartesian -axes, , as expressed by the matrix-vector multiplication Note that the same force could be obtained by linear combination of other vectors, for instance the normal and tangential components of the force applied on an inclined plane with angle , , as in Figure . This defines an alternate reference system for the problem. The unit vectors along these directions are

and can be combined into a matrix . The value of the components are the scaling factors and can be combined into a vector . The same force must result irrespective of whether its components are given along the Cartesian axes or the inclined plane directions leading to the equality

Interpret equation () to state that the vector could be obtained either as a linear combination of , , or as a linear combination of the columns of , . Of course the simpler description seems to be for which the components are already known. But this is only due to an arbitrary choice made by a human observer to define the force in terms of horizontal and vertical components. The problem itself suggests that the tangential and normal components are more relevant; for instance a friction force would be evaluated as a scaling of the normal force.

Linear combinations in a real space can suggest properties or approximations of more complex objects such as continuous functions. Let denote the vector space of continuous functions that are periodic on the interval , . Recall that vector addition is defined by , and scaling by , for , . Familiar functions within this vector space are , with , and these can be recognized to intrinsically represent periodicity on , a role analogous to the normal and tangential directions in the inclined plane example. Define now another periodic function by repeating the values from the interval on all intervals , for . The function is not given in terms of the “naturally” periodic functions , , but could it thus be expressed? This can be stated as seeking a linear combination as studied in Fourier analysis. The coefficients could be determined from an analytical formula involving calculus operations but we'll seek an approximation using a linear combination of terms

Organize this as a matrix vector product , with

The idea is to sample the column vectors of at the components of the vector , , , . Let , and , denote the so-sampled functions leading to the definition of a vector and a matrix . There are coefficients available to scale the column vectors of , and has components. For it is generally not possible to find such that would exactly equal , but as seen later the condition to be as close as possible to leads to a well defined solution procedure. This is known as a least squares problem and is automatically applied in the x=A\b instruction when the matrix A is not square. As seen in the following numerical experiment and Figure , the approximation is excellent and the information conveyed by samples of is now much more efficiently stored in the form chosen for the columns of and the scaling coefficients that are the components of .

A widely used framework for constructing additive approximations is the vector space algebraic space structure in which scaling and addition operations are defined

In a vector space linear combinations are used to construct more complicated objects from simpler ones

A general procedure to relate input values from set to output values from set is to first construct the set of all possible instances of and , which is the Cartesian product of with , denoted as . Usually only some associations of inputs to outputs are of interest leading to the following definition.

Associating an output to an input is also useful, leading to the definition of an inverse relation as , . Note that an inverse exists for any relation, and the inverse of an inverse is the original relation, .

Many types of relations are defined in mathematics and encountered in linear algebra. A commonly encountered type of relationship is from a set onto itself, known as a homogeneous relation. For homogeneous relations , it is common to replace the set membership notation to state that is in relationship with , with a binary operator notation . Familiar examples include the equality and less than relationships between reals, , in which is replaced by , and is replaced by . The equality relationship is its own inverse, and the inverse of the less than relationship is the greater than relation , , . Homogeneous relations are classified according to the following criteria.

Relation is reflexive if for any . The equality relation is reflexive, , the less than relation is not, .

Relation is symmetric if implies that , . The equality relation is symmetric, , the less than relation is not, .

Relation is anti-symmetric if for , then . The less than relation is antisymmetric, .

Relation is transitive if and implies . for any . The equality relation is transitive, , as is the less than relation , .

Certain combinations of properties often arise. A homogeneous relation that is reflexive, symmetric, and transitive is said to be an equivalence relation. Equivalence relations include equality among the reals, or congruence among triangles. A homogeneous relation that is reflexive, anti-symmetric and transitive is a partial order relation, such as the less than or equal relation between reals. Finally, a homogeneous relation that is anti-symmetric and transitive is an order relation, such as the less than relation between reals.

Functions between sets and are a specific type of relationship that often arise in science. For a given input , theories that predict a single possible output are of particular scientific interest.

The above intuitive definition can be transcribed in precise mathematical terms as is a function if and implies . Since it's a particular kind of relation, a function is a triplet of sets , but with a special, common notation to denote the triplet by , with and the property that . The set is the domain and the set is the codomain of the function . The value from the domain is the argument of the function associated with the function value . The function value is said to be returned by evaluation .

As seen previously, a Euclidean space can be used to suggest properties of more complex spaces such as the vector space of continuous functions . A construct that will be often used is to interpret a vector within as a function, since with components also defines a function , with values . As the number of components grows the function can provide better approximations of some continuous function through the function values at distinct sample points .

The above function examples are all defined on a domain of scalars or naturals and returned scalar values. Within linear algebra the particular interest is on functions defined on sets of vectors from some vector space that return either scalars , or vectors from some other vector space , . The codomain of a vector-valued function might be the same set of vectors as its domain, . The fundamental operation within linear algebra is the linear combination with , . A key aspect is to characterize how a function behaves when given a linear combination as its argument, for instance or

Consider first the case of a function defined on a set of vectors that returns a scalar value. These can be interpreted as labels attached to a vector, and are very often encountered in applications from natural phenomena or data analysis.

Many different functionals may be defined on a vector space , and an insightful alternative description is provided by considering the set of all linear functionals, that will be denoted as . These can be organized into another vector space with vector addition of linear functionals and scaling by defined by

As is often the case, the above abstract definition can better be understood by reference to the familiar case of Euclidean space. Consider , the set of vectors in the plane with the position vector from the origin to point in the plane with coordinates . One functional from the dual space is , i.e., taking the second coordinate of the position vector. The linearity property is readily verified. For , ,

Given some constant value , the curves within the plane defined by are called the contour lines or level sets of . Several contour lines and position vectors are shown in Figure . The utility of functionals and dual spaces can be shown by considering a simple example from physics. Assume that is the height above ground level and a vector is the displacement of a body of mass in a gravitational field. The mechanical work done to lift the body from ground level to height is with the gravitational acceleration. The mechanical work is the same for all displacements that satisfy the equation . The work expressed in units can be interpreted as the number of contour lines intersected by the displacement vector . This concept of duality between vectors and scalar-valued functionals arises throughout mathematics, the physical and social sciences and in data science. The term “duality” itself comes from geometry. A point in with coordinates can be defined either as the end-point of the position vector , or as the intersection of the contour lines of two functionals and . Either geometric description works equally well in specifying the position of , so it might seem redundant to have two such procedures. It turns out though that many quantities of interest in applications can be defined through use of both descriptions, as shown in the computation of mechanical work in a gravitational field.

Consider now functions from vector space to another vector space . As before, the action of such functions on linear combinations is of special interest.

The image of a linear combination through a linear mapping is another linear combination , and linear mappings are said to preserve the structure of a vector space, and called homomorphisms in mathematics. The codomain of a linear mapping might be the same as the domain in which case the mapping is said to be an endomorphism.

Matrix-vector multiplication has been introduced as a concise way to specify a linear combination

with the columns of the matrix, . This is a linear mapping between the real spaces , , , and indeed any linear mapping between real spaces can be given as a matrix-vector product.

Vectors within the real space can be completely specified by real numbers, even though is large in many realistic applications. A vector within , i.e., a continuous function defined on the reals, cannot be so specified since it would require an infinite, non-countable listing of function values. In either case, the task of describing the elements of a vector space by simpler means arises. Within data science this leads to classification problems in accordance with some relevant criteria.

Many classification criteria are scalars, defined as a scalar-valued function on a vector space, . The most common criteria are inspired by experience with Euclidean space. In a Euclidean-Cartesian model of the geometry of a plane , a point is arbitrarily chosen to correspond to the zero vector , along with two preferred vectors grouped together into the identity matrix . The position of a point with respect to is given by the linear combination

Several possible classifications of points in the plane are depicted in Figure : lines, squares, circles. Intuitively, each choice separates the plane into subsets, and a given point in the plane belongs to just one in the chosen family of subsets. A more precise characterization is given by the concept of a partition of a set.

In precise mathematical terms, a partition of set is such that , for which . Since there is only one set ( signifies “exists and is unique”) to which some given belongs, the subsets of the partition are disjoint, . The subsets are labeled by within some index set . The index set might be a subset of the naturals, in which case the partition is countable, possibly finite. The partitions of the plane suggested by Figure are however indexed by a real-valued label, with .

A technique which is often used to generate a partition of a vector space is to define an equivalence relation between vectors, . For some element , the equivalence class of is defined as all vectors that are equivalent to , . The set of equivalence classes of is called the quotient set and denoted as , and the quotient set is a partition of . Figure depicts four different partitions of the plane. These can be interpreted geometrically, such as parallel lines or distance from the origin. With wider implications for linear algebra, the partitions can also be given in terms of classification criteria specified by functions.

The partition of by circles from Figure is familiar; the equivalence classes are sets of points whose position vector has the same size, , or is at the same distance from the origin. Note that familiarity with Euclidean geometry should not obscure the fact that some other concept of distance might be induced by the data. A simple example is statement of walking distance in terms of city blocks, in which the distance from a starting point to an address blocks east and blocks north is city blocks, not the Euclidean distance since one cannot walk through the buildings occupying a city block.

The above observations lead to the mathematical concept of a norm as a tool to evaluate vector magnitude. Recall that a vector space is specified by two sets and two operations, , and the behavior of a norm with respect to each of these components must be defined. The desired behavior includes the following properties and formal definition.

The magnitude of a vector should be a unique scalar, requiring the definition of a function. The scalar could have irrational values and should allow ordering of vectors by size, so the function should be from to , . On the real line the point at coordinate is at distance from the origin, and to mimic this usage the norm of is denoted as , leading to the definition of a function , .

Provision must be made for the only distinguished element of , the null vector . It is natural to associate the null vector with the null scalar element, . A crucial additional property is also imposed namely that the null vector is the only vector whose norm is zero, . From knowledge of a single scalar value, an entire vector can be determined. This property arises at key junctures in linear algebra, notably in providing a link to another branch of mathematics known as analysis, and is needed to establish the fundamental theorem of linear algbera or the singular value decomposition encountered later.

Transfer of the scaling operation property leads to imposing . This property ensures commensurability of vectors, meaning that the magnitude of vector can be expressed as a multiple of some standard vector magnitude .

Position vectors from the origin to coordinates on the real line can be added and . If however the position vectors point in different directions, , , then . For a general vector space the analogous property is known as the triangle inequality, for .

Note that the norm is a functional, but the triangle inequality implies that it is not generally a linear functional. Returning to Figure , consider the functions defined for through values

Sets of constant value of the above functions are also equivalence classes induced by the equivalence relations for .

, ;

, ;

, ;

, .

These equivalence classes correspond to the vertical lines, horizontal lines, squares, and circles of Figure . Not all of the functions are norms since is zero for the non-null vector , and is zero for the non-null vector . The functions and are indeed norms, and specific cases of the following general norm.

Denote by the largest component in absolute value of . As increases, becomes dominant with respect to all other terms in the sum suggesting the definition of an inf-norm by

This also works for vectors with equal components, since the fact that the number of components is finite while can be used as exemplified for , by , with .

Note that the Euclidean norm corresponds to , and is often called the -norm. The analogy between vectors and functions can be exploited to also define a -norm for , the vector space of continuous functions defined on .

The integration operation can be intuitively interpreted as the value of the sum from equation () for very large and very closely spaced evaluation points of the function , for instance . An inf-norm can also be define for continuous functions by

where sup, the supremum operation can be intuitively understood as the generalization of the max operation over the countable set to the uncountable set .

Norms are functionals that define what is meant by the size of a vector, but are not linear. Even in the simplest case of the real line, the linearity relation is not verified for , . Nor do norms characterize the familiar geometric concept of orientation of a vector. A particularly important orientation from Euclidean geometry is orthogonality between two vectors. Another function is required, but before a formal definition some intuitive understanding is sought by considering vectors and functionals in the plane, as depicted in Figure . Consider a position vector and the previously-encountered linear functionals

The component of the vector can be thought of as the number of level sets of times it crosses; similarly for the component. A convenient labeling of level sets is by their normal vectors. The level sets of have normal , and those of have normal vector . Both of these can be thought of as matrices with two columns, each containing a single component. The products of these matrices with the vector gives the value of the functionals

In general, any linear functional defined on the real space can be labeled by a vector

and evaluated through the matrix-vector product . This suggests the definition of another function ,

The function is called an inner product, has two vector arguments from which a matrix-vector product is formed and returns a scalar value, hence is also called a scalar product. The definition from an Euclidean space can be extended to general vector spaces. For now, consider the field of scalars to be the reals .

The inner product returns the number of level sets of the functional labeled by crossed by the vector , and this interpretation underlies many applications in the sciences as in the gravitational field example above. Inner products also provide a procedure to evaluate geometrical quantities and relationships.

In the number of level sets of the functional labeled by crossed by itself is identical to the square of the 2-norm

In general, the square root of satisfies the properties of a norm, and is called the norm induced by an inner product

A real space together with the scalar product and induced norm defines an Euclidean vector space .

In the point specified by polar coordinates has the Cartesian coordinates , , and position vector . The inner product

is seen to contain information on the relative orientation of with respect to . In general, the angle between two vectors with any vector space with a scalar product can be defined by

which becomes

in a Euclidean space, .

In two vectors are orthogonal if the angle between them is such that , and this can be extended to an arbitrary vector space with a scalar product by stating that are orthogonal if . In vectors are orthogonal if .

From two functions and , a composite function, , is defined by

Consider linear mappings between Euclidean spaces , . Recall that linear mappings between Euclidean spaces are expressed as matrix vector multiplication

The composite function is , defined by

Note that the intemediate vector is subsequently multiplied by the matrix . The composite function is itself a linear mapping

so it also can be expressed a matrix-vector multiplication

Using the above, is defined as the product of matrix with matrix

The columns of can be determined from those of by considering the action of on the the column vectors of the identity matrix . First, note that

The above can be repeated for the matrix giving

Combining the above equations leads to , or

Summary.

Linear functionals attach a scalar label to a vector, and preserve linear combinations

Linear functionals arise when establish vector magnitude and orientation

Linear mappings establish correspondences between vector spaces and preserve linear combinations

Composition of linear mappings is represented through matrix multiplication

A central interest in scientific computation is to seek simple descriptions of complex objects. A typical situation is specifying an instance of some object of interest through an -tuple with large . Assuming that addition and scaling of such objects can cogently be defined, a vector space is obtained, say over the field of reals with an Euclidean distance, . Examples include for instance recordings of medical data (electroencephalograms, electrocardiograms), sound recordings, or images, for which can easily reach into the millions. A natural question to ask is whether all the real numbers are actually needed to describe the observed objects, or perhaps there is some intrinsic description that requires a much smaller number of descriptive parameters, that still preserves the useful idea of linear combination. The mathematical transcription of this idea is a vector subspace.

The above states a vector subspace must be closed under linear combination, and have the same vector addition and scaling operations as the enclosing vector space. The simplest vector subspace of a vector space is the null subspace that only contains the null element, . In fact any subspace must contain the null element , or otherwise closure would not be verified for the particular linear combination . If , then is said to be a proper subspace of , denoted by .

Vector subspaces arise in decomposition or partitioning of a vector space. The converse, composition of vector spaces , is defined in terms of linear combination. A vector can be obtained as the linear combination

but also as

for some arbitrary . In the first case, is obtained as a unique linear combination of a vector from the set with a vector from . In the second case, there is an infinity of linear combinations of a vector from with another from to the vector . This is captured by a pair of definitions to describe vector space composition.

In the previous example, the essential difference between the two ways to express is that , but , and in general if the zero vector is the only common element of two vector spaces then the sum of the vector spaces becomes a direct sum.In practice, the most important procedure to construct direct sums or check when an intersection of two vector subspaces reduces to the zero vector is through an inner product.

The above concept of orthogonality can be extended to other vector subspaces, such as spaces of functions. It can also be extended to other choices of an inner product, in which case the term conjugate vector spaces is sometimes used. The concepts of sum and direct sum of vector spaces used linear combinations of the form . This notion can be extended to arbitrary linear combinations.

Note that for real vector spaces a member of the span of the vectors is the vector obtained from the matrix vector multiplication

From the above, the span is a subset of the co-domain of the linear mapping .

The wide-ranging utility of linear algebra results from a complete characterization of the behavior of a linear mapping between vector spaces , . For some given linear mapping the questions that arise are:

Can any vector within be obtained by evaluation of ?

Is there a single way that a vector within can be obtained by evaluation of ?

Linear mappings between real vector spaces , have been seen to be completely specified by a matrix . It is common to frame the above questions about the behavior of the linear mapping through sets associated with the matrix . To frame an answer to the first question, a set of reachable vectors is first defined.

By definition, the column space is included in the co-domain of the function , , and is readily seen to be a vector subspace of . The question that arises is whether the column space is the entire co-domain that would signify that any vector can be reached by linear combination. If this is not the case then the column space would be a proper subset, , and the question is to determine what part of the co-domain cannot be reached by linear combination of columns of . Consider the orthogonal complement of defined as the set vectors orthogonal to all of the column vectors of , expressed through inner products as

This can be expressed more concisely through the transpose operation

and leads to the definition of a set of vectors for which

Note that the left null space is also a vector subspace of the co-domain of , . The above definitions suggest that both the matrix and its transpose play a role in characterizing the behavior of the linear mapping , so analagous sets are define for the transpose .

The above low dimensional examples are useful to gain initial insight into the significance of the spaces . Further appreciation can be gained by applying the same concepts to processing of images. A gray-scale image of size by pixels can be represented as a vector with components, . Even for a small image with pixels along each direction, the vector would have components. An image can be specified as a linear combination of the columns of the identity matrix

with the gray-level intensity in pixel . Similar to the inclined plane example from §1, an alternative description as a linear combination of another set of vectors might be more relevant. One choice of greater utility for image processing mimics the behavior of the set that extends the second example in §1, would be for

For the simple scalar mapping , , the condition implies either that or . Note that can be understood as defining a zero mapping . Linear mappings between vector spaces, , can exhibit different behavior, and the condtion , might be satisfied for both , and . Analogous to the scalar case, can be understood as defining a zero mapping, .

In vector space , vectors related by a scaling operation, , , are said to be colinear, and are considered to contain redundant data. This can be restated as , from which it results that . Colinearity can be expressed only in terms of vector scaling, but other types of redundancy arise when also considering vector addition as expressed by the span of a vector set. Assuming that , then the strict inclusion relation holds. This strict inclusion expressed in terms of set concepts can be transcribed into an algebraic condition.

Introducing a matrix representation of the vectors

allows restating linear dependence as the existence of a non-zero vector, , such that . Linear dependence can also be written as , or that one cannot deduce from the fact that the linear mapping attains a zero value that the argument itself is zero. The converse of this statement would be that the only way to ensure is for , or , leading to the concept of linear independence.

Vector spaces are closed under linear combination, and the span of a vector set defines a vector subspace. If the entire set of vectors can be obtained by a spanning set, , extending by an additional element would be redundant since . This is recognized by the concept of a basis, and also allows leads to a characterization of the size of a vector space by the cardinality of a basis set.

The domain and co-domain of the linear mapping , , are decomposed by the spaces associated with the matrix . When , , the following vector subspaces associated with the matrix have been defined:

the column space of

the row space of

the null space of

the left null space of , or null space of

A partition of a set has been introduced as a collection of subsets such that any given element belongs to only one set in the partition. This is modified when applied to subspaces of a vector space, and a partition of a set of vectors is understood as a collection of subsets such that any vector except belongs to only one member of the partition.

Linear mappings between vector spaces can be represented by matrices with columns that are images of the columns of a basis of

Consider the case of real finite-dimensional domain and co-domain, , in which case ,

The column space of is a vector subspace of the codomain, , but according to the definition of dimension if there remain non-zero vectors within the codomain that are outside the range of ,

All of the non-zero vectors in , namely the set of vectors orthogonal to all columns in fall into this category. The above considerations can be stated as

The question that arises is whether there remain any non-zero vectors in the codomain that are not part of or . The fundamental theorem of linear algebra states that there no such vectors, that is the orthogonal complement of , and their direct sum covers the entire codomain .

In the vector space the subspaces are said to be orthogonal complements if , and . When , the orthogonal complement of is denoted as , .

Consideration of equality between sets arises in proving the above theorem. A standard technique to show set equality , is by double inclusion, . This is shown for the statements giving the decomposition of the codomain . A similar approach can be used to decomposition of .

(column space is orthogonal to left null space).

( is the only vector both in and ).

The remainder of the FTLA is established by considering , e.g., since it has been established in (v) that , replacing yields , etc.

Summary.

Vector subspaces are subsets of a vector space closed under linear combination

The simplest vector subspace is

Linear mappings are represented by matrices

Associated with matrix that represents mapping are four fundamental subspaces:

the column space of containing vectors reachable by ,

the left null space of containing vectors orthogonal to columns ,

the row space of

the null space of

A vector space can be formed from all linear mappings from the vector space to another vector space

with addition and scaling of linear mappings defined by and . Let denote a basis for the domain of linear mappings within , such that the linear mapping is represented by the matrix

When the domain and codomain are the real vector spaces , , the above is a standard matrix of real numbers, . For linear mappings between infinite dimensional vector spaces, the matrix is understood in a generalized sense to contain an infinite number of columns that are elements of the codomain . For example, the indefinite integral is a linear mapping between the vector space of functions that allow differentiation to any order,

and for the monomial basis , is represented by the generalized matrix

Truncation of the MacLaurin series with to terms, and sampling of at points , forms a standard matrix of real numbers

Values of function at are approximated by

with denoting the coordinates of in basis . The above argument states that the coordinates of , the primitive of are given by

as can be indeed verified through term-by-term integration of the MacLaurin series.

As to be expected, matrices can also be organized as vector space , which is essentially the representation of the associated vector space of linear mappings,

The addition and scaling of matrices is given in terms of the matrix components by